This week’s Spotlight is on Dr. Dan Ciresan, a senior researcher at IDSIA in Switzerland and a pioneer in using CUDA for Deep Neural Networks (DNNs).

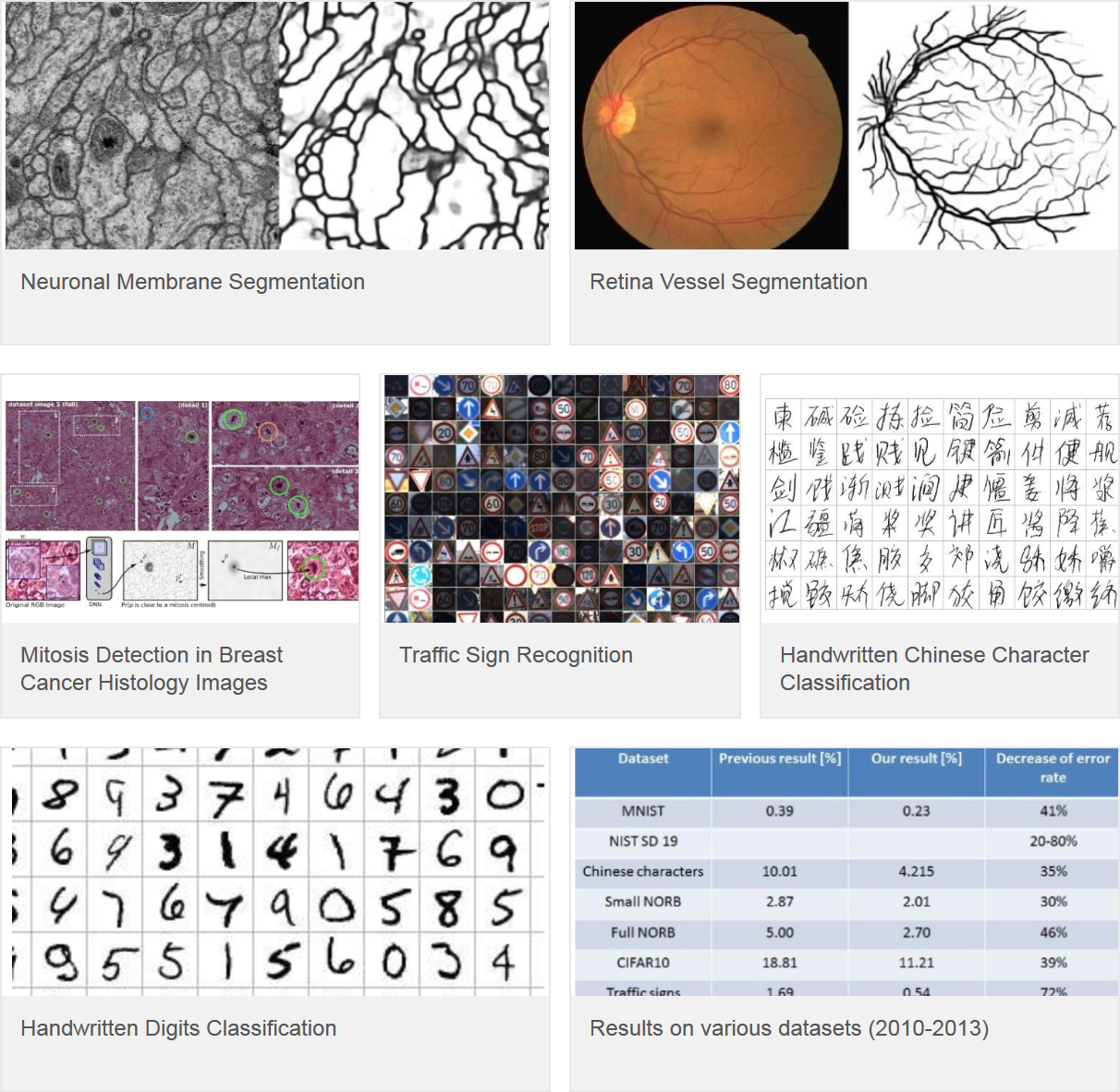

His methods have won international competitions on topics such as classifying traffic signs and recognizing handwritten Chinese characters. Dan presented a session on Deep Neural Networks for Visual Pattern Recognition at GTC in March 2014.

NVIDIA: Dan, tell us about your research at IDSIA.

Dan: I am continuously developing my Deep Neural Network framework and looking for new interesting applications. In the last three years we have won five international competitions on pattern recognition and improved the state of the art by 20-40% on many well-known datasets. One of our current projects involves developing an automatic system for trail following. When ready, we plan to mount it on a quadcopter and let it navigate through the woods.

NVIDIA: Why is Deep Neural Network research important?

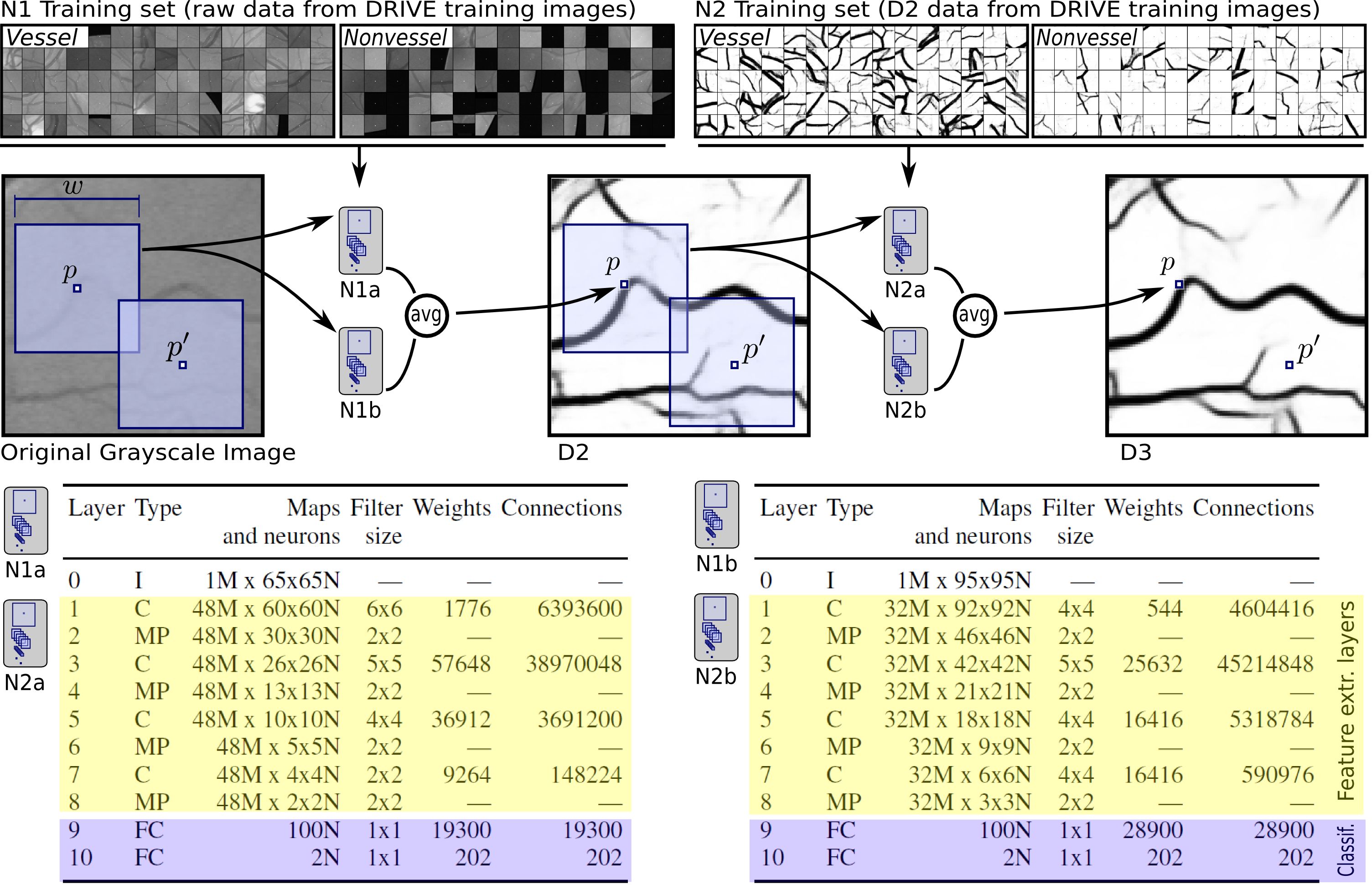

Dan: DNNs are arguably the best method for most pattern recognition problems. They already perform at human level on many important tasks like handwritten character recognition (including Chinese), neuronal membrane segmentation for connectomics, various automotive problems (traffic sign detection, lane detection, pedestrian tracking, and automatic steering), and mitosis detection. They are a general method that does not rely on human-designed features. Instead, DNNs learn their features from labeled samples. I think they are our best chance of having human-like perception and understanding in our devices.

NVIDIA: In what way is GPU computing well-suited to DNNs?

Dan: DNNs are massively parallel structures. They are built out of thousands to millions of identical units which perform the same computation on different data. Most of these units have no data interdependency, thus they can compute simultaneously.
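
To make the "no data interdependency" point concrete, here is a minimal sketch in plain Python. It is an illustration only, not Dan's CUDA framework: the `tanh` activation, the helper names `unit_output` and `layer_forward`, and the toy weights are all assumptions made for this example. Every unit in the layer reads the same inputs and its own weights, so the units can be evaluated in any order (or all at once on a GPU) and give the same result.

```python
import math


def unit_output(weights, bias, inputs):
    """One unit: weighted sum of the inputs plus a bias, passed through tanh."""
    return math.tanh(sum(w * x for w, x in zip(weights, inputs)) + bias)


def layer_forward(weight_rows, biases, inputs, order=None):
    """Evaluate every unit of a layer, visiting the units in the given order.

    Each unit depends only on the shared inputs and its own weights,
    so the visiting order cannot change the result.
    """
    n_units = len(weight_rows)
    order = range(n_units) if order is None else order
    outputs = [0.0] * n_units
    for i in order:
        outputs[i] = unit_output(weight_rows[i], biases[i], inputs)
    return outputs


# Hypothetical toy layer: 3 units, 2 inputs each.
weight_rows = [[0.5, -0.2], [0.1, 0.9], [-0.7, 0.3]]
biases = [0.0, 0.1, -0.1]
inputs = [1.0, 2.0]

forward = layer_forward(weight_rows, biases, inputs)
backward = layer_forward(weight_rows, biases, inputs, order=[2, 1, 0])
```

Because `forward` and `backward` are identical, the loop over units is a natural candidate for one GPU thread per unit.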

Training such a network is difficult on normal CPU clusters because during training there is a lot of data communication between the units. When the entire network can be put on a single GPU instead of a CPU cluster, everything is much faster: communication latency is reduced, bandwidth is increased, and size and power consumption are significantly decreased. One of the simplest but also the best training algorithms, Stochastic Gradient Descent, can run up to 40 times faster on a GPU as compared to a CPU.
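
For readers unfamiliar with Stochastic Gradient Descent, the following is a minimal CPU sketch, assuming a one-variable linear model with squared error rather than a deep network. The function name `sgd_linear_fit`, the learning rate, and the synthetic data are hypothetical choices for illustration; the interview does not describe Dan's implementation at this level of detail. The key idea is that the parameters are updated after every single sample instead of after a full pass over the data.

```python
import random


def sgd_linear_fit(samples, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w * x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)  # visit the samples in a new random order each epoch
        for x, y in data:
            err = (w * x + b) - y  # prediction error for this one sample
            w -= lr * err * x      # gradient of 0.5 * err**2 with respect to w
            b -= lr * err          # gradient of 0.5 * err**2 with respect to b
    return w, b


# Synthetic, noise-free data drawn from y = 2x + 1 (made up for this example).
samples = [(i / 10.0, 2.0 * (i / 10.0) + 1.0) for i in range(-10, 11)]
w, b = sgd_linear_fit(samples)
```

In a DNN the same per-sample update is applied to every weight in the network, which is the part that benefits from running on a GPU.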

NVIDIA: How many GPUs are you currently using for a given training run?

Dan: My code runs on one GPU, but I train multiple nets in parallel and then I average their results (the Multi-Column DNN method, which I introduced in my CVPR 2012 paper). Now that I am working with bigger and bigger problems I am planning to use multiple GPUs to train even bigger networks.
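
The averaging step can be sketched as follows. This is a simplified illustration, assuming each independently trained net (a "column") outputs one probability per class; the function names and the numbers are hypothetical, and the actual Multi-Column DNN method is described in the CVPR 2012 paper.

```python
def average_columns(column_outputs):
    """Average the per-class probabilities produced by several nets."""
    n_columns = len(column_outputs)
    n_classes = len(column_outputs[0])
    return [
        sum(column[c] for column in column_outputs) / n_columns
        for c in range(n_classes)
    ]


def predict(column_outputs):
    """Return the index of the class with the highest averaged probability."""
    averaged = average_columns(column_outputs)
    return max(range(len(averaged)), key=averaged.__getitem__)


# Made-up outputs of three nets for one input image and three classes.
outputs = [
    [0.6, 0.3, 0.1],  # this net alone would choose class 0
    [0.2, 0.5, 0.3],
    [0.1, 0.6, 0.3],
]
averaged = average_columns(outputs)
label = predict(outputs)
```

In this toy case the first net on its own picks class 0, while the averaged output picks class 1. Since each net is trained separately, each can occupy its own GPU without any communication during training.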

NVIDIA: What is the size of training data you’re working with? How long does it typically take to train?

Dan: It depends on the problem. The smallest dataset is tens of megabytes, the biggest tens of gigabytes. Training time varies from two hours to four weeks.

NVIDIA: Roughly speaking, how many FLOPS does it take to train a typical DNN?

Dan: A rough estimate would be from 1 to 1000 PFLOPS.

NVIDIA: Are you continuing to see better accuracy as the size of your training sets increases?

Dan: Yes, accuracy continues to improve as the size of the training set increases. I have run experiments on the Chinese characters dataset (in 2011 it was quite big, i.e. over 3GB) and the neural network model continued to improve as I used more data for training. Datasets are becoming bigger mostly because they have far more classes (categories). Several years ago datasets had on average tens of classes with up to tens of thousands of samples per class. Now we are tackling problems with thousands or even tens of thousands of classes. Discerning between so many classes is difficult because many of them tend to be very similar. This is why we need sufficient samples in each class, and thus a huge number of labeled samples.

NVIDIA: What challenges did you face in parallelizing the algorithms you use?

Dan: Before starting to write CUDA code I mainly wrote sequential CPU code. Going from one thread to tens of thousands required me to completely change how I was thinking. It was a very steep learning curve, but the results were impressive: after several days I was able to speed up one of the kernels by a factor of 20 compared with the original CPU version.

NVIDIA: Which CUDA features and GPU libraries do you use?

Dan: My code makes heavy use of function templates. They allow me to unroll the innermost loops of the algorithm and speed up the kernels by over 100%. I developed my code on the Tesla (GTX 280) and Fermi (GTX 580) architectures. Currently I am looking into porting the framework to Kepler and Maxwell. The new texture cache and shuffle instructions look especially promising.

In 2008, when I started to implement my DNN there were no dedicated libraries. CUBLAS could be used for simple networks like Multi-Layer Perceptrons, but not for Convolutional NNs. Having no other option, I implemented all my code directly in CUDA.

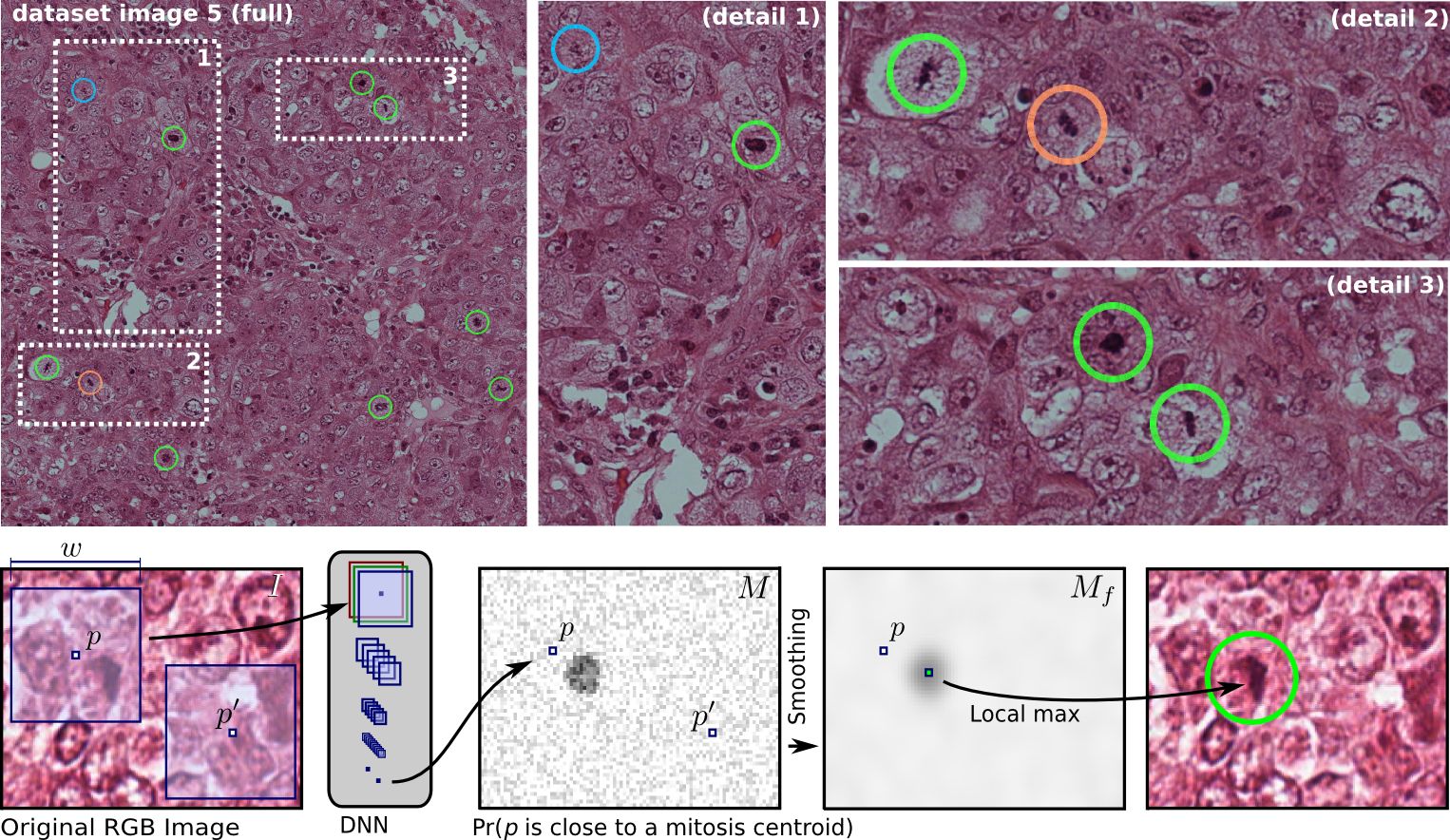

NVIDIA: Tell us about the MICCAI 2013 Grand Challenge on Mitosis Detection.

Dan: This is the latest competition in which my colleague, Alessandro Giusti, and I participated. We previously used DNNs to win five other competitions in the fields of handwritten character recognition, automotive vision systems and biomedical imaging.

Mitosis detection is important for cancer prognosis, but difficult even for experts. In 2012 our DNN won the ICPR 2012 Contest on Mitosis Detection in Breast Cancer Histological Images. The MICCAI 2013 Grand Challenge had a far more challenging dataset: more subjects, higher variation in slide staining, and difficult and ambiguous cases. Fourteen research groups (universities and companies) submitted results. Our entry was by far the best (26.5% higher F1-score than the second best entry).
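
For reference, the F1-score used to rank entries is the harmonic mean of precision and recall. The sketch below shows the standard formula; the detection counts are invented for illustration and are not results from the ICPR 2012 or MICCAI 2013 competitions.

```python
def f1_score(true_pos, false_pos, false_neg):
    """Harmonic mean of precision and recall, computed from detection counts."""
    detected = true_pos + false_pos  # everything the detector reported
    actual = true_pos + false_neg    # everything that is really a mitosis
    precision = true_pos / detected if detected else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Hypothetical counts: 80 correct detections, 20 false alarms, 40 misses.
score = f1_score(true_pos=80, false_pos=20, false_neg=40)
```

Because the harmonic mean is dominated by the smaller of the two values, a detector cannot score well by trading many false alarms for few misses, or the reverse.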

NVIDIA: Do you see DNNs being used for applications beyond visual pattern recognition?

Dan: Visual pattern recognition is by itself a huge field, comprising classification, detection and segmentation of any visual input, including normal 2D images and videos, but also depth images, multispectral images, etc. Furthermore, DNNs are already achieving state-of-the-art results for speech recognition and for natural language processing (NLP). I have run some preliminary experiments for speech recognition, and DNNs work really well.

NVIDIA: When did you first see the promise of GPU computing?

Dan: Back in 2006 I was working on a new variant of a Hough transform for line detection. The algorithm is computationally intensive but easy to parallelize on GPUs, as there are thousands of lines that can be searched for in parallel. I was considering rewriting the algorithm with graphics primitives (CUDA was not yet released), but after careful consideration I decided it would be too time-consuming.

In 2008 I was in the last year of my Ph.D. program and I was running experiments on 20 computers for my thesis. Even so, I had to use small networks because I had limited time to train them. Then my Ph.D. adviser bought a GTX 280 the week it was released. I spent much of that summer optimizing my code in CUDA. That was the beginning of the first huge convolutional DNN.

NVIDIA: What will we see in the next five to ten years with DNNs?

Dan: We need better algorithms that can be easily distributed over multiple GPUs or computing nodes. I think DNNs will be ubiquitous in all pattern recognition-related problems. DNNs are already beginning to be used in industry. Our computers, tablets, phones and other mobile devices will have human-like perception.

DNNs will have a huge impact in the biomedical field. They will be used for developing new drugs (e.g. automatic screening), as well as for disease prevention and early detection (e.g. glaucoma, cancer). They will help improve our understanding of the human brain.

Right now they are the best candidate for the initial stage of building the connectome. In turn, we will use those insights into the physiology of the brain to improve upon the systems that are currently being used to understand the brain.

And DNNs will finally bring autonomous cars onto the streets. DNNs are more robust than current non-NN methods. Most autonomous cars rely exclusively on information acquired by LIDAR and RADAR. DNNs can be used both to analyze that 3D information and even to replace or enhance LIDAR with normal, simple vision-based navigation.
