Thrust is a parallel algorithms library which resembles the C++ Standard Template Library (STL). Thrust’s High-Level interface greatly enhances programmer Productivity while enabling performance portability between GPUs and multicore CPUs. Interoperability with established technologies (such as CUDA, TBB, and OpenMP) facilitates integration with existing software. Develop High-Performance applications rapidly with Thrust!

This excerpt from the Thrust home page perfectly summarizes the benefits of the Thrust library. Thrust enables expressive algorithmic programming via a vocabulary of parallel building blocks that let you rapidly develop fast, portable parallel algorithms. If you are a C++ programmer, and especially if you use template libraries like the STL and Boost C++ libraries, then you will find Thrust familiar. Like the STL, Thrust helps you focus on algorithms, rather than on platform-specific implementation details. At the same time, Thrust’s modular design allows low-level customization and interoperation with custom platform-specific code such as CUDA kernels and libraries.

This excerpt from the Thrust home page perfectly summarizes the benefits of the Thrust library. Thrust enables expressive algorithmic programming via a vocabulary of parallel building blocks that let you rapidly develop fast, portable parallel algorithms. If you are a C++ programmer, and especially if you use template libraries like the STL and Boost C++ libraries, then you will find Thrust familiar. Like the STL, Thrust helps you focus on algorithms, rather than on platform-specific implementation details. At the same time, Thrust’s modular design allows low-level customization and interoperation with custom platform-specific code such as CUDA kernels and libraries.

Thrust is High-Level

As described in the article “Thrust, a Productivity-Oriented Library for CUDA”, Thrust aims to solve two types of problems: problems that can be “implemented efficiently without a detailed mapping to the target architecture”, and problems that don’t merit or won’t receive (for whatever reason) significant optimization attention from the programmer. High-level primitives make it easier to capture programmer intent; developers describe what to compute, without dictating how to compute it. This allows the library to make informed decisions about how to implement the intended computation.

Thrust provides an STL-style vector container (with host_vector and device_vector implementations), and a suite of high-level algorithms including searching, sorting, copying, merging, transforming, reordering,reducing, prefix sums, and set operations. Here is an oft-repeated complete example program from the Thrust home page, which generates random numbers serially and then transfers them to the GPU where they are sorted.

#include <thrust/host_vector.h>

#include <thrust/device_vector.h>

#include <thrust/generate.h>

#include <thrust/sort.h>

#include <thrust/copy.h>

#include <algorithm>

#include <cstdlib>

int main(void)

{

// generate 32M random numbers serially

thrust::host_vector h_vec(32 << 20);

std::generate(h_vec.begin(), h_vec.end(), rand);

// transfer data to the device

thrust::device_vector d_vec = h_vec;

// sort data on the device

thrust::sort(d_vec.begin(), d_vec.end());

// transfer data back to host

thrust::copy(d_vec.begin(), d_vec.end(), h_vec.begin());

return 0;

}

Here is another complete example program that uses thrust::reduce_by_key to compute a run-length encodingof an array of characters. This example also demonstrates the simplest of Thrust’s “fancy iterators”, thrust::constant_iterator, which simply returns the same value (1 in this case) every time it is dereferenced.

#include <thrust/device_vector.h>

#include <thrust/copy.h>

#include <thrust/iterator/constant_iterator.h>

#include <thrust/reduce.h>

#include <iostream>

#include <iterator>

int main(void)

{

// input data on the host

const char data[] = "aaabbbbbcddeeeeeeeeeff";

const size_t N = (sizeof(data) / sizeof(char)) - 1;

// copy input data to the device

thrust::device_vector input(data, data + N);

// allocate storage for output data and run lengths

thrust::device_vector output(N);

thrust::device_vector lengths(N);

// print the initial data

std::cout << "input data:" << std::endl;

thrust::copy(input.begin(), input.end(),

std::ostream_iterator(std::cout, ""));

std::cout << std::endl << std::endl;

// compute run lengths

size_t num_runs = thrust::reduce_by_key(

input.begin(), input.end(), // input key sequence

thrust::constant_iterator(1), // input value sequence

output.begin(), // output key sequence

lengths.begin() // output value sequence

).first - output.begin(); // compute output size

// print the output

std::cout << "run-length encoded output:" << std::endl;

for(size_t i = 0; i < num_runs; i++)

std::cout << "(" << output[i] << "," << lengths[i] << ")";

std::cout << std::endl;

return 0;

}

Other fancy iterators include counting_iterator, discard_iterator, permutation_iterator, reverse_iterator, transform_iterator, and zip_iterator. For more information on fancy iterators, check out the Thrust Quick Start Guide.

Thrust is High-Performance

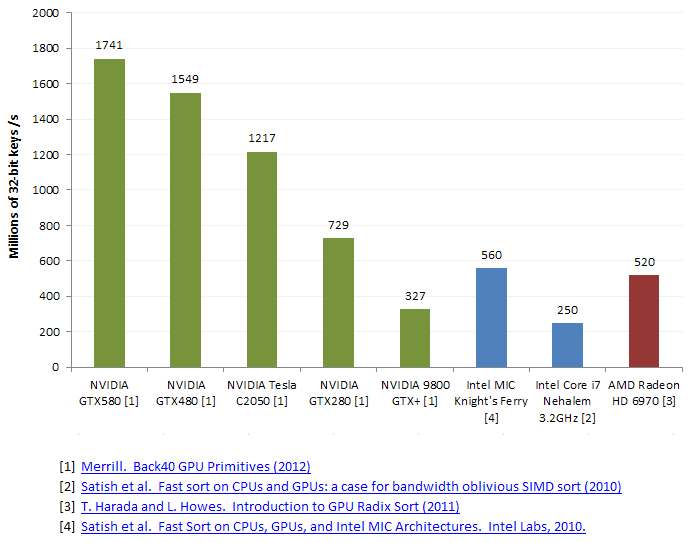

Focusing on a concise set of useful high-level primitives allows the Thrust developers to put a lot of effort into tuning the implementation of the primitives for high performance. As a software abstraction layer, Thrust allows existing applications to benefit transparently as the state-of-the-art in algorithm design and performance tuning evolve. As an example, Thrust’s radix sort uses Duane Merrill’s expert optimized code, resulting in the fastest single-processor sorting performance to be found anywhere (see the following comparison chart), and this code is continuously improving.

Being a C++ template library also provides some efficiency advantages to Thrust. Because template implementations are resolved at compile time, compositions of thrust algorithms can be automatically fused into a single kernel that runs on the device, amplifying computational intensity and reducing bandwidth bottlenecks. A simple example of this is thrust::transform_reduce, which combines an arbitrary transformation function with a parallel reduction. Thrust enables more advanced fusion by providing thrust::transform_iterator, which allows custom transformations to be applied to every element of a vector and use the result as input to any other Thrust algorithm. Below is a simple example from Jared Hoberock’s GTC 2010 presentation Thrust By Example that demonstrates fusing thrust::transform_iterator withthrust::reduce to efficiently compute a vector norm.

// define transformation f(x) -> x^2

struct square

{

__host__ __device__ float operator()(float x) { return x * x; }

};

// fusion with transform_iterator

return sqrt(reduce(make_transform_iterator(x.begin(), square()),

make_transform_iterator(x.end(), square()),

0.0f,

plus()));

Thrust is Open Source

Thrust is free and open-source software provided under the Apache 2.0 license. Thrust is under active development. The primary developers are Jared Hoberock and Nathan Bell (with contributions from others). Multiple commercial, research, and open-source software projects use Thrust. Here are some examples.

- odeint, featured at last month’s C++ Now conference, uses Thrust extensively to parallelize ordinary differential equation solvers.

- Alenka is a query engine built almost entirely with Thrust.

- Cusp is a library for sparse linear algebra and graph computations on CUDA.

Thrust is Interoperable and Portable

No language, library, or tool is a perfect solution for every problem, so interoperability is crucial. At the most basic level, Thrust provides simple APIs for wrapping CUDA device pointers so that they can be passed to any Thrust algorithm, and for extracting device pointers from Thrust vectors, which makes it interoperable with the entire CUDA ecosystem of compilers, libraries, and tools.

Thrust is not just a CUDA library. The latest versions of Thrust are also interoperable with parallel computing frameworks for multicore CPUs, via backend support for OpenMP and Threading Building Blocks (TBB). Thrust applications can select among the CUDA, TBB, and OpenMP backends either at compile time, or by explicitly selecting a backed by using thrust::cuda::vector, thrust::omp::vector, or thrust::tbb::vector rather than justthrust::device_vector. In this way, not only can a single Thrust application combine multiple backends, but it can be portable across GPUs and multicore CPUs.

Hardware portability is challenging, because often what you want is not just functional portability, but the much more elusive performance portability. Thrust’s high-level interface enables it to incorporate carefully tuned implementations for each backend, providing performance portability across parallel frameworks and hardware architectures.

Thrust is Customizable

Many algorithms in Thrust are based on customization through the use of function objects, or “functors”. The vector norm example shown previously demonstrates this. The second argument to thrust::make_transform_iteratoris the square functor, which is simply a struct with an operator() method that squares its input. Each time thetransform_iterator is dereferenced, the square of the input value is returned. Many Thrust algorithms can take user-defined functor arguments that implement custom operators, transformations, and predicates. This enables sophisticated computations to be implemented with just a small amount of custom code.

With its tag-based algorithm dispatch system, Thrust version 1.6 enables developers to embed fine-grained customization into a Thrust program, letting them provide custom implementations for specific algorithms, rather than just specify functions called by the algorithms. Users can derive new tags and implement custom versions of algorithms, as shown on the Derived Systems wiki page.

Developers can also implement entirely novel parallel backends starting with Thrust 1.6, for example to target a different processor architecture or programming framework. As described on the Standalone Systems wiki page, “Building a stand-alone Thrust system is similar to customizing an existing one. However, because a standalone system has no derived algorithm implementations to rely on, all primitives must be implemented.”

Limitations of Thrust

There are a few limitations of Thrust. Some of these are by design, while others are just artifacts of the current implementation or current limitations of Thrust on the CUDA platform.

One limitation that I have seen come up a few times with users is the lack of a vocabulary for expressing multi-dimensional problems. This is by design; the Thrust designers view it as a “non-goal”, according to the Thrust Roadmap. A more modular approach would be to design a multi-dimensional data structures library that can interoperate with Thrust.

Thrust algorithms are largely synchronous on the GPU in their current design. For example, there is no current support for mapping thrust calls to CUDA streams, although it is on the roadmap. Some algorithms, such as thrust::reduceand thrust::inner_product, return their scalar results to the calling host thread, which makes them inherently synchronous since this requires calling a synchronous cudaMemcpy. Perhaps they could be made asynchronous in the future if and when support for CUDA streams is added.

As a template library, Thrust is entirely defined in header files, so any change results in a recompile of the Thrust code, rather than just a relink as with a binary library. However, the benefits of C++ template metaprogramming typically outweigh this inconvenience: you get device code that is constructed specifically for your architecture and problem type.

Finally, Thrust is not all things to all people. Many programmers need a productive, expressive interface to high-level algorithm primitives. When absolute flexibility and performance are secondary, and customization needs are minimal, Thrust can be a perfect fit. For those who need greater control over the device implementation of their algorithms, Thrust might not be a great fit. I have seen cases where attempts to map complex or multi-dimensional problems to Thrust’s containers and algorithms results in overly convoluted and brittle code (just as can happen with the STL). In these situations, Thrust can augment custom CUDA code or other libraries, but it shouldn’t replace them.

Summary

Thrust provides programmers with expressive algorithmic parallel programming. Its high-level interface, high-performance implementations, interoperability and portability make it a powerful tool for C++ programmers, so visit the Thrust home page and read the Quick Start Guide to begin coding at the speed of light! We plan to provide more Thrust examples and discussion here on Parallel Forall in the future, so make sure you come back!