In the previous CUDA C++ post we dove in to 3D finite difference computations in CUDA C/C++, demonstrating how to implement the x derivative part of the computation. In this post, let’s continue by exploring how we can write efficient kernels for the y and z derivatives. As with the previous post, code for the examples in this post is available for download on Github.

In the previous CUDA C++ post we dove in to 3D finite difference computations in CUDA C/C++, demonstrating how to implement the x derivative part of the computation. In this post, let’s continue by exploring how we can write efficient kernels for the y and z derivatives. As with the previous post, code for the examples in this post is available for download on Github.

Y and Z Derivatives

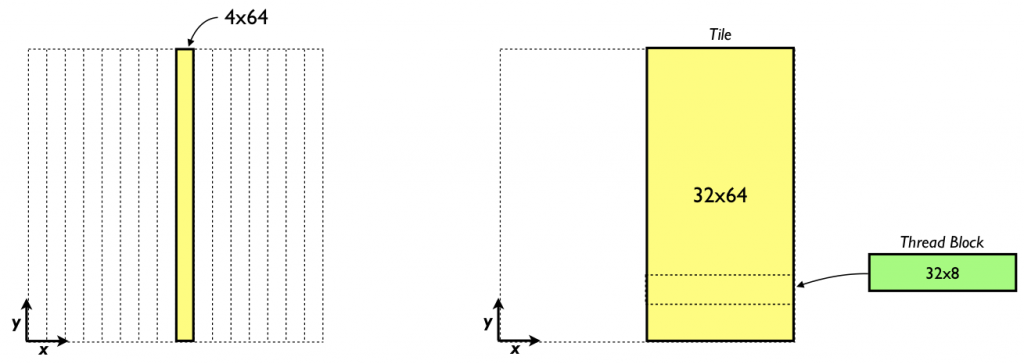

We can easily modify the x derivative code to operate in the other directions. In the x derivative each thread block calculates the derivatives in an x, y tile of 64 × sPencils elements. For the y derivative we can have a thread block calculate the derivative on a tile of sPencils × 64 elements in x, y, as depicted on the left in the figure below.

Likewise, for the z derivative a thread block can calculate the derivative in a x, z tile of sPencils × 64 elements. The kernel below shows the y derivative kernel using this approach.

__global__ void derivative_y(float *f, float *df)

{

__shared__ float s_f[my+8][sPencils];

int i = blockIdx.x*blockDim.x + threadIdx.x;

int j = threadIdx.y;

int k = blockIdx.y;

int si = threadIdx.x;

int sj = j + 4;

int globalIdx = k * mx * my + j * mx + i;

s_f[sj][si] = f[globalIdx];

__syncthreads();

if (j < 4) {

s_f[sj-4][si] = s_f[sj+my-5][si];

s_f[sj+my][si] = s_f[sj+1][si];

}

__syncthreads();

df[globalIdx] =

( c_ay * ( s_f[sj+1][si] - s_f[sj-1][si] )

+ c_by * ( s_f[sj+2][si] - s_f[sj-2][si] )

+ c_cy * ( s_f[sj+3][si] - s_f[sj-3][si] )

+ c_dy * ( s_f[sj+4][si] - s_f[sj-4][si] ) );

}

By using the shared memory tile we can ensure that each element from global memory is read in only once.

__shared__ float s_f[my+8][sPencils];

The disadvantage of this approach is that with sPencils or 4 points in x for these tiles we no longer have perfect coalescing. The performance results bear this out; we achieve roughly half the performance of the x derivative kernel.

Using shared memory tile of 4 x 64 RMS error: 7.277675e-06 MAX error: 2.861023e-05 Average time (ms): 0.044683 Average Bandwidth (GB/s): 46.933792

In terms of accuracy, we obtain the same maximum error of the x derivative, but a different (not substantially worse) RMS error for essentially the same function. This difference is due to the order in which the accumulation is done on the host, simply swapping the order of the loops in the host code error calculation would produce the same results.

One way to recover perfect coalescing is to expand the number of pencils in the shared memory tile. For the Tesla K20 (Kepler) this would require 32 pencils. Such a tile is shown on the right of the figure earlier in this section. Using such an approach would require a shared memory tile of 9216 bytes, which is not a problem. However, with a one-to-one mapping of threads to elements where the derivative is calculated, a thread block of 2048 threads would be required. The K20 has a limits of 1024 threads per thread block and 2048 threads per multiprocessor. We can work around these limits by letting each thread calculate the derivative for multiple points. If we use a thread block of 32×8×1 and have each thread calculate the derivative at eight points, as opposed to a thread block of 4×64×1, and have each thread calculate the derivative at only one point, we launch a kernel with the same number of blocks and threads per block, but regain perfect coalescing. The following code accomplishes this.

__global__ void derivative_y_lPencils(float *f, float *df)

{

__shared__ float s_f[my+8][lPencils];

int i = blockIdx.x*blockDim.x + threadIdx.x;

int k = blockIdx.y;

int si = threadIdx.x;

for (int j = threadIdx.y; j < my; j += blockDim.y) {

int globalIdx = k * mx * my + j * mx + i;

int sj = j + 4;

s_f[sj][si] = f[globalIdx];

}

__syncthreads();

int sj = threadIdx.y + 4;

if (sj < 8) {

s_f[sj-4][si] = s_f[sj+my-5][si];

s_f[sj+my][si] = s_f[sj+1][si];

}

__syncthreads();

for (int j = threadIdx.y; j < my; j += blockDim.y) {

int globalIdx = k * mx * my + j * mx + i;

int sj = j + 4;

df[globalIdx] =

( c_ay * ( s_f[sj+1][si] - s_f[sj-1][si] )

+ c_by * ( s_f[sj+2][si] - s_f[sj-2][si] )

+ c_cy * ( s_f[sj+3][si] - s_f[sj-3][si] )

+ c_dy * ( s_f[sj+4][si] - s_f[sj-4][si] ) );

}

}

Here we set lPencils equal to 32. This code is similar to the above y derivative code except the variable j is a loop index rather than being calculated once. When we use a large amount of shared memory per thread block, we should check to see the effect of whether and by how much the shared memory use limits the number of threads that reside on a multiprocessor. Each thread block requires 9216 bytes of shared memory, and with a limit of 48 KB of shared memory per multiprocessor, this translates to a maximum of 5 thread blocks that can reside at one time on a multiprocessor. (Compiling with --ptxas-options=-v indicates each thread uses 10 registers which will not limit the number of thread blocks per multiprocessor.) With a thread block of 32×8 threads, the number of threads that can reside on a multiprocessor for this kernel is 1280 versus a maximum of 2048 on the K20. The occupancy is 1280/2048 or 0.63, which is not a problem in general, especially since we are using eight-fold instruction-level parallelism via the loops over j. Here are the results for this kernel.

Using shared memory tile of 32 x 64 RMS error: 7.277675e-06 MAX error: 2.861023e-05 Average time (ms): 0.026755 Average Bandwidth (GB/s): 78.382972

You might wonder if using such a larger number of pencils in the shared memory tile will improve performance of the x derivative code presented earlier (81 GB/s). This ends up not being the case.

Using shared memory tile of 64 x 32 RMS error: 7.277675e-06 MAX error: 2.861023e-05 Average time (ms): 0.030123 Average Bandwidth (GB/s): 69.619162

Recall that for the x derivative we already have perfect coalescing for the case with four pencils. Since the occupancy of this kernel is high there is no benefit from the added instruction-level parallelism. As a result, the additional code to loop over portions of the shared-memory tile simply add overhead and as a result performance decreases. We handle the z derivative in the same way as the y derivative, and achieve comparable performance.

In the past several posts we have explored the use of shared memory to optimize kernel performance. The transpose example showed how shared memory can be used to help global memory coalescing, and in the finite difference code we showed how shared memory can assist with data reuse.