The need to train their deep neural networks as fast as possible led the Evolving Artificial Intelligence Laboratory at the University of Wyoming to harness the power of NVIDIA Tesla GPUs starting in 2012 to accelerate their research.

“The speedups GPUs provide for training deep neural networks are well-documented and allow us to train models in a week that would otherwise take months,” said Jeff Clune, Assistant Professor, Computer Science Department and Director of the Evolving Artificial Intelligence Laboratory. “And algorithms continuously improve. Recently, NVIDIA’s cuDNN technology allowed us to speed up our training time by an extra 20% or so.”

Clune’s Lab, which focuses on evolving artificial intelligence with a major focus on large-scale, structurally organized neural networks, has garnered press from some of the largest media outlets, including BBC, National Geographic, NBC News, The Atlantic and featured on the cover of Nature in May 2015.

[The following video shows off work from the Evolving AI Lab on visualizing deep neural networks. Keep reading to learn more about this work!]

For this Spotlight interview, I had the opportunity to talk with Jeff Clune and two of his collaborators, Anh Nguyen, a Ph.D. student at the Evolving AI Lab and Jason Yosinski, a Ph.D. candidate at Cornell University.

Brad: How are you using deep neural networks (DNNs)?

We have many research projects involving deep neural networks. Our Deep Learning publications to date involve better understanding DNNs. Our lab’s research covers:

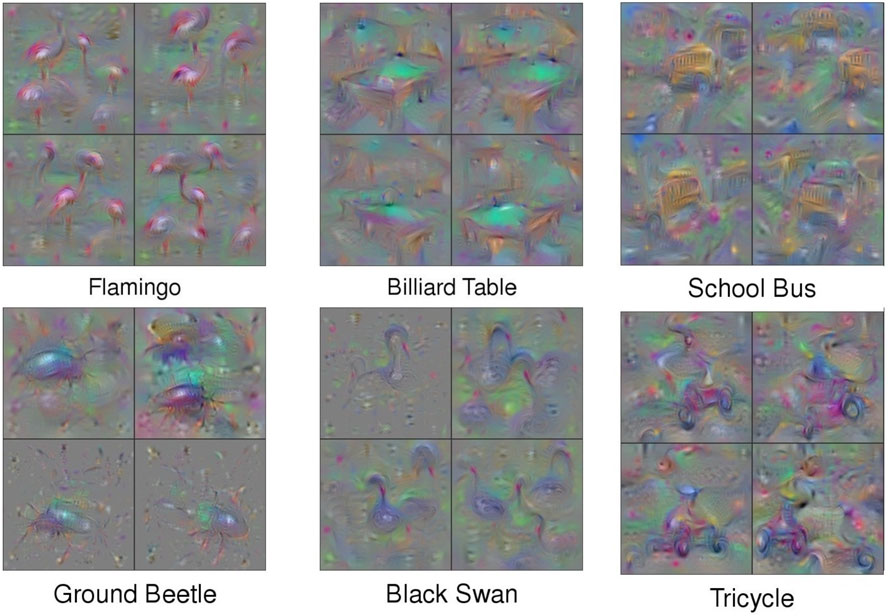

Deep Visualization: This work investigates how DNNs perform the amazing feats that they do. In a new paper, we create images of what every neuron in a DNN “wants to see”, allowing us to visualize what features the network learned (Figure 1).

- Transfer Learning: We investigate how to best transfer features learned from one (usually large) data set to another (usually small) dataset. Our talk at NIPS and the corresponding paper provide surprising insights about transfer learning and practical advice for those who want to take advantage of it.

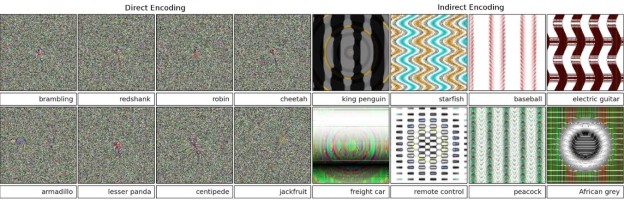

- Fooling DNNs: Our paper “Deep Neural Networks are Easily Fooled” highlighted the differences between how DNNs and humans see the world, and showed that it is easy to generate images that are completely unrecognizable to humans (e.g. TV static, Figure 2) that DNNs will declare with certainty to be things like starfish and motorcycles. That paper thus raises concerns about the security and reliability of applications that use DNNs, and motivates research into fixing this problem.

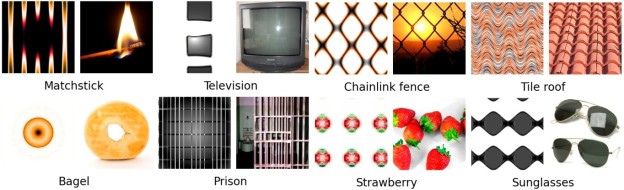

- Innovation Engines: We are also using DNNs to train AI to be creative and automatically produce art (and eventually produce creative solutions to challenging engineering problems). We wrote more about that in the Innovation Engine paper. (Figure 3)

B: Your recent research on being able to fool DNNs received a lot of attention. Can you share some background and details on that project?

Deep neural networks have recently been achieving state-of-the-art performance on a variety of pattern-recognition tasks, most notably visual classification problems. In this research, we point out a limitation of these networks, which is that they are easily fooled. Specifically, we can produce unrecognizable images (called “fooling images”, Figure 2) that the networks believe with near certainty to be familiar objects (e.g. a school bus, tiger, etc.).

This video describes the main results, methods, and implications of the work.

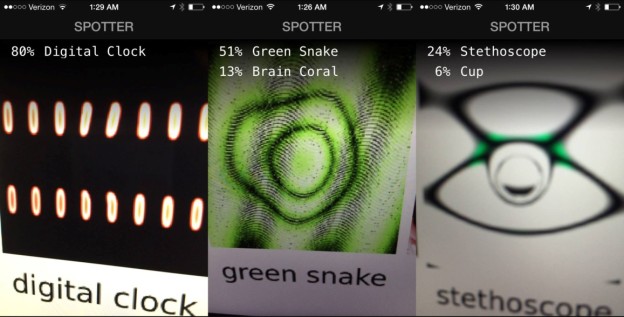

We demonstrate this fooling phenomenon on DNNs of different architectures (LeNet, AlexNet, and GoogLeNet) and DNNs trained on different datasets (MNIST and ImageNet). Surprisingly, the fooling examples generalize across networks trained with different architectures and random initializations. Dileep George even tried pointing his cell phone DNN app at a laptop that had our fooling images pulled up and the app was fooled (Figure 4), showing that these images fool DNNs even when seen from different scales, angles, lighting conditions, and with different networks! We tried a few different techniques to train DNNs to be less easily fooled such as a) training a network to recognize fooling examples; and b) evaluating an image using an ensemble of models; however, they do not help ameliorate the problem.

The code, images and more details regarding this project can be found at: http://EvolvingAI.org/fooling.

B: Have you figured out what it will take to help DNNs ignore the illusions?

We tried a few different ways to help DNNs become immune to fooling images, but none of them worked. Many other teams are working on this issue as well. The fact that DNNs are easily fooled is a deep problem that does not afford an easy solution.

However, we have recently figured out how to synthetically produce images that cause a neuron to fire and that are recognizable. That does not solve the fooling problem (because unrecognizable images can also cause that neuron to fire), but producing these recognizable visualizations is very helpful because it tells us what the neuron has learned. We can do that either for a final, output neuron that corresponds to a specific class, such as school bus or motorcycle, or any intermediate neuron. That means we can now visualize all the features that are learned in a deep neural network! We think the images that result are pretty fascinating. They can be viewed here. A small set of examples is provided in Figure 1 above. The paper that describes how we make these images also describes a new “Deep Visualization Toolbox” that we are releasing as open-source that allows researchers to view, in real-time, how each neuron in a DNN responds to an image or a live webcam feed. Here is a video tour of the toolbox [ed.: also embedded at the top of the post]. Overall, these efforts are finally shining some light into these DNNs so we can understand how they work, which is important because they were previously regarded as rather impenetrable, inscrutable “black boxes.”

B: What do you see as being the biggest challenge for your research?

Speed. It takes us nearly six days to train an AlexNet DNN with Caffe. Other groups (like Baidu) can now train the same network in under nine hours! If we could similarly train networks that fast, we could iterate our scientific process faster, try more ideas, and train more powerful models.

Thus, current efforts by NVIDIA and other companies that speed up DNN training, especially by speeding up DNN packages like Caffe and Torch, are greatly helpful and will enable further advancements in our lab and for the entire field.

B: What computing technologies are you using?

As for the hardware infrastructure, we mainly work on the University of Wyoming’s Advanced Research Computing Center. Many people in the university and state government have had the vision and leadership to strongly support investments in high-performance computing for research, and their actions have enabled the research that we do.

On the software side, we harness a variety of different high-performance computing technologies such as Caffe, Sferes, MPI, TBB, and CUDA.

B: How do you decide when to use a deep learning framework versus writing your own code or using a CUDA library directly?

We often want to test new ideas as quickly as possible. If it is possible to test our idea within a framework, such as the Caffe Deep Learning framework or the Sferes evolutionary computation framework, we prefer to go that route.

B: Why Caffe over other frameworks?

We started using Caffe because it was simple to use to train networks on ImageNet and because we liked the ability to code in C++ and Python. We continue using it because it has a large active user base which tends to mean features are added from new papers quickly. The model zoo also offers a helpful place for developers to share models, and releasing our code on, and results for, such a popular framework makes it easy for the community to reproduce our results and use our code to make their own research advances.