This year’s ImageNet Large Scale Visual Recognition Challenge (ILSVRC) is about to begin. Every year, organizers from the University of North Carolina at Chapel Hill, Stanford University, and the University of Michigan host the ILSVRC, an object detection and image classification competition, to advance the fields of machine learning and pattern recognition. Competitors are given more than 1.2 million images from 1,000 different object categories, with the objective of developing the most accurate object detection and classification approach. After the data set is released, teams have roughly three months to create, refine and test their approaches. This year, the data will be released on August 14, and the final result submission deadline is November 13. Read on to learn how competing teams can get free access to the latest GPU-accelerated cloud servers from NVIDIA and IBM Cloud.

About the Challenge

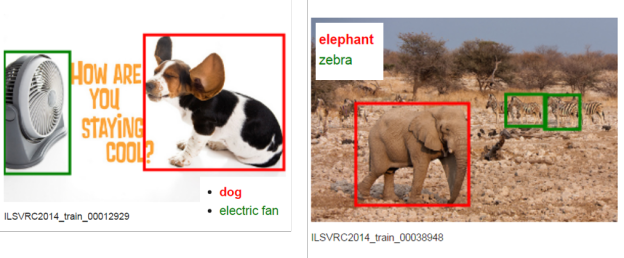

Teams compete annually to develop the most accurate recognition systems, and each year the sub-tasks become more complex and challenging. Because no algorithm developed to date classifies every image correctly, accuracy is measured as an error rate: the winning submission is the one with the lowest percentage of incorrectly classified images. When ILSVRC started in 2010, teams had a single assignment: classify the contents of an image (identify the objects it contains) and provide a list of the top five classifications with their respective probabilities. A prediction counts as correct if the true category appears anywhere in those five guesses, which is why results are reported as top-five error rates. Since then, the organizers have added sub-tasks for classification with localization and for object detection, which require identifying objects and specifying their locations with bounding boxes. Bounding boxes matter because most pictures contain multiple objects. To illustrate the requirements of this type of task, Figure 1 shows two sample ImageNet training images annotated with their object categories and bounding boxes.
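
The two scoring ideas described above, top-five classification error and matching a predicted bounding box to the ground truth, can be sketched as follows. This is a minimal illustration, not the official ILSVRC evaluation toolkit: the function names are hypothetical, and the 0.5 intersection-over-union (IoU) threshold for accepting a predicted box is the commonly used criterion, stated here as an assumption.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def top5_error(predictions: Sequence[Sequence[str]], labels: Sequence[str]) -> float:
    """Fraction of images whose true label is missing from the first five guesses."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need equally many predictions and labels (at least one)")
    misses = sum(1 for guesses, truth in zip(predictions, labels)
                 if truth not in list(guesses)[:5])
    return misses / len(labels)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def box_matches(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """Assumed criterion: a predicted box counts as correct if IoU >= threshold."""
    return iou(pred, truth) >= threshold


if __name__ == "__main__":
    preds = [["cat", "dog", "fox", "lynx", "tiger"],
             ["car", "bus", "truck", "van", "tram"]]
    truth = ["tiger", "bicycle"]
    print(top5_error(preds, truth))             # 0.5 (second image is a miss)
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # 50 / 150, about 0.333
```

A top-five metric forgives a model for ranking the true label second or third, which is reasonable for images that plausibly contain several of the 1,000 categories.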

GPUs: a Winning Trend

ILSVRC competitors include teams from both industry and academia. In previous years, entries have come from the IBM T.J. Watson Research Center, the University of Tokyo, NYU, Microsoft Research, UC Berkeley, Adobe, Google, Baidu, Oxford University, Virginia Tech, MIT, and UC Irvine, among others.

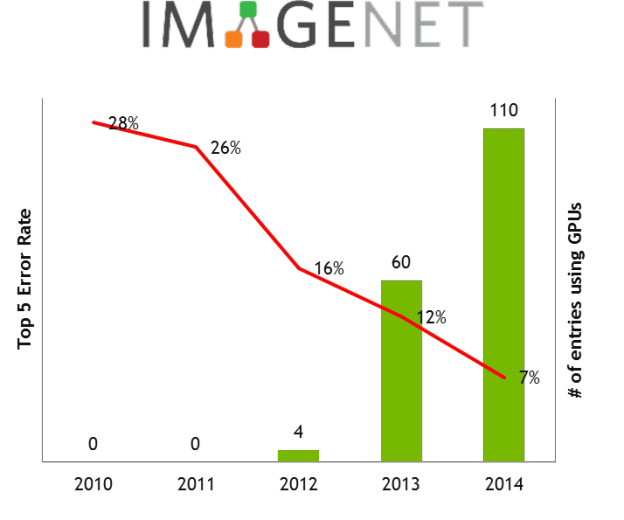

In the inaugural 2010 challenge, NEC Labs America and UIUC won, and Xerox Research Centre Europe received an honorable mention. The winning teams in 2010 and 2011 achieved top-five classification error rates of 0.28 and 0.26 (28% and 26%), respectively. The winning technique in 2010 used large-scale Support Vector Machine (SVM) training, while in 2011 the winning team employed a compressed Fisher vector approach. In 2012, the winning team achieved a top-five error rate of 0.16, substantially lower than its predecessors. That year, the winning University of Toronto team trained a convolutional neural network (CNN) on two GPUs, producing the most accurate classifier to date. The network configuration became known as “AlexNet,” after the team’s lead student, Alex Krizhevsky. AlexNet has had a large impact on deep learning; several deep learning frameworks now include it as an example for users who are just getting started with neural networks.

The University of Toronto’s success in 2012 demonstrated that a Deep Neural Network (DNN) can be effective at classifying many objects and that increasing the amount of training data can improve the DNN’s effectiveness. Successfully developing and training these networks required massive computation and was enabled by GPUs that exploit large-scale parallelism, allowing much faster training cycles than multi-core CPUs alone.

Since 2012, the use of GPUs for training neural networks has become widespread in the ILSVRC, as Figure 2 shows. In 2013 and 2014, teams from Google, Oxford University, the National University of Singapore, the University of Amsterdam with Euvision Technologies, and the startup Clarifai won various tasks in the challenge using GPUs. For more information on the winners, visit the ILSVRC 2014 results page.

NVIDIA and IBM Cloud support ILSVRC 2015

For the past two years, NVIDIA has provided hardware resources to teams in need of GPUs. This year, NVIDIA is excited to announce that we have teamed up with IBM Cloud to provide qualifying competitors with access to the SoftLayer cloud server infrastructure. The servers are outfitted with dual Intel Xeon E5-2690 CPUs, 128 GB of RAM, two 1 TB SATA hard drives in RAID 0, and two NVIDIA Tesla K80s, NVIDIA’s fastest dual-GPU accelerators. Because each K80 contains two GPUs, every server gives teams access to four GPUs, each with 2,496 CUDA cores and 12 GB of memory, a big improvement over previous years. Competitors can therefore perform multiple training runs in parallel while they refine their neural networks, or employ multi-GPU training to get results as quickly as possible.
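
The multi-GPU training mentioned above is commonly done with synchronous data parallelism: each GPU computes gradients on its own slice of a mini-batch, and the gradients are averaged before every weight update. The sketch below simulates that pattern in plain Python for a toy one-parameter linear model. It makes no GPU calls, and the function names and model are hypothetical; real frameworks perform the averaging step with an all-reduce across devices.

```python
from typing import List, Sequence, Tuple

Example = Tuple[float, float]  # (x, y) pair for a toy model y_hat = w * x


def mse_grad(w: float, batch: Sequence[Example]) -> float:
    """Gradient of mean squared error with respect to w over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(batch: Sequence[Example], num_devices: int) -> List[List[Example]]:
    """Split a batch into equal-size shards, one per (simulated) GPU."""
    if len(batch) % num_devices:
        raise ValueError("batch size must be divisible by num_devices")
    size = len(batch) // num_devices
    return [list(batch[i * size:(i + 1) * size]) for i in range(num_devices)]


def data_parallel_step(w: float, batch: Sequence[Example],
                       num_devices: int = 4, lr: float = 0.01) -> float:
    """One synchronous data-parallel SGD step.

    On real hardware each shard's gradient would be computed on its own GPU;
    here the shards are processed one after another. Because the shards are
    equal-sized, averaging their gradients (the "all-reduce" step) yields
    exactly the full-batch gradient.
    """
    grads = [mse_grad(w, s) for s in shard(batch, num_devices)]
    avg = sum(grads) / num_devices
    return w - lr * avg


if __name__ == "__main__":
    data = [(float(x), 3.0 * x) for x in range(1, 9)]  # true weight is 3
    w = 0.0
    for _ in range(200):
        w = data_parallel_step(w, data)
    print(round(w, 3))  # converges to 3.0
```

Because the averaged gradient equals the full-batch gradient, four GPUs can process a batch four times as large per step without changing the mathematics of the update; the speedup comes from computing the shards concurrently.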

Alex Krizhevsky et al. won the 2012 ILSVRC by training their DNN on two NVIDIA GeForce GTX 580 GPUs for five to six days. Each GTX 580 has only 3 GB of memory and 512 CUDA cores, significantly fewer resources than the NVIDIA Tesla K80. In 2014, the Google team trained a 22-layer network within a week using a small number of high-end GPUs. By comparison, each IBM Cloud SoftLayer server is provisioned with two NVIDIA Tesla K80 accelerators (four GPUs), giving this year’s teams enough computational power to develop and train a winning DNN.
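
A back-of-the-envelope comparison, using only the per-GPU figures quoted in this post, shows the gap in aggregate resources between the 2012 setup and one SoftLayer server. Treat it as a rough illustration: CUDA core counts are not directly comparable across GPU generations (the GTX 580 and the K80 use different architectures), so the ratio is not a prediction of training speed. The `GpuSpec` type and `totals` helper are hypothetical names for this example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class GpuSpec:
    name: str
    cuda_cores: int
    memory_gb: int


# Per-GPU figures as quoted in this post.
GTX_580 = GpuSpec("GeForce GTX 580", cuda_cores=512, memory_gb=3)
K80_GPU = GpuSpec("Tesla K80 (one of its two GPUs)", cuda_cores=2496, memory_gb=12)


def totals(spec: GpuSpec, count: int) -> Tuple[int, int]:
    """Aggregate CUDA cores and memory (GB) across `count` identical GPUs."""
    return spec.cuda_cores * count, spec.memory_gb * count


if __name__ == "__main__":
    alexnet_cores, alexnet_mem = totals(GTX_580, 2)  # 2012: two GTX 580s
    server_cores, server_mem = totals(K80_GPU, 4)    # two K80s = four GPUs
    print(alexnet_cores, alexnet_mem)                # 1024 6
    print(server_cores, server_mem)                  # 9984 48
    print(server_cores / alexnet_cores)              # 9.75
    print(server_mem // alexnet_mem)                 # 8
```

Memory is often the tighter constraint for large networks: the AlexNet team split their model across two GPUs partly because a single 3 GB card could not hold it, whereas 12 GB per GPU leaves room for larger models or batches on each device.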

IBM Cloud offers a variety of options for servers, storage, networking, and security management. Its management tools make it easy to access and monitor the hardware in use, and its SoftLayer infrastructure makes it easy to select and customize hardware to suit each team’s specific needs, including the number of processors, the amount of RAM, and disk space. This infrastructure can include NVIDIA’s highest-performing server GPU, the Tesla K80, at a monthly rate, so users pay for GPU-accelerated compute resources only when they need them.

Free Tesla K80 Servers in the Cloud for Your Team

To apply for complimentary access to IBM Cloud servers with NVIDIA Tesla K80s, just visit the ILSVRC 2015 homepage and register your team. ILSVRC teams who meet ImageNet’s qualifications will be given instructions for accessing the hardware once they have been accepted into the competition.

We are eager to find out what the ILSVRC tasks will be this year, as well as which teams will return and what new entrants will join the growing competition.

Learn More about Deep Learning with GPUs

If you are new to deep learning and would like to learn more about using GPUs to accelerate deep neural networks, be sure to register for NVIDIA’s new Deep Learning Courses. You can also read our previous blog posts on DIGITS and cuDNN. DIGITS is an interactive deep learning training system that makes it easy to develop and train deep neural networks on GPUs, and it can be deployed on a workstation or on a cloud platform such as IBM Cloud’s SoftLayer infrastructure.