NVIDIA has made a lot of progress with CUDA over the past five years; we estimate that there are over 150,000 CUDA developers, and important science is being accomplished with the help of CUDA. But we have a long way to go to help everyone benefit from GPU computing. There are many programmers who can’t afford the time to learn and apply a parallel programming language. Others, many of them scientists and engineers working with huge existing code bases, can only make changes to their code that are portable across hardware and OS, and that benefit performance on multiple platforms.

This class of developers needs a higher-level approach to GPU acceleration. They need something that is easy, powerful, portable, and open. This is the motivation behind OpenACC, an open standard defining a collection of compiler directives that specify loops and regions of code in standard C, C++, and Fortran to be offloaded from a host CPU to an attached parallel accelerator, such as an NVIDIA GPU. Directives are simple (typically a single line) hints provided to the compiler. If you are a C or C++ programmer, you are probably familiar with #pragma directives.

EASY: A MORSEL OF OPENACC

Here is a really simple example using OpenACC: a loop that performs a SAXPY operation. SAXPY stands for Single-precision A times X Plus Y. A is a scalar value and X and Y are vectors, so this is a vector scale-and-add operation. First, SAXPY in C with an OpenACC directive to parallelize it.

    void saxpy_parallel(int n,
                        float a,
                        float *x,
                        float *restrict y)
    {
    #pragma acc kernels
      for (int i = 0; i < n; ++i)
        y[i] = a*x[i] + y[i];
    }

Here’s the equivalent in Fortran.

    subroutine saxpy(x,y,n,a)
      real :: a, x(:), y(:)
      integer :: n, i
    !$acc kernels
      do i = 1, n
        y(i) = a*x(i)+y(i)
      enddo
    !$acc end kernels
    end subroutine saxpy

The #pragma line in the C program and the !$acc lines in Fortran are compiler directives: hints for the compiler. In this case, we are simply suggesting that this is a parallel loop, and that the compiler should attempt to generate parallel kernel code for an accelerator (in our case, a GPU). Also notice that we don’t have to do anything else to get this onto the GPU. In contrast to CUDA, we don’t have to allocate or initialize arrays on the device; we don’t have to copy the host (CPU) data to the accelerator (GPU) or copy the accelerator results back to the host after the loop; we don’t have to write a CUDA kernel to execute the body of the loop in parallel; and we don’t have to explicitly launch the kernel on the accelerator. The OpenACC compiler and runtime do all this work for us behind the scenes.
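To make that concrete, here is a minimal sketch of what the calling side might look like. The `run_saxpy_demo` helper is hypothetical (it is not part of the original example); the point is only that the caller works with ordinary host arrays and contains no device allocation, copy, or launch code.

```c
#include <stdlib.h>

/* Same routine as above: the directive is the only accelerator-specific line. */
void saxpy_parallel(int n, float a, float *x, float *restrict y)
{
#pragma acc kernels
    for (int i = 0; i < n; ++i)
        y[i] = a*x[i] + y[i];
}

/* Hypothetical driver, for illustration only. It uses plain host memory:
   no device allocation, no explicit copies, no kernel launch.
   Returns the number of elements that differ from the expected value,
   or -1 if host allocation fails. */
int run_saxpy_demo(int n)
{
    float *x = malloc((size_t)n * sizeof *x);
    float *y = malloc((size_t)n * sizeof *y);
    if (!x || !y) { free(x); free(y); return -1; }

    for (int i = 0; i < n; ++i) { x[i] = 1.0f; y[i] = 3.0f; }

    saxpy_parallel(n, 2.0f, x, y);   /* y = 2*x + y, so each element becomes 5 */

    int mismatches = 0;
    for (int i = 0; i < n; ++i)
        if (y[i] != 5.0f) ++mismatches;

    free(x);
    free(y);
    return mismatches;
}
```

Calling `run_saxpy_demo(1000)` from `main` should return 0 whether the loop ran on the accelerator or on the host.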

POWERFUL

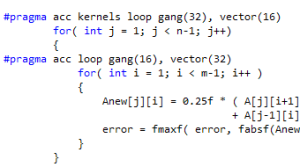

What this simple example does not show is how much control OpenACC gives you. You can start simple, as in the examples above, letting the compiler make decisions about accelerator data allocation and movement and about how it parallelizes loops, but you can also take control and use directive clauses to be explicit about these things. In this way, OpenACC can be both easy and powerful. For loops with highly independent iterations, it can even approach CUDA performance.
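As a rough sketch of what "taking control" can look like, the SAXPY loop could be annotated with explicit data and loop clauses. The function name `saxpy_explicit` is made up for this illustration, and the clause choices are one reasonable option under the OpenACC specification, not necessarily what a tuned version would use.

```c
/* Sketch: the same SAXPY with explicit data and loop clauses.
   copyin(x[0:n])   copy x to the accelerator on entry; it is not copied back.
   copy(y[0:n])     copy y to the accelerator on entry and back to the host on exit.
   loop independent tell the compiler the iterations have no dependences.
   In C, the subarray notation x[0:n] means "start at 0, length n". */
void saxpy_explicit(int n, float a, const float *x, float *restrict y)
{
#pragma acc kernels copyin(x[0:n]) copy(y[0:n])
    {
#pragma acc loop independent
        for (int i = 0; i < n; ++i)
            y[i] = a*x[i] + y[i];
    }
}
```

Here the data clauses replace the compiler's own guesses about which arrays to move and in which direction, and the loop clause replaces its dependence analysis.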

I’ll get into more details in my next post.

PORTABLE

One of the (perhaps surprisingly) great things about compiler directives is that they can be ignored. This means that you can compile the code for a platform that does not support OpenACC and it will work, just as if you had never added the directives. That means that right out of the gate your code is portable between (for example) CPUs and GPUs with no specific work to make it so.
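A small sketch of this, assuming only that a conforming OpenACC compiler defines the `_OPENACC` macro (as the specification requires). The names `built_with_openacc` and `saxpy_portable` are hypothetical; the same source file builds with or without OpenACC support and computes the same result either way.

```c
/* Returns 1 when this file was compiled with OpenACC enabled, 0 otherwise.
   A compiler without OpenACC support leaves _OPENACC undefined and
   ignores the #pragma acc line below. */
int built_with_openacc(void)
{
#ifdef _OPENACC
    return 1;
#else
    return 0;
#endif
}

/* Without OpenACC support this is just an ordinary sequential C loop. */
void saxpy_portable(int n, float a, const float *x, float *restrict y)
{
#pragma acc kernels
    for (int i = 0; i < n; ++i)
        y[i] = a*x[i] + y[i];
}
```

A program could call `built_with_openacc()` once at startup to log which build it is running, without changing any of the numerical code.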

I’m not going to lie to you. Sometimes getting good speedups with OpenACC will require changes to the original code. For example, some data layouts result in access patterns that are not parallel-friendly. It is often wise to represent an array of structures (AoS) as a structure of arrays (SoA). This is not specific to GPUs: SoA usually benefits any parallel processor, including CPUs. This type of code change helps expose parallelism, and therefore it tends to have universal performance benefits. Combine those benefits with the portability of compiler directives, and you start to approach the elusive goal of “performance portability”.
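To illustrate the AoS-to-SoA change, here is a minimal sketch with a made-up three-field point type; the type and function names are hypothetical and chosen only for this example. Both functions scale the x coordinate of every point, but they touch memory very differently.

```c
/* Array of structures: the x fields of consecutive points are separated
   in memory by the y and z fields. */
typedef struct { float x, y, z; } PointAoS;

/* Structure of arrays: each field is stored contiguously in its own array. */
typedef struct { float *x, *y, *z; } PointsSoA;

/* AoS version: successive iterations access memory with a stride of
   sizeof(PointAoS) bytes. */
void scale_x_aos(int n, float a, PointAoS *p)
{
#pragma acc kernels
    for (int i = 0; i < n; ++i)
        p[i].x = a * p[i].x;
}

/* SoA version: pass the contiguous x array (for example, pts.x from a
   PointsSoA); successive iterations access adjacent floats. */
void scale_x_soa(int n, float a, float *restrict x)
{
#pragma acc kernels
    for (int i = 0; i < n; ++i)
        x[i] = a * x[i];
}
```

Both versions compute the same values; the SoA version simply gives parallel hardware unit-stride accesses to work with.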

OPEN: THE ORIGINS OF OPENACC

OpenACC was developed by The Portland Group (PGI), Cray, CAPS, and NVIDIA. PGI, Cray, and CAPS have spent over two years developing and shipping commercial compilers that use directives to enable GPU acceleration as core technology. The small differences between their approaches allowed the formation of a group to standardize a single directives approach for accelerators and CPUs.

As of this writing, the OpenACC specification is in version 1.0, and there are publicly available implementations on the near horizon. You can get started today by using a compiler from one of the vendors listed above. In my next post, I’ll be using the PGI compiler. Today, PGI’s compiler implements a precursor to OpenACC called PGI Accelerator. If you are interested in trying OpenACC, you can download a free trial of PGI Accelerator.

In my next post I’ll dive in with a more interesting code example to demonstrate how OpenACC directives can give you a 3-4x speedup with just a few lines of code.