High-performance stereo head-mounted display (HMD) rendering is a fundamental component of the virtual reality ecosystem. It requires substantial graphics horsepower to deliver high-quality, high-resolution stereo images at a high frame rate.

Today, NVIDIA is releasing VR SLI for OpenGL via a new OpenGL extension called “GL_NVX_linked_gpu_multicast” that can be used to greatly improve the speed of HMD rendering. With this extension, it is possible to control multiple GPUs that are in an NVIDIA SLI group with a single OpenGL context to reduce overhead and improve frame rates.

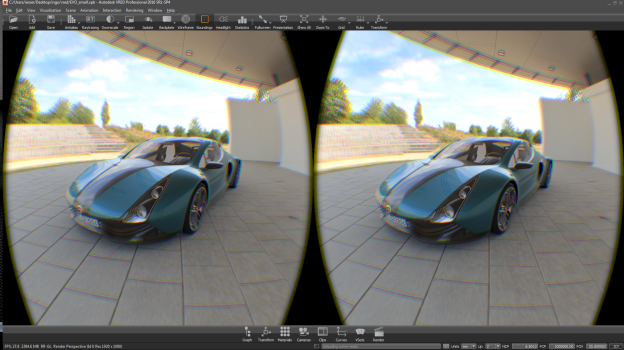

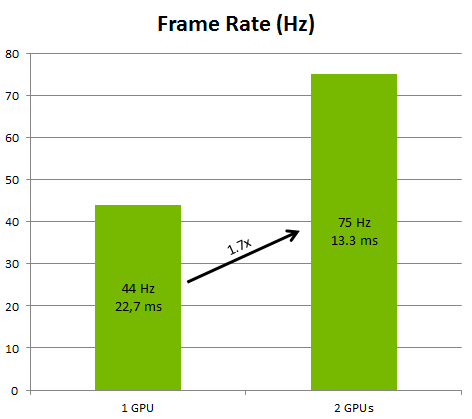

Autodesk VRED has successfully integrated NVIDIA’s new multicast extension into the stereo rendering code it uses for HMDs like the Oculus Rift and the HTC Vive, achieving a 1.7x speedup (see Figure 1). The multicast extension also helps Autodesk VRED render for stereo displays or projectors, where it achieves a speedup of nearly 2x.

The GL_NVX_linked_gpu_multicast extension is available in NVIDIA GeForce and Quadro drivers, version 361.43 and newer. When the driver is in the new multicast mode, all OpenGL operations that are not related to the extension are broadcast to all GPUs in the SLI group: allocations are mirrored, data uploads are broadcast, and rendering commands are executed on all GPUs. This means that, unless the application uses the special functionality of the multicast extension, all GPUs execute the same work on the same data.
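The broadcast rule can be illustrated with a small CPU-only model. This is not driver code and makes no OpenGL calls; the `LinkedGpuGroup` type, its methods, and the `gpuMask` helper are hypothetical names chosen for this sketch. It assumes that bit *n* of a GPU mask selects GPU *n*. An ordinary upload reaches every GPU, while a masked upload (the role `glLGPUNamedBufferSubDataNVX` plays in the samples below) updates only the selected GPUs.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: a mask with only bit `gpu` set (bit n selects GPU n).
inline std::uint32_t gpuMask(unsigned gpu) { return 1u << gpu; }

// Toy model of one buffer object in an SLI group running in multicast mode.
// Every GPU holds its own copy, because the allocation is mirrored.
struct LinkedGpuGroup {
    std::vector<int> bufferPerGpu;

    explicit LinkedGpuGroup(unsigned gpuCount) : bufferPerGpu(gpuCount, 0) {}

    // Ordinary upload: broadcast, so every GPU receives the same value.
    void upload(int value) {
        for (int& copy : bufferPerGpu) copy = value;
    }

    // Masked upload: only GPUs whose bit is set in `mask` are updated.
    void uploadMasked(std::uint32_t mask, int value) {
        assert(bufferPerGpu.size() <= 32);  // a 32-bit mask covers at most 32 GPUs
        for (unsigned gpu = 0; gpu < bufferPerGpu.size(); ++gpu) {
            if (mask & gpuMask(gpu)) bufferPerGpu[gpu] = value;
        }
    }
};
```

For example, `upload(7)` on a two-GPU group leaves both copies at 7, and a following `uploadMasked(gpuMask(1), 9)` changes only GPU 1's copy, so the two GPUs now hold different data in the same buffer.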

For stereo rendering of a frame in VR, the GPU must render the same scene from two different eye positions. A normal application using only one GPU must render these two images sequentially, which means twice the CPU and GPU workload.

With the OpenGL multicast extension, it’s possible to upload the same scene to two different GPUs and render it from two different viewpoints with a single OpenGL rendering stream. This distributes the rendering workload across two GPUs and eliminates the CPU overhead of sending the rendering commands twice, providing a simple way to achieve substantial speedup (see Figure 2).
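The CPU-side saving comes from building the scene's command stream once instead of once per eye. The sketch below is a hypothetical model, not real API usage: `Command`, `recordSequential`, and `recordMulticast` are illustrative names, each recorded entry stands in for one API call, and the model ignores everything except how many calls the application issues for a scene of `drawCount` draw calls.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// One recorded API call in a toy command stream.
struct Command {
    std::string name;
};

// Appends the draw calls for the whole scene once.
void recordScene(std::vector<Command>& stream, std::size_t drawCount) {
    for (std::size_t i = 0; i < drawCount; ++i) stream.push_back({"draw"});
}

// Single GPU: upload the view, bind the target, and record the scene per eye.
std::vector<Command> recordSequential(std::size_t drawCount) {
    std::vector<Command> stream;
    for (int eye = 0; eye < 2; ++eye) {
        stream.push_back({"uploadView"});
        stream.push_back({"bindTarget"});
        recordScene(stream, drawCount);
    }
    return stream;
}

// Multicast: one masked view upload per GPU, the scene recorded once and
// broadcast, then a GPU 1 -> GPU 0 copy bracketed by two interlocks.
std::vector<Command> recordMulticast(std::size_t drawCount) {
    std::vector<Command> stream;
    stream.push_back({"uploadViewGpu0"});
    stream.push_back({"uploadViewGpu1"});
    stream.push_back({"bindTarget"});
    recordScene(stream, drawCount);
    stream.push_back({"interlock"});
    stream.push_back({"copyGpu1ToGpu0"});
    stream.push_back({"interlock"});
    return stream;
}
```

With 1000 draw calls, this model records 2004 commands for the sequential path and 1006 for the multicast path: the second scene traversal disappears, at the cost of a constant handful of extra calls for the second view upload, the interlocks, and the copy.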

Code Samples

The following samples show the differences between a normal application doing sequential stereo rendering and an application doing parallel rendering using the new multicast extension. Code changes are minimized because all “normal” OpenGL operations are mirrored in multicast mode, so the application code for preparing the scene for rendering is unchanged.

The first sample shows how a standard application typically renders a stereo image. First, the scene data for the left eye is uploaded to the GPU (1.) and the first image is rendered (2.). Then the data for the right eye is uploaded (3.) and the second image is rendered (4.). Because the rendering function is called twice, the OpenGL command stream for the scene is generated twice, which doubles the CPU overhead compared with the second sample.

```cpp
// render into left and right texture sequentially

// 1. set & upload left eye for the first image
sceneData.view = /* left eye data */;
glNamedBufferSubDataEXT( ..., &sceneData );
// use left texture as render target
glFramebufferTexture2D( ..., colorTexLeft, ... );
// 2. render into left texture
render( ... );

// 3. set & upload right eye for the second image
sceneData.view = /* right eye data */;
glNamedBufferSubDataEXT( ..., &sceneData );
// use right texture as render target
glFramebufferTexture2D( ..., colorTexRight, ... );
// 4. render into right texture
render( ... );
```

The second sample shows how to generate the same pair of textures using two GPUs. The view data for the left and right eyes is uploaded to GPU 0 and GPU 1, respectively (1.). Because the rest of the scene data is mirrored on both GPUs, the single render call (2.), which is broadcast to both GPUs, produces the left-eye view on GPU 0 and the right-eye view on GPU 1, each in that GPU's instance of the left texture. To work with both images on one GPU afterwards, the right-eye image must be copied from GPU 1 to GPU 0 (3.). The glLGPUInterlockNVX calls before and after the copy synchronize the GPUs so that colorTexRight on GPU 0 is safe to write before the copy and safe to read after it.

```cpp
// render into left texture on both GPUs,
// then copy left tex on GPU 1 to right tex on GPU 0
// (GPUMASK_n is assumed to be an application-defined mask with bit n set)

// 1. set & upload view data for both images
sceneData.view = /* left eye data */;
glLGPUNamedBufferSubDataNVX( GPUMASK_0, ..., &sceneData );
sceneData.view = /* right eye data */;
glLGPUNamedBufferSubDataNVX( GPUMASK_1, ..., &sceneData );

// use left texture as render target (broadcast to both GPUs)
glFramebufferTexture2D( ..., colorTexLeft, ... );
// 2. render into left texture on both GPUs
render( ... );

// make sure colorTexRight is safe to write
glLGPUInterlockNVX();
// 3. copy the left texture on GPU 1 to the right texture on GPU 0
glLGPUCopyImageSubDataNVX(
    1,                                        /* from GPU 1 */
    GPUMASK_0,                                /* to GPU 0 */
    colorTexLeft,  GL_TEXTURE_2D, 0, 0, 0, 0, /* source: name, target, level, x, y, z */
    colorTexRight, GL_TEXTURE_2D, 0, 0, 0, 0, /* destination: name, target, level, x, y, z */
    texWidth, texHeight, 1 );                 /* width, height, depth */
// make sure colorTexRight is safe to read
glLGPUInterlockNVX();
```
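The data flow of the multicast sample can be traced with a CPU-only model in which each GPU owns its own instance of `colorTexLeft` and `colorTexRight`. The `Gpu` struct, `renderBroadcast`, `copyImage`, and `renderStereoMulticast` below are hypothetical stand-ins for the driver's behavior, with strings in place of images. They mirror only steps 1 through 3 of the sample; the interlocks are omitted because the model runs serially.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <string>

// Per-GPU state in the toy model: the uploaded view and both textures.
struct Gpu {
    std::string view;           // this GPU's contents of sceneData.view
    std::string colorTexLeft;   // this GPU's instance of the left texture
    std::string colorTexRight;  // this GPU's instance of the right texture
};

using Group = std::array<Gpu, 2>;

// Step 2: one render call, broadcast to every GPU. Each GPU draws the same
// scene into its own instance of colorTexLeft, using its own view data.
void renderBroadcast(Group& gpus, const std::string& scene) {
    for (Gpu& gpu : gpus) gpu.colorTexLeft = scene + "@" + gpu.view;
}

// Step 3: copy colorTexLeft on srcGpu into colorTexRight on dstGpu.
void copyImage(Group& gpus, std::size_t srcGpu, std::size_t dstGpu) {
    assert(srcGpu < gpus.size() && dstGpu < gpus.size());
    gpus[dstGpu].colorTexRight = gpus[srcGpu].colorTexLeft;
}

// Runs steps 1-3 and returns GPU 0, which ends up holding both eye images.
Gpu renderStereoMulticast(const std::string& scene) {
    Group gpus{};
    gpus[0].view = "left";   // step 1: masked upload to GPU 0
    gpus[1].view = "right";  // step 1: masked upload to GPU 1
    renderBroadcast(gpus, scene);
    copyImage(gpus, 1, 0);
    return gpus[0];
}
```

Calling `renderStereoMulticast("scene")` returns a GPU 0 whose `colorTexLeft` is `"scene@left"` and whose `colorTexRight` is `"scene@right"`, the same pair of images the sequential sample produces on a single GPU.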

At the end of both code samples, colorTexLeft contains the left-eye rendering of the scene and colorTexRight contains the right-eye rendering. The code that uses the images afterwards remains unmodified, because both textures reside on GPU 0, the GPU that would be used in non-multicast mode. As mentioned before, the extension is simple enough that adapting an existing stereo application to multicast mode requires only minimal code changes.

Try VR SLI Today!

The extension “GL_NVX_linked_gpu_multicast” is available starting with the new 361.43 driver, downloadable for both NVIDIA GeForce and Quadro GPUs. The DesignWorks VR and GameWorks VR SDK packages released in parallel with the driver contain more in-depth documentation, the extension text and a basic sample application that showcases the use of the extension. The sample that ships with the VR SDK is also available on GitHub.

We will also be releasing more code samples showing different applications of multicast on the nvpro-samples GitHub repository.