The NVIDIA SMP (simultaneous multi-projection) Assist NVAPI driver extension is a simple method for integrating Multi-Res Shading and Lens-Matched Shading into a VR application. It encapsulates a notable amount of state setup and API calls into a simplified API, thereby substantially reducing the complexity of integrating NVIDIA VRWorks into an application. Specifically, the SMP Assist driver creates and manages fast geometry shaders, viewport state, and scissor state, so the application does not have to manage this state manually.

Simplifying Multi-Resolution and Lens-Matched Shading

In VR headsets, users typically view the display through lenses that introduce pincushion distortion. VR applications render a barrel-distorted image to cancel the pincushion distortion of the lens, which results in a geometrically correct scene in the headset. The peripheral areas of a barrel-distorted image are shrunk significantly. The NVIDIA VRWorks SDK includes features such as Multi-Resolution Shading and Lens-Matched Shading, which let application developers exploit the nonlinearity of a barrel-distorted image and reduce the pixel shading workload required to render a frame while maintaining visual quality.

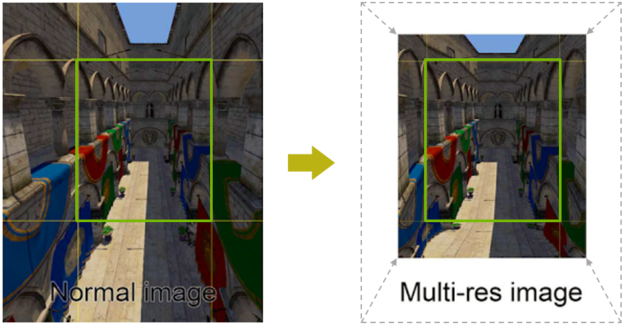

Multi-Resolution Shading (MRS), shown in Figure 1, uses multiple viewports combined with scissor rectangles to create a multi-resolution projection. The resolution inside each viewport stays constant, while the resolution varies from one viewport to the next. The viewports can be arranged so that together they represent one complex, seamless projection of the scene. The center viewport typically has a higher resolution than the peripheral viewports, which better matches the barrel-distorted image and avoids unnecessary pixel shading work.
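To make the arrangement concrete, here is a minimal sketch of how a 3x3 grid of regions could be laid out for a multi-resolution target. It is not the VRWorks implementation: the `MrsLayout` fields, the `computeRegions` helper, and the idea of a single scale for all peripheral rows and columns are assumptions chosen purely for illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical description of a 3x3 multi-resolution layout.
// None of these names come from VRWorks; they only illustrate the idea.
struct MrsLayout {
    int   width;           // full-resolution render target width in pixels
    int   height;          // full-resolution render target height in pixels
    float centerFraction;  // fraction of each axis covered by the center region
    float peripheryScale;  // resolution scale applied to the outer rows/columns
};

struct Region { int x, y, w, h; };

// Splits one axis into side/center/side spans and shrinks the two side spans.
static void splitAxis(int size, float centerFraction, float peripheryScale,
                      int spans[3]) {
    assert(size > 0 && centerFraction > 0.0f && centerFraction <= 1.0f);
    const int center = static_cast<int>(std::lround(size * centerFraction));
    const int left   = (size - center) / 2;
    const int right  = size - center - left;
    spans[0] = static_cast<int>(std::lround(left * peripheryScale));
    spans[1] = center;  // the center keeps its full resolution
    spans[2] = static_cast<int>(std::lround(right * peripheryScale));
}

// Fills the nine packed regions in row-major order and reports the size of
// the reduced render target that holds them.
void computeRegions(const MrsLayout& layout, Region out[9],
                    int& packedW, int& packedH) {
    int cols[3], rows[3];
    splitAxis(layout.width,  layout.centerFraction, layout.peripheryScale, cols);
    splitAxis(layout.height, layout.centerFraction, layout.peripheryScale, rows);

    int y = 0;
    for (int r = 0; r < 3; ++r) {
        int x = 0;
        for (int c = 0; c < 3; ++c) {
            out[r * 3 + c] = Region{ x, y, cols[c], rows[r] };
            x += cols[c];
        }
        y += rows[r];
    }
    packedW = cols[0] + cols[1] + cols[2];
    packedH = rows[0] + rows[1] + rows[2];
}
```

With a 1000x1000 target, a center fraction of 0.6, and a periphery scale of 0.5, this sketch packs the frame into 800x800 pixels, which is 64% of the original pixel count. Real configurations would be tuned per headset.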

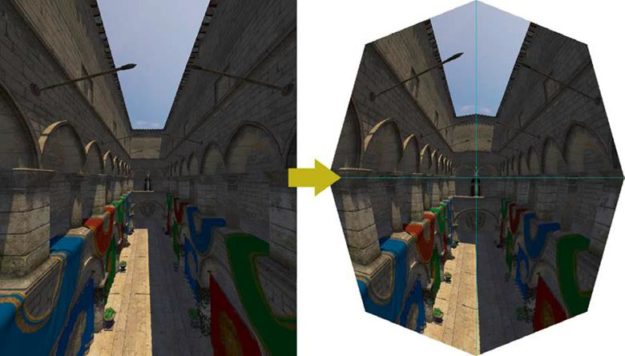

Lens-Matched Shading (LMS) uses multiple viewports combined with scissor rectangles and linear transformations to create a lens-matched projection, as shown in Figure 2. In a lens-matched projection, triangle vertices are modified before rasterization by the following transformation:

w’ = w + Ax + By

Conceptually, this modifies the “w” coordinate of each vertex in clip space before the perspective divide. The clip-space “w” coordinate is offset as a function of the “x” and “y” coordinates, which pulls vertices toward the center of the viewport; the larger their “x” and “y” coordinates, the stronger the pull. Carefully selecting coefficients A and B for each of the four quadrants of the frame produces a warped image that resembles barrel distortion.
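The effect of the transformation can be illustrated with a small CPU-side sketch. The `LmsCoeffs` structure, its field names, and the rule for choosing the sign of A and B per quadrant are assumptions made for this example (the sign is picked so that the added term is never negative); they are not taken from the VRWorks headers.

```cpp
#include <cassert>
#include <cmath>

struct Vec4 { float x, y, z, w; };

// Hypothetical per-direction warp strengths; the names are illustrative only.
struct LmsCoeffs { float warpLeft, warpRight, warpUp, warpDown; };

// Applies w' = w + A*x + B*y. A and B are selected by quadrant so that the
// added term A*x + B*y is non-negative (an assumption of this sketch).
Vec4 applyLensMatchedW(const Vec4& v, const LmsCoeffs& c) {
    const float A = (v.x < 0.0f) ? -c.warpLeft : c.warpRight;
    const float B = (v.y < 0.0f) ? -c.warpDown : c.warpUp;
    Vec4 out = v;
    out.w = v.w + A * v.x + B * v.y;
    return out;
}

// Horizontal position after the perspective divide.
float ndcX(const Vec4& v) {
    assert(v.w != 0.0f);
    return v.x / v.w;
}
```

With all four strengths set to 0.5, a vertex at the right edge, (1, 0, 0, 1), ends up with w' = 1.5 and lands at x = 2/3 after the divide, while a vertex at the center keeps w' = 1 and does not move. The periphery is compressed and the center is left untouched, which is the behavior the paragraph above describes.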

Each of these features requires the application to compute multiple viewports and scissors based on the desired effect. Additionally, for Lens-Matched Shading, the application has to specify coefficients (A and B in the equation above). The application must also ensure that the scene geometry renders correctly by setting a Fast Geometry Shader (FastGS) in the graphics pipeline. This shader culls triangles and broadcasts them to the correct viewports by computing the viewport mask.

The new NVIDIA SMP Assist API implements all these steps in the driver and exposes a simplified API to the application, enabling easier integration of MRS and LMS into an application or a game engine.

NVIDIA SMP Assist API

The SMP Assist API, currently available for DirectX 11 applications, provides an interface for the application to specify the MRS/LMS configuration used by the VR application. Based on this configuration, the driver performs the following tasks internally:

1. Compute viewports and scissors.

2. Create a FastGS that culls and projects primitives into the correct viewport, along with the matching constant buffer data required by shaders that need to transform between projected and unprojected coordinate spaces.

3. Compute Lens-Matched Shading coefficients, if applicable.

The driver computes and maintains the above state for the supported rendering modes: Mono, Stereo, and Instanced Stereo. The computations for the Stereo and Instanced Stereo modes assume that the application renders in a side-by-side configuration, that is, with both eye views rendered to a single render target.

The application can choose an assistance level based on the desired tradeoff between ease of implementation and flexibility:

- Full: The application specifies the configuration by selecting one from a list of pre-baked configurations that are fine-tuned for currently available VR HMDs.

- Partial: The application specifies the configuration by providing the individual configuration parameters, which can be useful to support new or pre-production VR HMDs.

- Minimal: The application provides the viewports and scissors, and the API applies them correctly, while the application remains responsible for setting a FastGS, GS constant buffer, and LMS coefficients.

Using SMP Assist is straightforward. The application calls into the API whenever the MRS/LMS projection configuration changes, which should happen at most once per frame. The application then enables SMP Assist for those parts of the frame that require the MRS/LMS projection. On subsequent draw calls, the driver selects and sets the appropriate version of the state described in the first three items of the list above, overriding any viewports and scissors set by the application. When the application disables SMP Assist, the driver restores any state it overrode, and the following draw calls use the state set via the regular DirectX API.

The API also provides functionality to get the internally computed data. This data could be useful for upscaling the image rendered via MRS/LMS.

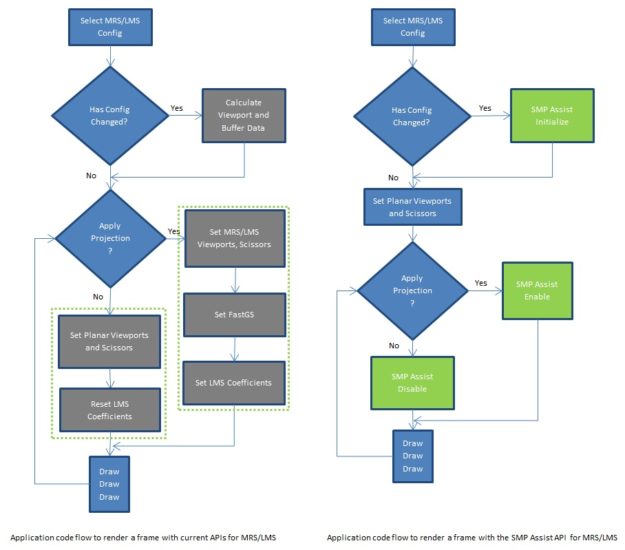

Figure 3 highlights the tasks that applications currently perform themselves and that the SMP Assist API internalizes.

Using the SMP Assist API

Initializing SMP Assist

You initialize the SMP Assist API by querying for platform support for the desired SMP feature (MRS/LMS), obtaining a pointer to the SMP Assist interface, and using this interface pointer to specify the desired configuration (render target size and so on).

NV_QUERY_SMP_ASSIST_SUPPORT_PARAMS QuerySMPAssist = { 0 };

QuerySMPAssist.version = NV_QUERY_SMP_ASSIST_SUPPORT_PARAMS_VER;

QuerySMPAssist.eSMPAssistLevel = NV_SMP_ASSIST_LEVEL_FULL;

QuerySMPAssist.eSMPAssistType = NV_SMP_ASSIST_LMS;

NvAPI_Status NvStatus = NvAPI_D3D_QuerySMPAssistSupport(pD3DDevice, &QuerySMPAssist);

if (NvStatus != NVAPI_OK || QuerySMPAssist.bSMPAssistSupported == false)

{

// Query failed

}

NV_SMP_ASSIST_INITIALIZE_PARAMS initParams = { 0 };

ID3DNvSMPAssist* pD3DNvSMPAssist = NULL;

initParams.version = NV_SMP_ASSIST_INITIALIZE_PARAMS_VER;

initParams.eSMPAssistType = NV_SMP_ASSIST_LMS;

initParams.eSMPAssistLevel = NV_SMP_ASSIST_LEVEL_FULL;

initParams.flags = NV_SMP_ASSIST_FLAGS_DEFAULT;

initParams.ppD3DNvSMPAssist = &pD3DNvSMPAssist;

NvStatus = NvAPI_D3D_InitializeSMPAssist(pD3DDevice, &initParams);

if (NvStatus != NVAPI_OK || pD3DNvSMPAssist == NULL)

{

// NvAPI_D3D_InitializeSMPAssist failed

}

NV_SMP_ASSIST_SETUP_PARAMS setupParams = {0};

setupParams.version = NV_SMP_ASSIST_SETUP_PARAMS_VER;

setupParams.eLMSConfig = NV_LMS_CONFIG_OCULUSRIFT_CV1_BALANCED;

setupParams.resolutionScale = 1.0f;

setupParams.boundingBox.TopLeftX = 0;

setupParams.boundingBox.TopLeftY = 0;

setupParams.boundingBox.Width = rt_width;

setupParams.boundingBox.Height = rt_height;

setupParams.boundingBox.MinDepth = 0.0;

setupParams.boundingBox.MaxDepth = 1.0;

NvStatus = pD3DNvSMPAssist->SetupProjections(pD3DDevice, &setupParams);

The SMP Assist interface object returned by NvAPI_D3D_InitializeSMPAssist is a singleton. Any subsequent calls to NvAPI_D3D_InitializeSMPAssist return a pointer to the same object. The object is created by the driver in response to the first NvAPI_D3D_InitializeSMPAssist call by the application. The driver destroys the object when the application exits. The application should not attempt to destroy the object.

Rendering With SMP Assist

While rendering a frame, the application indicates whether the SMP feature should be enabled or disabled for upcoming draw calls. The API then sets the render state (viewports, scissors, FastGS, LMS coefficients) accordingly, and that state applies to all subsequent draw calls:

NV_SMP_ASSIST_ENABLE_PARAMS enableParams = { NV_SMP_ASSIST_ENABLE_PARAMS_VER, NV_SMP_ASSIST_EYE_INDEX_MONO};

NV_SMP_ASSIST_DISABLE_PARAMS disableParams = { NV_SMP_ASSIST_DISABLE_PARAMS_VER};

while(…)

{

…

NvStatus = pD3DNvSMPAssist->Enable(pD3DContext, &enableParams);

Draw(); // Projection applied for all draw calls after Enable

Draw();

…

NvStatus = pD3DNvSMPAssist->Disable(pD3DContext, &disableParams);

Draw(); // Projection is not applied for any draw calls after Disable

}
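Because every Enable needs a matching Disable, one option is to wrap the pair in a small scope guard. The sketch below is not part of NVAPI. It is written as a template so that it compiles without the NVAPI headers, and `ScopedSmpAssist` and the `Mock*` types are hypothetical names used only to exercise it. It assumes only the two-argument call shapes of Enable and Disable shown above, and it ignores their return values, which a real integration would want to check.

```cpp
#include <cassert>

// Calls Enable on construction and Disable on destruction. The template
// parameters stand in for ID3DNvSMPAssist, ID3D11DeviceContext, and the
// enable/disable parameter structs (assumption: any type with matching
// Enable/Disable member functions works).
template <typename Assist, typename Context,
          typename EnableParams, typename DisableParams>
class ScopedSmpAssist {
public:
    ScopedSmpAssist(Assist* assist, Context* context,
                    EnableParams* enableParams, DisableParams* disableParams)
        : m_assist(assist), m_context(context), m_disableParams(disableParams) {
        assert(assist != nullptr);
        // Status is ignored in this sketch; real code should inspect it.
        (void)m_assist->Enable(m_context, enableParams);
    }

    ~ScopedSmpAssist() {
        (void)m_assist->Disable(m_context, m_disableParams);
    }

    // Non-copyable so that Disable runs exactly once per Enable.
    ScopedSmpAssist(const ScopedSmpAssist&) = delete;
    ScopedSmpAssist& operator=(const ScopedSmpAssist&) = delete;

private:
    Assist*        m_assist;
    Context*       m_context;
    DisableParams* m_disableParams;
};

// Hypothetical stand-ins used only to demonstrate the guard.
struct MockContext {};
struct MockParams {};
struct MockAssist {
    int enableCalls  = 0;
    int disableCalls = 0;
    int Enable(MockContext*, MockParams*)  { ++enableCalls;  return 0; }
    int Disable(MockContext*, MockParams*) { ++disableCalls; return 0; }
};
```

Draw calls issued while the guard is alive would use the MRS/LMS projection, and leaving the scope restores the application's own state even on an early return.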

Getting SMP Assist Data

Applications call ID3DNvSMPAssist::GetConstants to read the data computed by the API during initialization. This includes viewports/scissors for the specified VR rendering mode, FastGS constant buffer data, projection size, and constant buffer data that could be used for the flattening pass among other things.

NV_SMP_ASSIST_GET_CONSTANTS smpAssistConstants = { 0 };

D3D11_VIEWPORT viewports[NV_SMP_ASSIST_MAX_VIEWPORTS];

D3D11_RECT scissors[NV_SMP_ASSIST_MAX_VIEWPORTS];

memset(viewports, 0, NV_SMP_ASSIST_MAX_VIEWPORTS * sizeof(D3D11_VIEWPORT));

memset(scissors, 0, NV_SMP_ASSIST_MAX_VIEWPORTS * sizeof(D3D11_RECT));

NV_SMP_ASSIST_FASTGSCBDATA fastGSCBData = { 0 };

NV_SMP_ASSIST_REMAPCBDATA remapCBData = { 0 };

smpAssistConstants.version = NV_SMP_ASSIST_GET_CONSTANTS_VER;

smpAssistConstants.pViewports = viewports;

smpAssistConstants.pScissors = scissors;

smpAssistConstants.eEyeIndex = NV_SMP_ASSIST_EYE_INDEX_MONO;

smpAssistConstants.pFastGSCBData = &fastGSCBData;

smpAssistConstants.pRemapCBData = &remapCBData;

NvStatus = pD3DNvSMPAssist->GetConstants(&smpAssistConstants);

Instanced Stereo Considerations

Providing Eye Index

The FastGS set by the driver needs to know whether the current shader instance is rendering the left or the right eye when the application renders in instanced stereo mode.

The VR application must ensure that the world space shaders (Vertex/Hull/Domain) pass the current eye view (eyeIndex) correctly to the FastGS. The application should also create the last world space shader (Vertex/Domain) via NvAPI_D3D11_CreateVertexShaderEx / NvAPI_D3D11_CreateDomainShaderEx and indicate that the eye index variable is an NvAPI_Packed_Eye_Index custom semantic. SMP Assist requires the eye index variable to be a scalar.

NvAPI_D3D11_CREATE_VERTEX_SHADER_EX CreateVSExArgs = { 0 };

CreateVSExArgs.version = NVAPI_D3D11_CREATEVERTEXSHADEREX_VERSION;

CreateVSExArgs.UseWithFastGS = true;

CreateVSExArgs.UseSpecificShaderExt = false;

CreateVSExArgs.NumCustomSemantics = 1;

CreateVSExArgs.pCustomSemantics = (NV_CUSTOM_SEMANTIC*)malloc((sizeof(NV_CUSTOM_SEMANTIC))* CreateVSExArgs.NumCustomSemantics);

memset(CreateVSExArgs.pCustomSemantics, 0, (sizeof(NV_CUSTOM_SEMANTIC))* CreateVSExArgs.NumCustomSemantics);

CreateVSExArgs.pCustomSemantics[0].version = NV_CUSTOM_SEMANTIC_VERSION;

CreateVSExArgs.pCustomSemantics[0].NVCustomSemanticType = NV_PACKED_EYE_INDEX_SEMANTIC;

strcpy_s(&(CreateVSExArgs.pCustomSemantics[0].NVCustomSemanticNameString[0]), NVAPI_LONG_STRING_MAX, "EYE_INDEX");

NvStatus = NvAPI_D3D11_CreateVertexShaderEx(pD3DDevice, pVSBlob->GetBufferPointer(), pVSBlob->GetBufferSize(), NULL, &CreateVSExArgs, &pVertexShaderInstancedStereo);

The shader's HLSL file must declare the eye index output with the same semantic name:

struct VS_OUTPUT

{

…

uint eyeIndex : EYE_INDEX;

};

Set the LSB of eyeIndex to 0 if you want FastGS to render the current instance to the left eye. Otherwise, FastGS will render to the right eye.

Avoiding Shader Modification

Instanced stereo rendering involves shifting vertices according to the eye view being rendered in the current shader instance. However, LMS requires that the application not perform such vertex shifting. This means the application might have to modify the vertex shader to ensure that the vertices are not shifted when LMS is in use.

SMP Assist provides an API, UpdateInstancedStereoData, which can be used to undo shifting of vertices performed by the application’s shader code. The application need not modify the shader code for the LMS specific requirement described above. The application simply has to provide the correct coefficients to this API. These coefficients will be used to implement the equation to reverse the vertex shifting done by the application’s shader code.

Consider the following vertex shader snippet:

VSOutput main(

in Vertex i_vtx,

in uint i_instance : SV_InstanceID,

… )

{...

#if STEREO_MODE == STEREO_MODE_INSTANCED

uint eyeIndex = i_instance & 1;

output.eyeIndex = eyeIndex;

output.posClip = mul(pos, eyeIndex == 0 ? g_matWorldToClip : g_matWorldToClipR);

// Move geometry to the correct eye

output.posClip.x *= 0.5;

float eyeOffsetScale = eyeIndex == 0 ? -0.5 : 0.5;

output.posClip.x += eyeOffsetScale * output.posClip.w;

...

}

The equation to reverse the vertex shifting done in the shader above would be:

output.posClip.x = (output.posClip.x - eyeOffsetScale * output.posClip.w ) / 0.5 = 2.0 * output.posClip.x + (-eyeOffsetScale * 2.0) * output.posClip.w

Left Eye:

output.posClip.x = 2.0 * output.posClip.x + 1.0 * output.posClip.w = dotproduct( output.posClip, float4(2.0, 0.0, 0.0, 1.0))

Right Eye:

output.posClip.x = 2.0 * output.posClip.x - 1.0 * output.posClip.w = dotproduct(output.posClip, float4(2.0, 0.0, 0.0, -1.0))

The equations for the x component of the left and right eye views can each be viewed as a dot product between the vertex position and a four-component coefficient vector.
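The derivation can be checked numerically with a short CPU-side sketch that mirrors the shader's shift and then reverses it with the dot-product coefficients. The `Float4`, `shiftToEye`, and `undoShiftX` names are hypothetical helpers written for this check; only the arithmetic comes from the shader snippet and equations above.

```cpp
#include <cassert>
#include <cmath>

struct Float4 { float x, y, z, w; };

float dot4(const Float4& a, const Float4& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w;
}

// Mirrors the shader snippet: squeeze x into half the target width, then
// shift it toward the left (eyeIndex 0) or right (eyeIndex 1) half.
Float4 shiftToEye(Float4 posClip, unsigned eyeIndex) {
    assert(eyeIndex <= 1u);
    posClip.x *= 0.5f;
    const float eyeOffsetScale = (eyeIndex == 0u) ? -0.5f : 0.5f;
    posClip.x += eyeOffsetScale * posClip.w;
    return posClip;
}

// Coefficients derived above for undoing the shift.
const Float4 kLeftCoeffs  = { 2.0f, 0.0f, 0.0f,  1.0f };
const Float4 kRightCoeffs = { 2.0f, 0.0f, 0.0f, -1.0f };

// Recovers the unshifted x component as a single dot product.
float undoShiftX(const Float4& shifted, const Float4& coeffs) {
    return dot4(shifted, coeffs);
}
```

For a clip-space position of (0.3, -0.2, 0.5, 2.0), the left-eye shift produces x = -0.85 and the right-eye shift produces x = 1.15. Applying the respective coefficient vectors returns x = 0.3 in both cases, so the two vectors do invert this particular shader's shift. A shader that shifts vertices differently would need its own coefficients.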

The equation implemented by SMP Assist takes in 5 values per eye-view: 4 coefficients for the dot product with the vertex position and a 5th value to add to the result of the dot product.

NV_SMP_ASSIST_UPDATE_INSTANCEDSTEREO_DATA_PARAMS instancedStereoParams = { 0 };

instancedStereoParams.version = NV_SMP_ASSIST_UPDATE_INSTANCEDSTEREO_DATA_PARAMS_VER;

instancedStereoParams.eSMPAssistType = NV_SMP_ASSIST_LMS;

// Left eye: pos.x = dotproduct(pos, leftCoeffs) + leftConst

instancedStereoParams.leftCoeffs[0] = 2.0f;

instancedStereoParams.leftCoeffs[1] = 0.0f;

instancedStereoParams.leftCoeffs[2] = 0.0f;

instancedStereoParams.leftCoeffs[3] = 1.0f;

instancedStereoParams.leftConst = 0.0f;

// Right eye: pos.x = dotproduct(pos, rightCoeffs) + rightConst

instancedStereoParams.rightCoeffs[0] = 2.0f;

instancedStereoParams.rightCoeffs[1] = 0.0f;

instancedStereoParams.rightCoeffs[2] = 0.0f;

instancedStereoParams.rightCoeffs[3] = -1.0f;

instancedStereoParams.rightConst = 0.0f;

NvStatus = pD3DNvSMPAssist->UpdateInstancedStereoData(pD3DDevice, &instancedStereoParams);

The application should call UpdateInstancedStereoData after SMP Assist has been successfully initialized.

Easier VR Programming

The SMP Assist API built into NVIDIA drivers enables developers to more easily implement Multi-Resolution Shading and Lens-Matched Shading, key features that make virtual reality more accessible and user-friendly. The SMP Assist API requires NVIDIA graphics driver version 397.31 or later. If you already have an NVIDIA Developer account, the latest VRWorks Graphics SDK 2.6 release includes a sample application and a programming guide that walk through SMP Assist. If you’re not currently an NVIDIA developer and want to check out VRWorks, signing up is easy: just click the “join” button at the top of the main NVIDIA Developer page.