The sheer scale of the smart city boggles the mind. Tens of billions of sensors will be deployed worldwide, used to make every street, highway, park, airport, parking lot, and building more efficient. This translates to better designs of our roadways to reduce congestion, stronger understanding of consumer behavior in retail environments, and the ability to quickly find lost children to keep our cities safe. Video represents one of the richest sensors used, generating massive streams of data that need analysis. NVIDIA DeepStream 2.0 enables developers to rapidly and simply create video analytics applications.

Humans currently process only a fraction of the captured video. Traditional methods are far less reliable than human interpretation. Intelligent video analytics solves this challenge by using deep learning to understand video with impressive accuracy in real time.

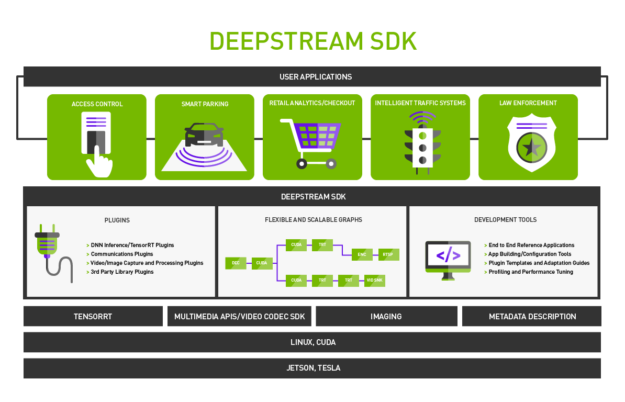

NVIDIA has released the DeepStream Software Development Kit (SDK) 2.0 for Tesla to address the most challenging smart city problems. DeepStream is a key part of the NVIDIA Metropolis platform. The technology enables developers to design and deploy scalable AI applications for intelligent video analytics (IVA). Close to 100 NVIDIA Metropolis partners are already providing products and applications that use deep learning on GPUs.

The DeepStream SDK 2.0 lets developers create flexible and scalable AI-based solutions for smart cities and video analytics. It includes TensorRT™ and CUDA® to incorporate the latest AI techniques and accelerate video analytics workloads.

DeepStream 2.0 gives developers tools such as:

- Parallel Multi-Stream Processing: Create high-stream density applications with deep learning and accelerated multimedia image processing to build solutions at scale.

- Heterogeneous Concurrent Neural Network Architecture: Leverage multiple neural networks to process each video stream, giving developers power and flexibility to bring different deep learning techniques for more intelligent insights.

- Configurable Processing Pipelines: Easily create a flexible and intuitive graph-based application, resulting in highly optimized pipelines delivering maximum throughput.

This release also includes reference plugins, applications, pretrained neural networks and a reference framework.

DeepStream SDK 2.0 Workflow

The DeepStream SDK is based on the open source GStreamer multimedia framework. The plugin architecture provides functionality such as video encode/decode, scaling, inferencing, and more. Plugins can be linked to create an application pipeline. Application developers can take advantage of the reference accelerated plugins for NVIDIA platforms provided as part of this SDK.

The DeepStream SDK includes a reference application, reference ResNet neural networks, and test streams. Table 1 shows a few of the plugins accelerated by NVIDIA hardware.

| Plugin Name | Functionality |
| --- | --- |
| gst-nvvideocodecs | Accelerated H265 & H264 video decoders |
| gst-nvstreammux | Stream muxer and batching |
| gst-nvinfer | TensorRT based inference for detection & classification |
| gst-nvtracker | Reference KLT tracker implementation |
| gst-nvosd | API to draw boxes and text overlay |
| gst-tiler | Renders frames from multi-source into 2D array |
| gst-eglglessink | Accelerated X11 / EGL based renderer plugin |
| gst-nvvidconv | Scaling, format conversion, rotation |

Table 1. NVIDIA-accelerated plugins

DeepStream SDK

The DeepStream SDK consists of a set of software building blocks that sit between low-level APIs, such as TensorRT and the Video Codec SDK, and the user application, as shown in Figure 1.

DeepStream Application

A DeepStream application is a set of modular plugins connected in a graph. Each plugin represents a functional block like inference using TensorRT or multi-stream decode. Where applicable, plugins are accelerated using the underlying hardware to deliver maximum performance. Each plugin can be instantiated multiple times in the application as required.
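To make the graph idea concrete, here is a minimal Python sketch that models plugins as linked nodes and renders a linear chain in the `a ! b ! c` notation used by GStreamer launch descriptions. The `Plugin` class and `linear_description` helper are hypothetical names invented for this illustration. The element names are the ones mentioned in this post, and the resulting string is not claimed to be a launchable pipeline as written.

```python
from dataclasses import dataclass, field


@dataclass
class Plugin:
    """Hypothetical stand-in for one functional block in the graph."""
    name: str  # element name as mentioned in this post
    role: str  # what this instance does in the application
    downstream: list = field(default_factory=list)

    def link(self, other):
        """Connect this plugin to the next one and return it for chaining."""
        self.downstream.append(other)
        return other


def linear_description(head):
    """Walk a linear chain and render it as 'a ! b ! c'."""
    names = []
    node = head
    while node is not None:
        names.append(node.name)
        node = node.downstream[0] if node.downstream else None
    return " ! ".join(names)


# The same plugin type (nvinfer) is instantiated twice with different roles.
mux = Plugin("nvstreammux", "batch frames from several sources")
primary = Plugin("nvinfer", "primary object detection")
tracker = Plugin("nvtracker", "assign IDs and track objects")
secondary = Plugin("nvinfer", "secondary classification")
osd = Plugin("nvosd", "draw boxes and text")

mux.link(primary).link(tracker).link(secondary).link(osd)
print(linear_description(mux))
```

Note that `nvinfer` appears twice in the chain, which mirrors the point that a plugin can be instantiated as many times as the application needs.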

Understanding Configuration Files

DeepStream includes a number of highly configurable plugins that enable a high degree of application customization. Developers can alter plugin parameters to suit their use case and application. Configuration files are simple text files that are included as part of the SDK. Let’s take a look at one example of a configuration file below:

DeepStream 2.0 reference config file: source4_720p_resnet_dec_infer_tracker_sgie_tiled_display_int8.txt.

The configuration file structure and parameters are defined in the DeepStream User Guide. The snippets below illustrate a few key parameters and their usage.

For example, to set up the reference application for performance measurements, configure the following parameters:

    enable-perf-measurement=1 // To enable performance measurement
    perf-measurement-interval-sec=10 // Sampling interval in seconds for performance metrics
    flow-original-resolution=1 // Stream muxer flows original input frames in pipeline
    #gie-kitti-output-dir=/home/ubuntu/kitti_data/ // Location of KITTI metadata files

DeepStream supports multiple input sources such as cameras and files. This configuration file allows you to select the input sources and then stream the video to the application.

Example Input Source Configuration:

    [source0]
    enable=1 // Enables source0 input
    #Type - 1=CameraV4L2 2=URI 3=MultiURI
    // 1) Input source can be USB Camera (V4L2)
    // 2) URI to the encoded stream. Can be a file, HTTP URI or an RTSP live source
    // 3) Select URL from multi-source input
    type=3 // Type of input source is selected
    uri=file://../../streams/sample_720p.mp4 // Actual path of the encoded source.
    num-sources=1 // Number of input sources.
    gpu-id=0 // GPU ID on which the pipeline runs within a single system
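As an illustration of how such a file could be inspected outside the application, the following Python sketch reads a source group with the standard library `configparser` module. It assumes the file contains only `key=value` lines and `#` comments, so the `//` annotations used in this post are left out of the sample text. The `enabled_sources` helper and the returned dictionary keys are hypothetical names. This is not how the reference application parses its configuration.

```python
import configparser

# Mapping taken from the "#Type" comment in the sample configuration.
SOURCE_TYPES = {1: "CameraV4L2", 2: "URI", 3: "MultiURI"}

# Sample text without the explanatory "//" annotations.
SAMPLE_CONFIG = """\
[source0]
enable=1
#Type - 1=CameraV4L2 2=URI 3=MultiURI
type=3
uri=file://../../streams/sample_720p.mp4
num-sources=1
gpu-id=0
"""


def enabled_sources(config_text):
    """Return one dict per enabled [sourceN] group found in the text."""
    # Only "=" separates keys from values, so the ":" in the URI is preserved.
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.read_string(config_text)
    sources = []
    for section in parser.sections():
        if not section.startswith("source"):
            continue
        if parser.getint(section, "enable", fallback=0) != 1:
            continue
        sources.append({
            "name": section,
            "type": SOURCE_TYPES[parser.getint(section, "type")],
            "uri": parser.get(section, "uri", fallback=None),
            "num_sources": parser.getint(section, "num-sources", fallback=1),
            "gpu_id": parser.getint(section, "gpu-id", fallback=0),
        })
    return sources


for source in enabled_sources(SAMPLE_CONFIG):
    print(source["name"], source["type"], source["uri"])
```

A check like this can catch a disabled or mistyped source group before the pipeline is launched.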

Data Flow

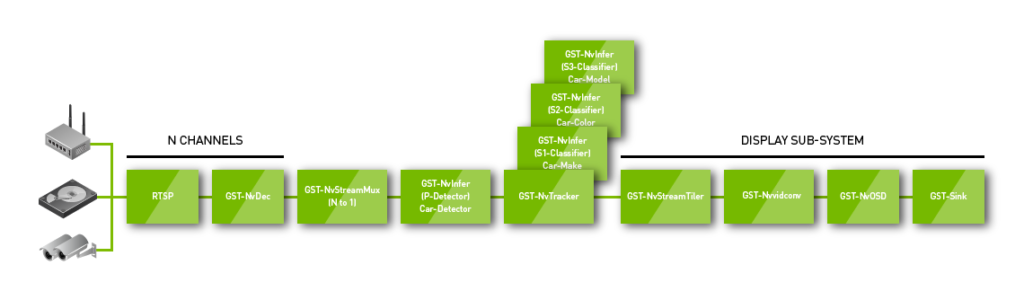

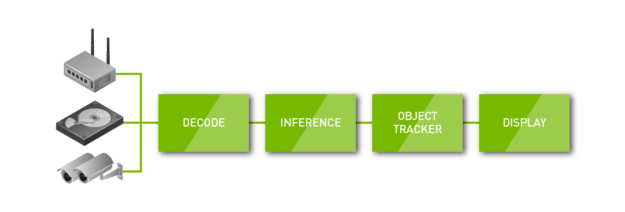

DeepStream ships with a reference application that demonstrates intelligent video analytics for multiple camera streams in real time. The reference application accepts input from various types of sources, such as cameras, RTSP streams, and files on disk. It can accept raw or encoded video data from multiple sources simultaneously. The video aggregator plugin (nvstreammux) forms a batch of buffers from these input sources. The TensorRT-based plugin (nvinfer) then detects primary objects in this batch of frames. The KLT-based tracker element (nvtracker) generates a unique ID for each object and tracks it.

The same nvinfer plugin works with secondary networks for secondary object detection or attribute classification of primary objects. See Figure 2 for the reference application.

The Tiler plugin (nvmultistreamtiler) composites this batch into a single frame as a 2D array. The DeepStream OSD plugin (nvosd) draws shaded boxes, rectangles, and text on the composited frame using the generated metadata as you can see in Figure 3.
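The compositing step can be pictured with a small Python sketch that places N sources on a near-square grid and computes the rectangle each one occupies in the output frame. The grid rule (columns equal to the ceiling of the square root of the source count) is an assumption made for illustration, and `tile_grid` and `tile_rect` are hypothetical helpers. The actual layout logic of the tiler plugin is not described here.

```python
import math


def tile_grid(num_sources):
    """Pick a near-square (rows, cols) grid that fits all sources."""
    cols = math.ceil(math.sqrt(num_sources))
    rows = math.ceil(num_sources / cols)
    return rows, cols


def tile_rect(index, num_sources, out_width, out_height):
    """Return (x, y, width, height) of the tile for one source index."""
    rows, cols = tile_grid(num_sources)
    tile_w = out_width // cols
    tile_h = out_height // rows
    row, col = divmod(index, cols)  # fill the grid row by row
    return (col * tile_w, row * tile_h, tile_w, tile_h)


# Four 720p sources composited into one 1280x720 output frame.
for i in range(4):
    print(i, tile_rect(i, 4, 1280, 720))
```

With this rule, 25 sources land on a 5x5 grid, and bounding boxes from each source would need to be scaled and offset by the tile rectangle before being drawn on the composited frame.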

Metadata contains information generated by all the plugins in the graph. Each plugin adds incremental information to the metadata. The NvDsMeta structure is used by all the components in the application to represent object related metadata. Refer to gstnvivameta_api.h and the associated documentation for DeepStream metadata specifications.

Building a Custom Plugin

The DeepStream SDK provides a template plugin that can be used for implementing custom libraries, algorithms, and neural networks so that they plug into an application graph seamlessly. The sources for this template are located in the sources directory, in gst-dsexample_sources.

Sample Plugin: gst-dsexample

As part of the template plugin, a static library, dsexample_lib, is provided for interfacing with custom IP. The library generates string labels to show how a library's output integrates with the DeepStream SDK metadata format. The library implements four functions:

- DsExampleCtx_Init -> Initialize the custom library

- DsExampleCtx_Deinit -> De-initialize the custom library

- DsExample_QueueInput -> Queue input frames / object crops for processing

- DsExample_DequeueOutput -> Dequeue processed output

The plugin does not generate any new buffers. It only adds / updates existing metadata, making it an in-place transform.

Other functions callable in the plugin include:

- start: Acquire resources, allocate memory, initialize example library.

- stop: De-initialize example library, free up resources and memory.

- set_caps: Get the capabilities of the video (i.e. resolution, color format, frame rate) that will be flowing through this element. Allocations / initializations that depend on input video format can be done here.

- transform_ip: Called when the upstream element pushes a buffer. Find the metadata of the primary detector. Use get_converted_mat to get the required buffer for pushing to the library. Push the data to the example library. Pop the example library output. Attach / update metadata using attach_metadata_full_frame or attach_metadata_object.

- get_converted_mat_dgpu: Scale/convert/crop the input buffer, either the full frame or the object based on its coordinates in primary detector metadata.

- free_iva_meta: Called by GStreamer framework when the metadata attached by this element should be destroyed.

- attach_metadata_full_frame: Shows how the plugin can attach its own metadata.

- attach_metadata_object: Shows how the plugin can update labels for objects detected by primary detector.
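The call order described above can be sketched in Python as follows. The real template plugin and dsexample_lib are written in C, so everything here is a hypothetical stand-in: the `ExampleLibrary` class only mirrors the four library functions by name, and the metadata is modeled as plain dictionaries with made-up keys rather than the SDK's metadata structures. The sketch is meant to show the in-place nature of the transform, not the actual API.

```python
from collections import deque


class ExampleLibrary:
    """Hypothetical Python stand-in for the C library dsexample_lib."""

    def __init__(self):
        # Mirrors DsExampleCtx_Init: set up internal state.
        self._pending = deque()

    def queue_input(self, item):
        # Mirrors DsExample_QueueInput: accept a frame or an object crop.
        self._pending.append(item)

    def dequeue_output(self):
        # Mirrors DsExample_DequeueOutput: return a string label.
        item = self._pending.popleft()
        return f"label-for-{item['object_id']}"

    def close(self):
        # Mirrors DsExampleCtx_Deinit: release internal state.
        self._pending.clear()


def transform_ip(frame_meta, lib, full_frame=False):
    """Attach or update labels in place; no new buffer is created."""
    if full_frame:
        lib.queue_input({"object_id": "frame"})
        frame_meta["frame_label"] = lib.dequeue_output()
    else:
        for obj in frame_meta["objects"]:
            lib.queue_input(obj)
            obj["label"] = lib.dequeue_output()
    return frame_meta


lib = ExampleLibrary()
meta = {"objects": [{"object_id": 1}, {"object_id": 2}]}
result = transform_ip(meta, lib)
lib.close()
print(result)
```

Because the function returns the same object it received, downstream elements see the original buffer with extra metadata attached, which is what makes the plugin an in-place transform.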

The application requires additional changes for parsing the configuration file related to the library and adding this new custom element to the pipeline. Refer to the following files in nvgstiva-app_sources for the changes required:

- src/deepstream_dsexample.c

- includes/deepstream_dsexample.c

- src/deepstream_config_file_parser.c

- src/deepstream_app.c

- includes/deepstream_app.h

Enabling and Configuring the Sample Plugin

The pre-compiled deepstream-app binary already includes the functionality to parse the configuration and add the sample element to the pipeline. Add the following section to an existing configuration file to enable and configure the plugin:

    enable=1 // Enable sample plugin
    gpu-id=0 // GPU id to be used in case of multiple GPUs
    processing-width=640 // Operating image width for this plugin
    processing-height=480 // Operating image height for this plugin
    full-frame=0 // Operate on individual bounding boxes/objects given by upstream component (e.g. Primary Model)
    unique-id=15 // Unique Id (should be >= 15) of plugin to identify its metadata by application or other elements
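A quick sanity check of these settings could look like the Python sketch below. It parses flat `key=value` lines, strips the explanatory `//` annotations used in this post, and applies the one constraint stated above, namely that unique-id should be at least 15. The other checks (positive dimensions, flags limited to 0 or 1) are assumptions, and `parse_settings` and `validate_settings` are hypothetical helpers rather than SDK functions.

```python
SAMPLE_SETTINGS = """\
enable=1 // Enable sample plugin
gpu-id=0
processing-width=640
processing-height=480
full-frame=0
unique-id=15
"""


def parse_settings(text):
    """Parse integer key=value lines, ignoring // annotations and # comments."""
    settings = {}
    for line in text.splitlines():
        # Splitting on "//" is safe here because no value contains a URI.
        line = line.split("//", 1)[0].strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = int(value.strip())
    return settings


def validate_settings(settings):
    """Return a list of human-readable problems; empty means no issue found."""
    problems = []
    if settings.get("unique-id", 0) < 15:
        problems.append("unique-id should be >= 15")
    for key in ("processing-width", "processing-height"):
        if settings.get(key, 0) <= 0:  # assumption: dimensions are positive
            problems.append(f"{key} should be positive")
    for key in ("enable", "full-frame"):
        if settings.get(key, 0) not in (0, 1):  # assumption: 0/1 flags
            problems.append(f"{key} should be 0 or 1")
    return problems


print(validate_settings(parse_settings(SAMPLE_SETTINGS)))
```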

Implementing Custom Logic Within the Sample Plugin

Implementing custom logic or algorithms within the plugin requires replacing the function calls DsExampleCtx_Init, DsExampleCtx_Deinit, DsExample_QueueInput, and DsExample_DequeueOutput with the corresponding functions of the custom library.

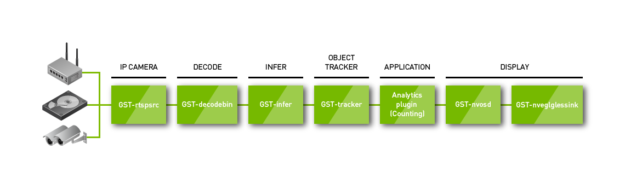

Build a Custom Application

The DeepStream SDK can be used to build custom applications for image and video analysis. The sections below show a simple approach for a people counting application, diagrammed in Figure 4.

Consider an IP camera capturing a live feed to monitor people as they enter and exit a location. The camera stream, encoded in either H.264 or H.265 format, first needs to be decoded. Once the stream has been decoded, the inferencing engine identifies people and wraps a bounding box around each of them. These bounding boxes feed into an object tracker, which generates a unique ID for each person. The resulting analytics can then be sent to whatever display the developer needs and stored for later use.
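The counting step itself reduces to collecting the distinct tracker IDs seen over time. The Python sketch below assumes each frame's detections are available as a list of dictionaries with `class` and `tracking_id` keys. These field names, the `"person"` class label, and the `count_unique_people` helper are hypothetical and do not reflect the SDK's metadata layout.

```python
def count_unique_people(frames, person_class="person"):
    """Count distinct tracker IDs among detections of the given class."""
    seen_ids = set()
    for detections in frames:
        for obj in detections:
            if obj["class"] == person_class:
                seen_ids.add(obj["tracking_id"])
    return len(seen_ids)


# Three consecutive frames: person 7 stays in view, person 9 enters later,
# and a car is detected but must not be counted.
frames = [
    [{"class": "person", "tracking_id": 7}],
    [{"class": "person", "tracking_id": 7}, {"class": "car", "tracking_id": 3}],
    [{"class": "person", "tracking_id": 7}, {"class": "person", "tracking_id": 9}],
]
print(count_unique_people(frames))
```

Relying on tracker IDs rather than per-frame detection counts avoids counting the same person once for every frame in which they appear.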

Figure 5 illustrates the key elements from the DeepStream SDK that enable the complete application outlined above.

The example demonstrates how simple it is to connect the basic building blocks. Developers can map sets of GStreamer plugins from the DeepStream SDK to form a complete solution.

To understand how to access the metadata, refer to the metadata API specs and the process_buffer function in the reference application.

DeepStream Performance

DeepStream represents a concurrent software system with the various plugins executing together while processing and passing frames through the pipeline. The performance of a DeepStream application depends on the execution characteristics of the individual plugins in conjunction with how efficiently they share the underlying hardware as they execute concurrently.

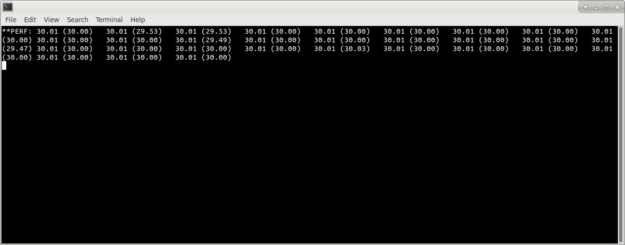

Frame throughput is the definitive indicator for whether the underlying hardware is able to support real-time execution of an application based on the input video and stream count. The reference application provided as part of the SDK illustrates the use of GStreamer probes functionality to periodically display the per-stream throughput to the console. Figure 6 illustrates the scenario without performance bottlenecks for applications processing input video at 30 fps.

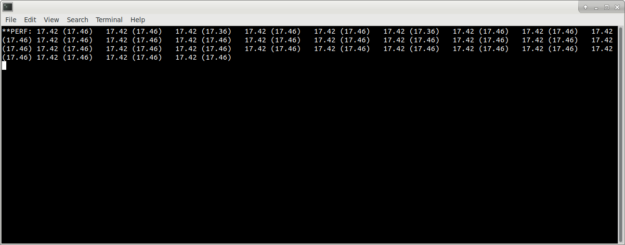

Note the slower-than-real-time performance of the second case in Figure 7, indicated by the per-stream throughput falling below the 30 fps rate of the input video.
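The real-time check described here is simple arithmetic: divide the frames processed per stream by the measurement interval and compare against the input frame rate. The Python sketch below assumes per-stream frame counts have already been collected over a known interval. The `per_stream_fps` and `streams_below_target` helpers are hypothetical and are not part of the reference application.

```python
def per_stream_fps(frame_counts, interval_sec):
    """Convert frames counted in one interval into frames per second."""
    return [count / interval_sec for count in frame_counts]


def streams_below_target(frame_counts, interval_sec, target_fps=30.0):
    """Return indices of streams whose throughput is below the target."""
    rates = per_stream_fps(frame_counts, interval_sec)
    return [i for i, fps in enumerate(rates) if fps < target_fps]


# Frames processed by three streams during a 10-second interval.
counts = [300, 300, 240]
print(per_stream_fps(counts, 10))
print(streams_below_target(counts, 10))
```

In this sample, the third stream processed 240 frames in 10 seconds, so it runs at 24 fps and would be flagged as slower than real time for 30 fps input.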

While throughput remains a handy metric to identify if an application is running into performance bottlenecks, hardware utilization helps us understand which entity within a system is the bottleneck. Hardware characteristics of the system that typically influence the performance of a DeepStream based application can be broken down into available memory size and bandwidth, the number of GPU cores, the GPU clock frequency, and the CPU configuration. Both device memory bandwidth and PCIe bandwidth for host-to-device memory transfers have performance impacts.

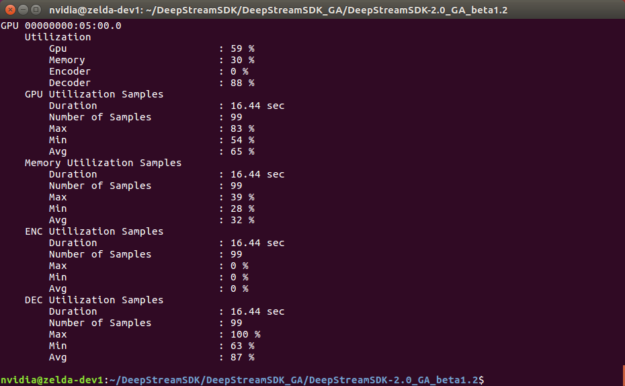

These factors determine the throughput for each of the plugins in the video pipeline and hence that of the overall pipeline. It’s common for DeepStream-based applications to be decode-bound, inference-bound (compute-bound), or CPU-bound, depending on the complexity of the deep learning workloads. Monitoring GPU utilization with nvidia-smi, together with standard Linux utilities such as top for CPU utilization, shows which of the hardware decoders (NVDECs), the GPU cores, or the CPUs are highly utilized and are therefore potential bottlenecks.
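Once utilization figures are in hand, a first-pass triage could be as simple as the Python sketch below. It takes decoder, GPU, and CPU utilization as percentages that have already been collected by whatever monitoring tool is in use. The 90 percent threshold and the `likely_bottleneck` helper are assumptions chosen for illustration, not NVIDIA guidance.

```python
def likely_bottleneck(decoder_util, gpu_util, cpu_util, threshold=90.0):
    """Name the most utilized resource if it meets the threshold, else None.

    All inputs are utilization percentages in the range 0 to 100.
    """
    readings = {
        "decode": decoder_util,   # hardware decoder (NVDEC) utilization
        "inference": gpu_util,    # GPU core utilization
        "cpu": cpu_util,          # CPU utilization
    }
    name, value = max(readings.items(), key=lambda item: item[1])
    return name if value >= threshold else None


print(likely_bottleneck(decoder_util=95.0, gpu_util=60.0, cpu_util=40.0))
print(likely_bottleneck(decoder_util=40.0, gpu_util=50.0, cpu_util=60.0))
```

A result of `None` only means no single resource is saturated by this crude rule. Throughput can still suffer from poor overlap between plugins, which is where profiling tools become useful.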

Throughput and utilization metrics provide a quick and effective way to establish the execution performance of an application and to identify hardware (plugin) bottlenecks. Kernel-level profiling with tools such as NVIDIA Nsight and NVIDIA NVVP helps break down the execution of a plugin and understand interleaving effects with other plugins. Such tools are particularly useful in establishing concurrency in execution of kernels underlying the plugins, which is key to maximizing the performance of the pipeline.

Reference Application Performance

The reference application provided with the SDK represents a complex use case comprising multi-stream input, cascaded networks and tracking functionality integrated into the pipeline. The application achieves real-time, 30fps throughput for 25 HD input streams when executed on a Tesla P4-based system.
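To put that figure in perspective, the aggregate load can be worked out directly from the stream count and frame rate. The short Python sketch below computes the total frames per second and the average time available per frame. Reading that time as a budget for the slowest pipeline stage is an interpretation that assumes the stages overlap in execution, and the helper names are hypothetical.

```python
def aggregate_fps(num_streams, fps_per_stream):
    """Total frames per second the pipeline must sustain."""
    return num_streams * fps_per_stream


def frame_budget_ms(num_streams, fps_per_stream):
    """Average time available per frame, in milliseconds."""
    return 1000.0 / aggregate_fps(num_streams, fps_per_stream)


total = aggregate_fps(25, 30)
budget = frame_budget_ms(25, 30)
print(f"{total} frames/s in aggregate, about {budget:.2f} ms per frame")
```

For 25 streams at 30 fps this comes to 750 frames per second, or roughly 1.33 ms per frame on average.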

System configuration used for performance measurement

- CPU – Intel® Xeon(R) CPU E5-2620 v4 @ 2.10GHz × 2

- GPU – Tesla P4

- System Memory – 256 GB DDR4, 2400MHz

- Ubuntu 16.04

- GPU Driver – 396.26

- CUDA – 9.2

- TensorRT – 4.0

- GPU clock frequency – 1113 MHz

Figure 8 illustrates the hardware utilization running on a configuration consisting of 25x 720p30 streams with the primary detector, tracker, three secondary classifiers, and tiled display.

Get DeepStream SDK 2.0 Today

The NVIDIA DeepStream SDK is ideal for developers looking to create AI-based solutions for video analytics applications at scale. Rather than designing an end-to-end solution from scratch, developers can start from the plugins, sample applications, and pretrained deep learning model examples that ship with DeepStream. This makes it an effective way to develop and deploy advanced AI for complex video analytics. Get started today by downloading the DeepStream SDK 2.0.