AI and HPC software environments are complex and time-consuming to build, test, and maintain. The pace of innovation continues to accelerate, making it even harder to provide an up-to-date software environment for your user community, especially for deep learning.

With NGC, system admins can give users faster access to applications, so that users can focus on advancing their science.

NGC Simplifies Application Deployments

NGC empowers researchers and scientists with performance-engineered containers featuring the latest AI and HPC software. These pre-integrated, GPU-accelerated containers include all necessary dependencies, such as the CUDA runtime, NVIDIA libraries, communication libraries, and the operating system. They have been tuned, tested, and certified by NVIDIA to run on GPU-accelerated servers. This eliminates time-consuming and difficult do-it-yourself software integration and lets your users tackle challenges that were once thought to be impossible.

Using NGC Containers with Singularity

Since Docker is not typically available to users of shared systems, many centers rely on the Singularity container runtime. Singularity was developed to better satisfy the requirements of HPC users and system administrators, including the ability to run containers without superuser privileges.

NGC containers can be easily used with Singularity. Let’s use the NGC NAMD container to illustrate. NAMD is a parallel molecular dynamics application designed for high-performance simulation of large biomolecular systems, developed by the Theoretical and Computational Biophysics Group at the University of Illinois. Using Singularity’s built-in support for Docker container registries, we can download the image and save it as a local Singularity image file (SIF).

$ singularity build namd_2.13-multinode.sif docker://nvcr.io/hpc/namd:2.13-multinode
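When several images are needed, the single build command above extends naturally to a small helper. The bash sketch below is illustrative only: the function name `build_ngc_sif`, the `<name>_<tag>.sif` naming (copied from the command above), and the `SINGULARITY` variable used to substitute `echo` for a dry run are assumptions, not part of Singularity or NGC. It skips the build when the target file already exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a local SIF from an NGC image reference.
#   build_ngc_sif hpc/namd 2.13-multinode  ->  ./namd_2.13-multinode.sif
# Set SINGULARITY=echo to print the command instead of running it.
build_ngc_sif() {
    local repo="$1"            # NGC repository, e.g. hpc/namd
    local tag="$2"             # image tag, e.g. 2.13-multinode
    local outdir="${3:-.}"     # output directory (default: current directory)
    local name="${repo##*/}"   # last path component, e.g. namd
    local sif="${outdir}/${name}_${tag}.sif"

    # Skip the build if this exact file is already present.
    if [ -e "$sif" ]; then
        echo "exists: $sif"
        return 0
    fi
    "${SINGULARITY:-singularity}" build "$sif" "docker://nvcr.io/${repo}:${tag}"
}
```

For example, `build_ngc_sif hpc/namd 2.13-multinode` runs the same `singularity build` as above and writes `./namd_2.13-multinode.sif`.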

With the local Singularity image file in hand, you can now run NAMD on a GPU-accelerated cluster and take advantage of any available high-performance network interconnect. The NGC NAMD container README has more information about running the sample datasets and integrating with resource managers such as Slurm.

Local Replica of NGC Container Images

The Singularity container image we created in the previous section represents a point in time. However, software continually evolves, with new and updated NGC containers becoming available on a regular basis. For instance, NVIDIA updates NGC Deep Learning containers monthly to integrate the latest features and optimizations. It is also inefficient for each user to download and store their own otherwise identical copies of the NGC containers.

To address this challenge, you can set up a local, shared library of NGC container images that automatically synchronizes with the master NGC registry. System admins can then provide access to the latest versions without additional effort, and users can easily take advantage of the latest container images.

Introducing NGC Container Replicator

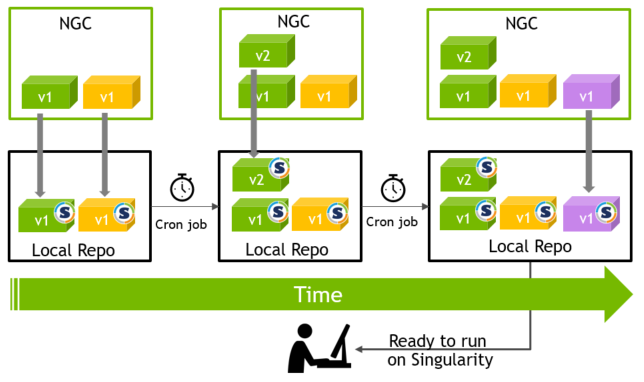

The NGC Container Replicator is an open source tool that creates a local clone of some or all of the NGC container registry. The replicator can automatically convert NGC containers to Singularity images, so you can easily set up a shared, local, and synchronized library of NGC containers for your Singularity-based user community, as shown in Figure 1.

The easiest way to use this tool is through the deepops/replicator container image; you can also build the replicator yourself from the GitHub repository. The replicator does not re-download a container image that is already in the local container library, but it does download any new or updated container images published since it last ran. You can set up the replicator to run as a daily or weekly cron job to keep your local Singularity container library automatically synchronized with NGC.
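As a sketch of the cron approach, the bash script below could be run weekly from root's crontab. It assumes the library lives in /shared/containers (the directory used in the replicator example in this post), that the NGC API key is kept in a root-only file, and that omitting `--image` mirrors the whole HPC catalog. The script path, key file location, function name `ngc_sync`, and the `DOCKER` dry-run override are hypothetical. The replicator options match the ones used in this post, except `-it` is dropped because cron provides no terminal.

```shell
#!/usr/bin/env bash
# Hypothetical /usr/local/sbin/ngc-sync.sh, run from root's crontab, e.g.
#   0 3 * * 0  /usr/local/sbin/ngc-sync.sh run >> /var/log/ngc-sync.log 2>&1
# (every Sunday at 03:00). Set DOCKER=echo to print the command instead.

LIBRARY_DIR="${LIBRARY_DIR:-/shared/containers}"  # shared Singularity library
KEY_FILE="${KEY_FILE:-/root/.ngc_api_key}"         # assumed root-only (mode 600)

ngc_sync() {
    local key
    # Reading the key from a file keeps it out of the crontab itself.
    key="$(cat "$KEY_FILE")" || return 1
    # No -it here: cron has no terminal to attach to.
    # No --image: the whole HPC project is mirrored.
    "${DOCKER:-docker}" run --rm \
        -v /var/run/docker.sock:/var/run/docker.sock \
        -v "${LIBRARY_DIR}:/output" \
        deepops/replicator --project=hpc --singularity --no-exporter \
        --api-key="$key"
}

# Only start a sync when invoked as "ngc-sync.sh run".
if [ "${1:-}" = "run" ]; then
    ngc_sync
fi
```

Because the replicator skips images it already has, a weekly run mostly costs the time to check the registry plus whatever was published since the previous run.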

Although the replicator itself runs in Docker, you don’t need to make Docker accessible to your user community. You can run the replicator on an auxiliary node or even a virtual machine; only the container library directory needs to be accessible to both the replicator (read-write) and your user community (read-only).
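To double-check the read-only side, you could look for anything in the library that group members or other users can write to. This is a minimal bash sketch built on standard `find` permission tests; the function name `find_writable_entries` is hypothetical, and because it inspects only mode bits, ACLs and network file system export options are not covered.

```shell
#!/usr/bin/env bash
# Hypothetical check: list entries in the shared library that are writable
# by group (mode bit 020) or by others (mode bit 002).
# Empty output means no entry is group- or world-writable.
find_writable_entries() {
    local libdir="${1:-/shared/containers}"
    find "$libdir" \( -perm -020 -o -perm -002 \) -print
}
```

Running `find_writable_entries /shared/containers` after each sync is a cheap way to catch a permission change before users notice it.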

For example, to download the NGC NAMD containers to a directory named /shared/containers:

$ sudo docker run --rm -it -v /var/run/docker.sock:/var/run/docker.sock -v /shared/containers:/output deepops/replicator --project=hpc --image=namd --singularity --no-exporter --api-key=<your NGC API key>

```
...
INFO - images to be fetched: defaultdict(<class 'dict'>,
{ 'hpc/namd': { '2.12-171025': { 'docker_id': '2017-11-21T04:08:37.561Z',
                                 'registry': 'nvcr.io'},
                '2.13-multinode': { 'docker_id': '2019-02-25T22:40:35.959Z',
                                    'registry': 'nvcr.io'},
                '2.13-singlenode': { 'docker_id': '2019-02-25T22:40:03.951Z',
                                     'registry': 'nvcr.io'},
                '2.13b2-multinode': { 'docker_id': '2018-11-10T23:00:19.258Z',
                                      'registry': 'nvcr.io'},
                '2.13b2-singlenode': { 'docker_id': '2018-11-10T23:04:25.710Z',
                                       'registry': 'nvcr.io'}}})
INFO - Pulling hpc/namd:2.13-multinode
INFO - cloning nvcr.io/hpc/namd:2.13-multinode --> /output/nvcr.io_hpc_namd:2.13-multinode.sif
...
INFO - Pulling hpc/namd:2.13-singlenode
INFO - cloning nvcr.io/hpc/namd:2.13-singlenode --> /output/nvcr.io_hpc_namd:2.13-singlenode.sif
...
```
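Judging from the "cloning" log lines, the replicator names each Singularity image after its full image reference, with every `/` replaced by `_` and the tag kept after a `:` (for example `nvcr.io_hpc_namd:2.13-multinode.sif`). The bash sketch below reproduces that naming so users can locate an image in the shared library; the function name `ngc_sif_path` is hypothetical, and the mapping is inferred from this output rather than taken from the replicator's documentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map an NGC image reference to the SIF path the
# replicator appears to produce (naming inferred from its log output).
#   ngc_sif_path nvcr.io/hpc/namd:2.13-multinode /shared/containers
#   -> /shared/containers/nvcr.io_hpc_namd:2.13-multinode.sif
ngc_sif_path() {
    local ref="$1"                          # e.g. nvcr.io/hpc/namd:2.13-multinode
    local libdir="${2:-/shared/containers}" # shared library directory
    # Replace every "/" in the reference with "_" and append .sif.
    printf '%s/%s.sif\n' "$libdir" "${ref//\//_}"
}
```

A user could then open a shell in that image with `singularity shell --nv "$(ngc_sif_path nvcr.io/hpc/namd:2.13-multinode)"`, where `--nv` is Singularity's option for making the host's NVIDIA driver and GPUs available inside the container.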

The --project=hpc and --image=namd options configure the replicator to download only the NAMD containers from the HPC section of NGC. Use multiple --image options to download additional HPC containers, or omit --image entirely to download the entire NGC HPC application container catalog.
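Because --image can be repeated, a site that keeps its application list in a script can generate one option per entry and, with an empty list, leave the option out so the whole HPC catalog is mirrored. A minimal bash sketch; the function name `replicator_image_args` is hypothetical, and the `gromacs` entry in the usage note assumes that image is published under the HPC project.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one --image=<name> option per argument.
# With no arguments nothing is printed, so no --image option is passed
# and the replicator fetches the whole project.
replicator_image_args() {
    local img
    for img in "$@"; do
        printf -- '--image=%s\n' "$img"
    done
}
```

For example, `mapfile -t opts < <(replicator_image_args namd gromacs)` followed by `... deepops/replicator --project=hpc "${opts[@]}" --singularity ...` expands to two --image options.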

Specifying the --singularity option tells the replicator to generate Singularity image files. The --no-exporter option disables generating Docker container tar archives; those archives are useful for “sneakernetting” NGC containers to a local Docker registry running on an air-gapped system.

You need an NGC API key to use the replicator. To obtain one, create an NGC account, go to the configuration tab, and click “Get API key”. Only the replicator needs the API key: it does not need to be shared with your users, and it is not otherwise required to download or run the container images.

The replicator can also download AI containers from NGC. Many of these containers are updated monthly, and some have versions dating back to 2017. Only the most recent versions are likely to be relevant to your users. For example, to download the TensorFlow and PyTorch containers from 2019 and later:

$ sudo docker run --rm -it -v /var/run/docker.sock:/var/run/docker.sock -v /shared/containers:/output deepops/replicator --project=nvidia --image=tensorflow --image=pytorch --min-version=19.01 --singularity --no-exporter --api-key=<your NGC API key>
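The deep learning containers use year-month tags such as `19.03-py3`, so a cutoff like --min-version=19.01 presumably amounts to a year-month comparison. The bash sketch below illustrates that kind of check, for instance to spot older images already in a local library; it is an independent illustration, not the replicator's implementation, and the function name `tag_at_least` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a YY.MM[-suffix] tag is at least MIN (YY.MM).
# Assumes numeric YY.MM tags; tags such as "latest" are not handled.
#   tag_at_least 19.03-py3 19.01  -> exit status 0
#   tag_at_least 18.12-py3 19.01  -> exit status 1
tag_at_least() {
    local tag="${1%%-*}" min="$2"          # drop any suffix such as -py3
    local ty="${tag%%.*}" tm="${tag#*.}"   # year and month of the tag
    local my="${min%%.*}" mm="${min#*.}"   # year and month of the cutoff
    # 10# forces base 10, so months like 08 and 09 are not parsed as octal.
    (( 10#$ty * 100 + 10#$tm >= 10#$my * 100 + 10#$mm ))
}
```

An admin could, for example, extract the tag from each TensorFlow image file name in the library and list those older than the cutoff before deciding what to prune.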

Note that the deep learning containers currently support multi-GPU scaling only within a single node; multi-node support with Singularity will be added soon.

Summary

NGC containers enable your user community to easily take advantage of the latest GPU-accelerated software without dealing with the complexity of AI and HPC software environments. Set up the NGC Container Replicator to make this even easier by providing a local, shared library of Singularity images synchronized with NGC. Your users will thank you for making it possible to run the latest AI and HPC software just by changing the name of the local container image they use.