Bare-metal installations of HPC applications on a shared system require system administrators to build environment modules for hundreds of applications, which is complicated, high-maintenance, and time-consuming. Furthermore, upgrading an application to the latest revision requires carefully updating its environment modules.

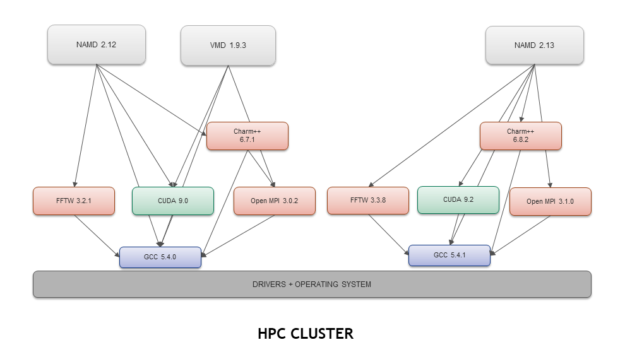

Networks of dependencies often break during new installs, and upgrades can unintentionally crash other applications on the system. In the example shown in figure 1, let’s assume that the system has the NAMD 2.12 molecular dynamics software and VMD 1.9.3 for visualization. To upgrade NAMD to 2.13, the system administrator has to update various dependencies, including upgrading CHARM++ from 6.7.1 to 6.8.2. However, upgrading CHARM++ breaks VMD’s dependency network, and VMD crashes.

Containers Simplify Application Deployments

Containers greatly improve reproducibility and ease of use. They also eliminate time-consuming, error-prone bare-metal application installations, which makes application deployment in HPC simple.

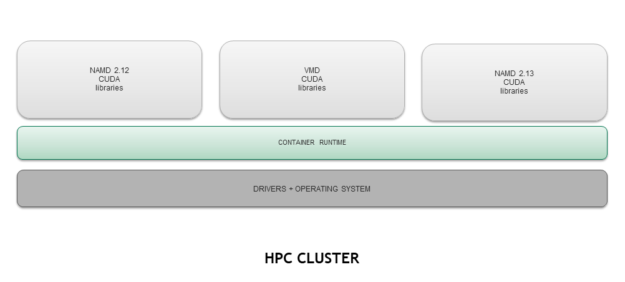

Containers make life simpler for both system administrators and end users. As figure 2 shows, a container packages the application together with all the dependencies required to launch it. Let’s walk through the same scenario again, but this time with containers. The user follows three simple steps to upgrade NAMD:

- Pull the latest image from the container registry

- Run the container

- Deploy the latest version of NAMD
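The three steps above can be sketched with Singularity, the runtime discussed later in this post. This is a minimal sketch and not an NGC-documented procedure. The following are hypothetical assumptions that you should check against the NGC README for the NAMD container: the image path `nvcr.io/hpc/namd`, the `NAMD_TAG` placeholder, the `namd2` launch line, and the input file `input.namd`. By default the script only prints the commands (`DRY_RUN=1`). Set `DRY_RUN=0` to execute them.

```shell
#!/usr/bin/env bash
# Minimal sketch of the three-step NAMD upgrade with Singularity.
# Hypothetical assumptions (check the NGC NAMD README):
#   - the image is published as docker://nvcr.io/hpc/namd:<tag>
#   - NAMD_TAG is set to the newer release's tag
#   - the container provides namd2 on its PATH
#   - input.namd is your own simulation input in the current directory

# Print a command when DRY_RUN=1 (the default); execute it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

upgrade_namd() {
  local tag="${NAMD_TAG:-REPLACE_WITH_NGC_TAG}"
  local uri="docker://nvcr.io/hpc/namd:${tag}"
  local image="namd_${tag}.simg"
  # Step 1: pull the latest image and convert it to a local image file.
  run singularity pull --name "$image" "$uri"
  # Steps 2 and 3: run the container and launch the new NAMD release.
  # Singularity normally bind-mounts the current directory, so the
  # relative input path resolves; host libraries (and VMD) are untouched.
  run singularity exec --nv "$image" namd2 input.namd
}

if [ "${BASH_SOURCE[0]}" = "$0" ]; then
  upgrade_namd
fi
```

For example, `NAMD_TAG=<tag> ./upgrade_namd.sh` prints the two commands for review. Nothing on the host, including the CHARM++ library that VMD depends on, is modified.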

Reproducibility is very important in HPC, but it is hard to achieve on bare metal because the libraries on different systems are very likely to differ. Since an application inside a container always uses the same environment, its performance is reproducible and portable.

NVIDIA offers containers in its NVIDIA GPU Cloud (NGC) registry of Docker images, which provide faster access to GPU-accelerated computing applications. HPC users can run these Docker-format containers with little effort. Let’s look at how you can jump on the container bandwagon.

Myth: Docker Containers Can’t Be Used in HPC

Docker is one of the most popular container technologies. It is deployed for microservices and heavily used in enterprise and cloud applications. However, the Docker runtime has seen low adoption in the HPC world because running Docker and executing a containerized application requires root access. HPC system administrators consider this a major security flaw. Furthermore, the Docker runtime does not easily support MPI, which makes adoption challenging for compute-heavy HPC workloads.

Meanwhile, Singularity, Shifter, Charliecloud, and a few other container runtimes have been developed to meet HPC needs, including security and MPI support. This has enabled rapid adoption of containers at national labs and universities.

A misconception exists that Docker images can only run with the Docker runtime and hence can’t be used in HPC. The Singularity runtime addresses the two major gaps, security and MPI, which allows HPC developers to adopt containers. In addition, Singularity is designed to load and run Docker-format containers, and this has helped make it one of the most popular container runtimes for HPC.
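The following minimal sketch illustrates both points: it needs no root access and it uses a Docker-format image. The commands run a public Docker Hub image with Singularity as an ordinary user and compare the user ID inside the container with the one on the host. Singularity runs the containerized process as the invoking user, without sudo or a Docker daemon. The `ubuntu:16.04` image is an arbitrary choice for illustration. The script skips the check if `singularity` is not installed.

```shell
#!/usr/bin/env bash
# Sketch: run a Docker-format image with Singularity as a regular user.
# ubuntu:16.04 is an arbitrary public image chosen for illustration.

check_same_user() {
  local host_uid container_uid
  host_uid="$(id -u)"
  # No sudo and no Docker daemon: Singularity converts the Docker image
  # and runs the command as the calling user.
  container_uid="$(singularity exec docker://ubuntu:16.04 id -u)"
  if [ "$host_uid" = "$container_uid" ]; then
    echo "same user inside and outside the container (uid $host_uid)"
  else
    echo "uid mismatch: host $host_uid, container $container_uid" >&2
    return 1
  fi
}

if [ "${BASH_SOURCE[0]}" = "$0" ]; then
  if command -v singularity >/dev/null 2>&1; then
    check_same_user
  else
    echo "singularity not found; skipping" >&2
  fi
fi
```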

HPC Containers from NVIDIA GPU Cloud

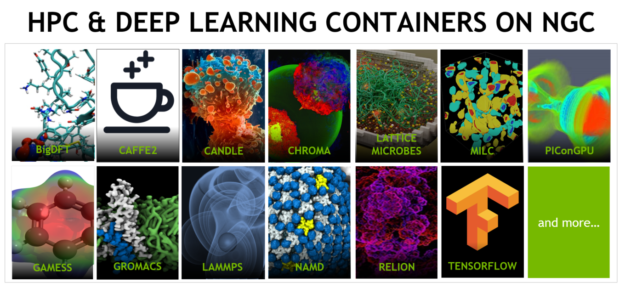

NVIDIA GPU Cloud (NGC) offers a container registry of Docker images with over 35 HPC, HPC visualization, deep learning, and data analytics containers that are optimized for GPUs and deliver accelerated performance (figure 3). The registry includes some of the most popular applications, such as GROMACS, NAMD, ParaView, VMD, and TensorFlow.

The HPC application containers are updated with the latest application revisions, and the deep learning framework containers receive monthly updates to deliver maximum performance on NVIDIA GPUs. Let’s look at how to run these Docker-based HPC containers in Singularity.

See the full list of containers and try them on your Pascal- or Volta-powered workstation, HPC cluster, or in the cloud.

Run Docker container images in Singularity

Let’s take a look at one example. The MILC package is part of a set of codes written by the MIMD Lattice Computation (MILC) collaboration and used to study quantum chromodynamics (QCD), the theory of the strong interaction in subatomic physics. Using MILC, let’s learn how to convert NGC Docker images to Singularity format and run them in the Singularity (v2.5.0+) runtime. Running other HPC containers follows a similar process, and the detailed steps are included in each container’s README.

Accessing the registry

Start by creating a free account on NGC. Once you’ve created an account and have logged in, you’ll be able to generate the NGC API key required to pull all NGC containers, including the MILC container used in this example.

The NGC documentation describes in more detail how to obtain and use your NGC API key.

Setting NGC credentials

Before running with Singularity, you must set the NGC container registry authentication credentials.

This is most easily accomplished by setting the following environment variables:

```shell
$ export SINGULARITY_DOCKER_USERNAME='$oauthtoken'
$ export SINGULARITY_DOCKER_PASSWORD=<NVIDIA NGC Cloud Services API key>
```
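If you type the API key directly on the command line, it can end up in your shell history. The following minimal sketch assumes you keep the key in a file that only you can read, and loads it into the same two variables. The default path `~/.ngc/api_key` and the function name `load_ngc_credentials` are hypothetical choices, not NGC conventions.

```shell
#!/usr/bin/env bash
# Sketch: export NGC credentials for Singularity without typing the key
# on the command line. The default key file path is hypothetical.

load_ngc_credentials() {
  local key_file="${1:-$HOME/.ngc/api_key}"
  if [ ! -r "$key_file" ]; then
    echo "cannot read NGC API key file: $key_file" >&2
    return 1
  fi
  # Read the first line; '|| true' keeps a key without a trailing newline.
  local key=""
  IFS= read -r key < "$key_file" || true
  key="${key%$'\r'}"   # drop a stray Windows line ending, if any
  if [ -z "$key" ]; then
    echo "NGC API key file is empty: $key_file" >&2
    return 1
  fi
  # The username is the literal string $oauthtoken, hence single quotes.
  export SINGULARITY_DOCKER_USERNAME='$oauthtoken'
  export SINGULARITY_DOCKER_PASSWORD="$key"
}
```

Run `load_ngc_credentials` in your current shell, for example after sourcing the file, so that the exported variables reach later `singularity` commands. A child process cannot export variables back to its parent shell. Restrict the key file with `chmod 600`.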

Pull the container

Now that you’ve set up your credentials, you’ll need to create a few directories required by the MILC example:

```shell
mkdir data run
```

Pulling the MILC Docker image from NGC, converting it to a Singularity image, and opening an interactive shell within the container can be accomplished with a single command. This command mounts the host directories created above into the container, allowing for application I/O:

```shell
singularity shell --nv -B "$(pwd)/data:/data" -B "$(pwd)/run:/sc15_cluster" \
  docker://nvcr.io/hpc/milc:cuda9-ubuntu1604-quda0.8-mpi3.0.0-patch4Oct2017-sm60
```
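Starting from the `docker://` URI as in the single command above typically repeats the image conversion on every launch, even when downloaded layers are cached. As an alternative, the minimal sketch below pulls and converts the image once into a local file and then reuses that file for the interactive shell. It assumes Singularity 2.x syntax (`singularity pull --name`). The file name `milc.simg` is an arbitrary choice, and the script only prints the commands unless `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Sketch: convert the NGC MILC image once, then reuse the local file.
# milc.simg is an arbitrary file name; DRY_RUN=1 (default) only prints.

MILC_URI="docker://nvcr.io/hpc/milc:cuda9-ubuntu1604-quda0.8-mpi3.0.0-patch4Oct2017-sm60"

# Print a command when DRY_RUN=1; execute it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

milc_shell() {
  local image="${1:-milc.simg}"
  # Host directories used for application I/O.
  run mkdir -p data run
  # Pull and convert only if the image file does not exist yet.
  if [ ! -f "$image" ]; then
    run singularity pull --name "$image" "$MILC_URI"
  fi
  run singularity shell --nv -B "$PWD/data:/data" -B "$PWD/run:/sc15_cluster" "$image"
}

if [ "${BASH_SOURCE[0]}" = "$0" ]; then
  milc_shell "$@"
fi
```

Keeping the converted file also pins the exact image you ran, which supports the reproducibility argument made earlier in this post.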

You now have an interactive shell within the NGC MILC container. Note that the image is still the Docker-based NGC image; Singularity is simply the runtime that executes it.

Run MILC

You can launch the example from within this interactive shell, replacing {GPU_COUNT} with the integer number of GPUs available on your system:

```shell
Singularity> /workspace/examples/sc15_cluster.sh {GPU_COUNT}
```
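If you would rather not enter the GPU count by hand, you can derive it from `nvidia-smi -L`, which prints one line per GPU. The minimal sketch below does this and then launches the same example non-interactively with `singularity exec`, using the NGC image URI and host directories from this walkthrough. The `MILC_IMAGE` and `GPU_COUNT` overrides and the function names are hypothetical additions. The script prints the command unless `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Sketch: run the MILC sc15_cluster example without an interactive shell.
# MILC_IMAGE and GPU_COUNT are optional overrides introduced here.

MILC_IMAGE="${MILC_IMAGE:-docker://nvcr.io/hpc/milc:cuda9-ubuntu1604-quda0.8-mpi3.0.0-patch4Oct2017-sm60}"

# Count GPUs: nvidia-smi -L prints one "GPU <n>: ..." line per device.
gpu_count() {
  if [ -n "${GPU_COUNT:-}" ]; then
    echo "$GPU_COUNT"
  elif command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi -L | grep -c '^GPU'
  else
    echo "nvidia-smi not found; set GPU_COUNT" >&2
    return 1
  fi
}

run_milc() {
  local n
  n="$(gpu_count)" || return 1
  local -a cmd
  cmd=(singularity exec --nv
    -B "$PWD/data:/data" -B "$PWD/run:/sc15_cluster"
    "$MILC_IMAGE" /workspace/examples/sc15_cluster.sh "$n")
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "${cmd[*]}"   # show the command only
  else
    "${cmd[@]}"
  fi
}

if [ "${BASH_SOURCE[0]}" = "$0" ]; then
  run_milc
fi
```

A non-interactive form like this is also easier to place inside a batch job script than an interactive `singularity shell` session.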

Running containers on multi-node systems is a more complex process and is out of scope for this post; it will be covered in an upcoming article.

Performance

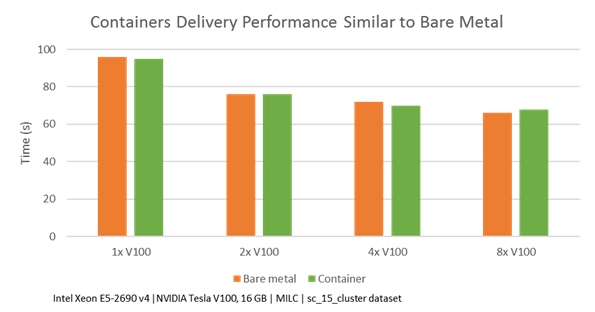

Running applications from containers has little effect on performance. As the chart shows, containerized applications deliver essentially the same performance as their bare-metal counterparts.

Simplify HPC Application Deployment

NGC containers deliver optimized performance and give you access to the latest versions of HPC applications and their newest features, without requesting an upgrade of the version currently installed on the system.

These containers are tested on various systems, including GPU-powered workstations, NVIDIA DGX systems, and NVIDIA GPU-supported cloud service providers such as AWS, GCP, and Oracle Cloud Infrastructure, to ensure a smooth user experience.

Pull containers from NGC today to experience how easy it is to deploy an application in an HPC environment and make scientific breakthroughs faster.