“Ray tracing is the future, and it always will be!” has been the tongue-in-cheek phrase used by graphics developers for decades when asked whether real-time ray tracing will ever be feasible.

Everyone seems to agree on the first part: ray tracing is the future. That’s because ray tracing is the only technology we know of that enables the rendering of truly photorealistic images. It’s no coincidence that every offline renderer used in the movie industry, where compromises on image quality are unacceptable, is based on ray tracing. Rasterization has made immense strides over the years, and it is still evolving even today. But it is also fundamentally limited in the type of effects it can compute. Truly taking graphics to the next level requires new underlying technology. This is where ray tracing comes in, and this is why real-time ray tracing has long been the dream of gamers and game developers.

So will ray tracing always remain a dream of the future, and never arrive in the present? At GDC 2018, NVIDIA unveiled RTX, a high-performance implementation that will power all ray tracing APIs supported by NVIDIA on Volta and future GPUs. At the same event, Microsoft announced the integration of ray tracing as a first-class citizen into their industry standard DirectX API.

Putting these two technologies together forms such a powerful combination that we can confidently answer the above question: the future is here! This is not hyperbole: leading game studios are developing upcoming titles using RTX through DirectX today. Ray tracing in games is no longer a pipe dream. It’s happening, and it will usher in a new era of real-time graphics.

Unreal Engine real-time ray tracing demo running on NVIDIA RTX

Diving in: a peek into the DirectX Raytracing API

The API that Microsoft announced, DirectX Raytracing (DXR), is a natural extension of DirectX 12. It fully integrates ray tracing into DirectX, and makes it a companion (as opposed to a replacement) to rasterization and compute.

API Overview

The DXR API focuses on delivering high performance by giving the application significant low-level control, as with earlier versions of DirectX 12. Several design decisions reflect this:

- All ray tracing related GPU work is dispatched via command lists and queues that the application schedules. Ray tracing therefore integrates tightly with other work such as rasterization or compute, and can be enqueued efficiently by a multithreaded application.

- Ray tracing shaders are dispatched as grids of work items, similar to compute shaders. This lets the implementation utilize the massive parallel processing throughput of GPUs and perform low-level scheduling of work items as appropriate for the given hardware.

- The application retains the responsibility of explicitly synchronizing GPU work and resources where necessary, as it does with rasterization and compute. This allows developers to optimize for the maximum amount of overlap between ray tracing, rasterization, compute work, and memory transfers.

- Ray tracing and other dispatch types share all resources such as textures, buffers, and constants. No conversion, duplication, or mapping is required to access a resource from ray tracing shaders.

- Resources that hold ray tracing specific data, such as acceleration structures and shader tables (see below), are entirely managed by the application. No memory allocations or transfers happen implicitly “under the hood”.

- Shader compilation is explicit and therefore under full application control. Shaders can be compiled individually or in batch. Compilation can be parallelized across multiple CPU threads if desired.

At a high level, DXR introduces three new concepts to DirectX that the application must manage:

- Ray Tracing Pipeline State Objects contain the compiled shader code that gets executed during a ray tracing dispatch.

- Acceleration Structures contain the data structures used to accelerate ray tracing itself, i.e. the search for intersections between rays and scene geometry.

- Shader Tables define the relationship between ray tracing shaders, their resources (textures, constants, etc.), and scene geometry.

Let’s take a closer look at these.

The Ray Tracing Pipeline

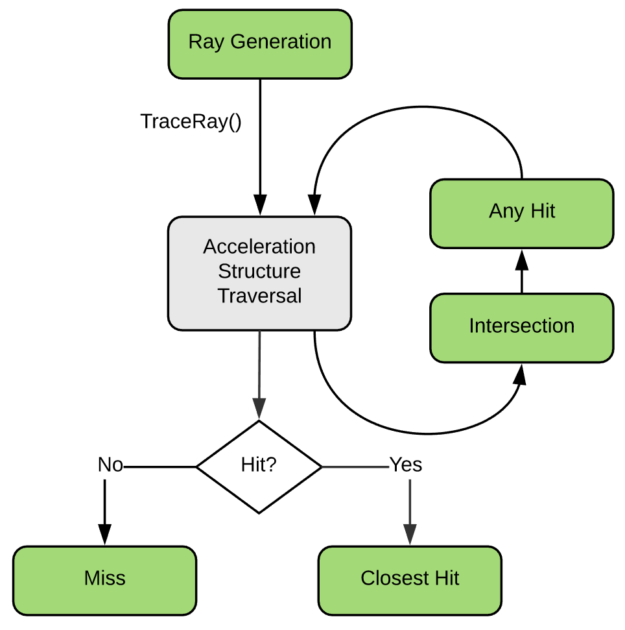

The traditional raster graphics pipeline defines a number of shader types: vertex shader, geometry shader, pixel shader, etc. Analogous to that model, a ray tracing pipeline consists of five new shader types which are executed at different stages:

- The ray generation shader is the first thing invoked in a ray tracing dispatch. Ray generation shaders are comparable to compute shaders, with the added capability of calling the new HLSL function TraceRay(). This function casts a single ray into the scene to search for intersections, triggering other shaders in the process. A ray generation shader may call TraceRay() as many times as it likes.

- Intersection and any hit shaders are invoked whenever TraceRay() finds a potential intersection between the ray and the scene. The intersection shader determines whether the ray intersects an individual geometric primitive — for example a sphere, a subdivision surface, or really any primitive type you can code up! The most common type is, of course, triangles, for which the API offers special support through a built-in, highly tuned intersection shader. Once an intersection is found, the any hit shader may be used to process it further or potentially discard it. Any hit shaders commonly implement alpha testing by performing a texture lookup and deciding based on the texel’s value whether or not to discard an intersection.

- Once TraceRay() has completed the search for ray-scene intersections, either a closest hit or a miss shader is invoked, depending on the outcome of the search. The closest hit shader is typically where most shading operations take place: material evaluation, texture lookups, and so on. The miss shader can be used to implement environment lookups, for example. Both closest hit and miss shaders can recursively trace rays by calling TraceRay() themselves.
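To make the order of shader invocations concrete, here is a minimal CPU-side sketch in C++ of what a single TraceRay() call does conceptually. It is an illustration under loud assumptions, not how DXR or RTX is implemented: a brute-force loop replaces the acceleration structure, and the names `Primitive`, `makePlaneZ`, and `traceRay` are hypothetical helpers invented for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <limits>
#include <vector>

struct Ray { float origin[3]; float dir[3]; float tMin; float tMax; };

// Simplified payload: which primitive was hit (-1 for a miss) and how far away.
struct Payload { int hitPrimitive; float hitDistance; };

// Hypothetical CPU stand-in for one piece of geometry plus its hit group.
struct Primitive {
    // "Intersection shader": returns true and writes t if the ray hits.
    std::function<bool(const Ray&, float&)> intersect;
    // Optional "any hit shader": returns false to discard a candidate hit.
    std::function<bool(float)> anyHit;
};

// Hypothetical test primitive: an infinite plane at a fixed z.
inline Primitive makePlaneZ(float z, bool opaque) {
    Primitive p;
    p.intersect = [z](const Ray& r, float& t) {
        if (r.dir[2] == 0.0f) return false;  // ray is parallel to the plane
        t = (z - r.origin[2]) / r.dir[2];
        return t > 0.0f;
    };
    // A non-opaque plane rejects every hit, like a fully transparent texel.
    if (!opaque) p.anyHit = [](float) { return false; };
    return p;
}

// Conceptual model of TraceRay(): a brute-force loop stands in for the
// acceleration structure traversal.
inline void traceRay(const std::vector<Primitive>& scene, const Ray& ray,
                     Payload& payload) {
    assert(ray.tMin <= ray.tMax);
    float closestT = ray.tMax;
    int closestIndex = -1;
    for (std::size_t i = 0; i < scene.size(); ++i) {
        float t = 0.0f;
        if (!scene[i].intersect(ray, t)) continue;             // intersection shader
        if (t < ray.tMin || t >= closestT) continue;           // outside the current t-interval
        if (scene[i].anyHit && !scene[i].anyHit(t)) continue;  // any hit shader discards
        closestT = t;                                          // accept: shrink the interval
        closestIndex = static_cast<int>(i);
    }
    if (closestIndex >= 0) {
        payload.hitPrimitive = closestIndex;  // closest hit shader runs once
        payload.hitDistance = closestT;
    } else {
        payload.hitPrimitive = -1;            // miss shader runs instead
        payload.hitDistance = std::numeric_limits<float>::infinity();
    }
}
```

Note how the intersection and any hit steps may run many times per ray, while exactly one of closest hit or miss runs at the end.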

The pipeline constructed from these shaders defines a single-ray programming model. Semantically, each GPU thread handles one ray at a time and cannot communicate with other threads or see other rays currently being processed. This keeps things simple for developers writing shaders, while allowing room for vendor-specific optimizations under the hood of the API.

The main way for the different shader types to communicate with each other is the ray payload. The payload is simply a user-defined struct that’s passed as an inout parameter to TraceRay(). Any hit, closest hit, and miss shaders can read and write the payload, and therefore pass back the result of their computations to the caller of TraceRay().

Examples

To get a feel for what this looks like in practice, let’s consider some simple examples:

Basic ray generation shader that traces a primary ray

    // An example payload struct. We can define and use as many different ones as we like.
    struct Payload
    {
        float4 color;
        float hitDistance;
    };

    // The acceleration structure we'll trace against.
    // This represents the geometry of our scene.
    RaytracingAccelerationStructure scene : register(t5);

    [shader("raygeneration")]
    void RayGenMain()
    {
        // Get the location within the dispatched 2D grid of work items
        // (often maps to pixels, so this could represent a pixel coordinate).
        uint2 launchIndex = DispatchRaysIndex();

        // Define a ray, consisting of origin, direction, and the t-interval
        // we're interested in.
        RayDesc ray;
        ray.Origin = SceneConstants.cameraPosition; // assume this constant buffer exists
        ray.Direction = computeRayDirection( launchIndex ); // assume this function exists
        ray.TMin = 0;
        ray.TMax = 100000;

        Payload payload;

        // Trace the ray using the payload type we've defined.
        // Shaders that are triggered by this must operate on the same payload type.
        TraceRay( scene, 0 /*flags*/, 0xFF /*mask*/, 0 /*hit group offset*/,
                  1 /*hit group index multiplier*/, 0 /*miss shader index*/, ray, payload );

        // assume outputTexture is a writable 2D texture declared elsewhere
        outputTexture[launchIndex.xy] = payload.color;
    }

The above code shows the simple case of primary rays being traced, i.e. rays are sent into the scene originating from a virtual camera. Of course, ray generation shaders are by no means limited to this. Basing ray generation on rasterized g-buffer data (e.g. to trace reflections) is another common use case. This is often cheap, because many engines generate a g-buffer anyway. It’s also a great example of how ray tracing can be used to complement rasterization, rather than replace it.
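As a rough illustration of the g-buffer case, the C++ sketch below builds a reflection ray on the CPU from one g-buffer sample. Everything here is an assumption made for the example: the `GBufferSample` layout (world-space position and normal), the small `tMin` offset used to avoid re-hitting the surface the ray starts on, and all helper names. In a real renderer the same arithmetic would live in the ray generation shader.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

inline Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);  // a zero-length vector has no direction
    return {v.x / len, v.y / len, v.z / len};
}

// Mirror reflection of the incoming direction d about the unit normal n:
// r = d - 2 (d . n) n
inline Vec3 reflect(Vec3 d, Vec3 n) {
    const float k = 2.0f * dot(d, n);
    return {d.x - k * n.x, d.y - k * n.y, d.z - k * n.z};
}

// Hypothetical g-buffer contents for one pixel.
struct GBufferSample { Vec3 worldPosition; Vec3 worldNormal; };

// CPU mirror of the fields used in the HLSL RayDesc above.
struct RayDesc { Vec3 origin; Vec3 direction; float tMin; float tMax; };

// Start the ray at the rasterized surface point instead of at the camera.
inline RayDesc makeReflectionRay(const GBufferSample& g, Vec3 cameraPosition) {
    const Vec3 view = normalize(sub(g.worldPosition, cameraPosition));
    const Vec3 n = normalize(g.worldNormal);
    // tMin is slightly above zero so the ray does not hit its own surface.
    return RayDesc{g.worldPosition, reflect(view, n), 0.001f, 100000.0f};
}
```

The primary visibility work has already been done by the rasterizer, so only the secondary ray needs to be traced.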

Closest hit shader that visualizes barycentrics

    // Attributes contain hit information and are filled in by the intersection shader.
    // For the built-in triangle intersection shader, the attributes always consist of
    // the barycentric coordinates of the hit point.
    struct Attributes
    {
        float2 barys;
    };

    [shader("closesthit")]
    void ClosestHitMain( inout Payload payload, in Attributes attr )
    {
        // Read the intersection attributes and write a result into the payload.
        payload.color = float4( attr.barys.x, attr.barys.y,
                                1 - attr.barys.x - attr.barys.y, 1 );

        // Demonstrate one of the new HLSL intrinsics: query distance along current ray
        payload.hitDistance = RayTCurrent();
    }
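To see where barycentrics like these come from, here is a CPU-side C++ sketch of a ray-triangle test using the well-known Möller–Trumbore algorithm. This is a swapped-in textbook technique chosen for illustration; the source does not say which algorithm the built-in triangle intersection shader uses, and all names below are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Hit distance plus the two barycentric weights (u for v1, v for v2).
// The weight of v0 is implied: 1 - u - v.
struct TriangleHit { float t; float u; float v; };

// Moller-Trumbore ray-triangle intersection. Returns true on a hit in front
// of the ray origin and fills in `hit`.
inline bool intersectTriangle(Vec3 orig, Vec3 dir, Vec3 v0, Vec3 v1, Vec3 v2,
                              TriangleHit& hit) {
    assert(dot(dir, dir) > 0.0f);  // direction must be non-zero
    const float eps = 1e-7f;
    const Vec3 e1 = sub(v1, v0);
    const Vec3 e2 = sub(v2, v0);
    const Vec3 p = cross(dir, e2);
    const float det = dot(e1, p);
    if (std::fabs(det) < eps) return false;  // ray parallel to triangle plane
    const float inv = 1.0f / det;
    const Vec3 s = sub(orig, v0);
    const float u = dot(s, p) * inv;
    if (u < 0.0f || u > 1.0f) return false;
    const Vec3 q = cross(s, e1);
    const float v = dot(dir, q) * inv;
    if (v < 0.0f || u + v > 1.0f) return false;
    const float t = dot(e2, q) * inv;
    if (t <= 0.0f) return false;             // intersection is behind the origin
    hit = TriangleHit{t, u, v};
    return true;
}
```

With `u` and `v` playing the role of `attr.barys.x` and `attr.barys.y`, the color written by the closest hit shader above would be `(u, v, 1 - u - v, 1)`, and `t` corresponds to the distance along the ray.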

State Objects

When using traditional rasterization, only the shaders required by the current object being drawn have to be active on the GPU. Therefore, rasterization pipeline objects are relatively small, containing a single set of vertex shader, pixel shader, etc. This is an important difference compared to ray tracing: we’re given the power to arbitrarily shoot rays into the scene, which means they could hit anything! Therefore, all shaders for all objects that could potentially be hit must be resident on the GPU and ready for execution.

The mechanism that groups shaders together for execution is called state objects in DXR. At a high level, a ray tracing pipeline state object can be seen as a binary executable resulting from a link step across all the shaders compiled for a scene. The relationship between different shaders is specified at state object creation. For example, triplets of intersection, any hit, and closest hit shaders are bundled into hit groups. The application specifies the pipeline state object to be executed when it calls DispatchRays() on a command list. An application can create any number of pipeline state objects it wants, and it can re-use precompiled shaders to do so.

Acceleration Structures

Ray tracing requires spatial search data structures to efficiently compute intersections of rays with scene geometry. The application builds these data structures explicitly using the new command list method BuildRaytracingAccelerationStructure(). NVIDIA RTX contains carefully optimized construction algorithms that produce high quality results at blazing speed, making it possible to build and update these structures in real time. An application can further optimize acceleration structures for different types of content, such as static vs. animated.

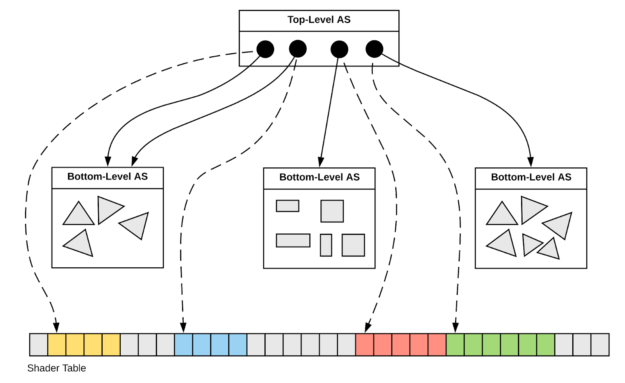

All the geometry in a scene is represented by two levels of acceleration structures. Bottom-level acceleration structures are built from geometric primitives, such as triangles. The input primitives for these builds are specified through one or more geometry descriptors. A geometry descriptor includes a vertex and an index buffer, making its granularity roughly comparable to a draw call in rasterization.

Top-level acceleration structures in turn are built from references to bottom-level structures. We call these references instance descriptors. Each instance descriptor also includes a transformation matrix to position it in the scene, and an offset into the shader table to locate material information (see below). Notice that in our ray generation shader example earlier, the ‘scene’ parameter to TraceRay() was a top-level acceleration structure — it represents the “entry point” of the intersection search.

The two-level hierarchy defined by top and bottom level enables efficient rigid-body animation and instancing. Top-level acceleration structures are usually small enough to be constructed at very little cost.
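The C++ sketch below models why this split is cheap for instancing and rigid-body animation. It is a conceptual CPU model only: `BottomLevel` and `InstanceDesc` are hypothetical stand-ins (a real bottom-level structure is an opaque GPU buffer, not a vertex list), and the 3x4 row-major transform layout is an assumption of the example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical stand-in for a bottom-level structure: object-space geometry
// that is built once and then shared.
struct BottomLevel { std::vector<Vec3> vertices; };

// Hypothetical stand-in for an instance descriptor in the top-level structure.
struct InstanceDesc {
    float transform[3][4];            // object-to-world, row-major 3x4 (assumed layout)
    std::uint32_t shaderTableOffset;  // where this instance's material records start
    std::size_t bottomLevelIndex;     // which bottom-level structure is referenced
};

// Build an instance that places a bottom-level structure with a translation.
inline InstanceDesc makeInstance(std::size_t bottomLevelIndex, Vec3 translation,
                                 std::uint32_t shaderTableOffset) {
    return InstanceDesc{
        {{1.0f, 0.0f, 0.0f, translation.x},
         {0.0f, 1.0f, 0.0f, translation.y},
         {0.0f, 0.0f, 1.0f, translation.z}},
        shaderTableOffset,
        bottomLevelIndex};
}

// World-space position of one vertex as seen through a given instance.
inline Vec3 worldVertex(const std::vector<BottomLevel>& bottomLevels,
                        const InstanceDesc& inst, std::size_t vertex) {
    assert(inst.bottomLevelIndex < bottomLevels.size());
    const Vec3 p = bottomLevels[inst.bottomLevelIndex].vertices.at(vertex);
    const auto& m = inst.transform;
    return Vec3{m[0][0] * p.x + m[0][1] * p.y + m[0][2] * p.z + m[0][3],
                m[1][0] * p.x + m[1][1] * p.y + m[1][2] * p.z + m[1][3],
                m[2][0] * p.x + m[2][1] * p.y + m[2][2] * p.z + m[2][3]};
}
```

Two instances can reference the same `BottomLevel` with different transforms (instancing), and moving an object only means rewriting twelve floats in its instance descriptor and rebuilding the small top-level structure; the bottom-level data is untouched.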

Shader Tables

So far we’ve discussed ray tracing pipelines, which specify the shaders that exist in a scene, and acceleration structures, which specify the geometry. The shader table is the data structure that ties the two together. In other words, it defines which shader is associated with which object in the scene. In addition, it holds information about the resources accessed by each shader, such as textures, buffers, and constants.

At a high level, a shader table is simply a chunk of GPU memory entirely managed by the application. The application is responsible for allocating the resource, filling it with valid data, transferring it to the GPU, and correctly synchronizing it with ray tracing dispatches – just like any other GPU memory resource. The application is also free to maintain multiple shader tables, and, for example, multi-buffer them to update one copy while using another for rendering.

Layout

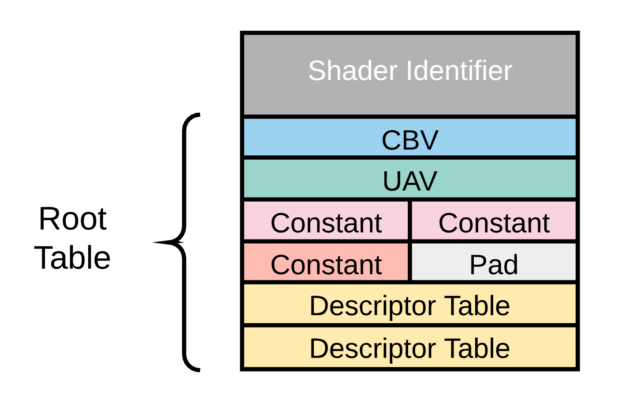

A shader table is an array of equal-sized records. Each record associates a shader (or a hit group) with a set of resources. Usually there exists one record per geometry object in the scene, so shader tables often have thousands of entries.

A record begins with an opaque shader identifier, which the application obtains by querying it from a compiled shader, followed by a root table containing the shader’s resources. The layout of the root table is defined by the shader’s local root signature. As usual, a root signature can contain any combination of constants, descriptor tables, and root descriptors. With ray tracing, however, the application directly accesses the root table memory (rather than using “setter” methods), which allows for very efficient updates. Since it’s “just memory”, a shader table can even be updated from a GPU shader!
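Because a shader table is plain memory, its layout can be sketched with ordinary byte copies. The C++ example below packs records into a CPU-side buffer. The 32-byte identifier size and 32-byte record alignment are taken from the released D3D12 headers (`D3D12_SHADER_IDENTIFIER_SIZE_IN_BYTES` and `D3D12_RAYTRACING_SHADER_RECORD_BYTE_ALIGNMENT`) and should be treated as assumptions here, since the experimental SDK described in this post may differ. `ShaderRecord`, `recordStride`, and `buildShaderTable` are hypothetical names, and uploading the buffer to the GPU is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Assumed values, matching the released D3D12 headers.
constexpr std::size_t kShaderIdentifierSize = 32;
constexpr std::size_t kRecordAlignment = 32;

constexpr std::size_t alignUp(std::size_t value, std::size_t alignment) {
    return (value + alignment - 1) / alignment * alignment;
}

// One record as the application sees it before packing.
struct ShaderRecord {
    std::uint8_t identifier[kShaderIdentifierSize];  // opaque, queried from the state object
    std::vector<std::uint8_t> rootArguments;         // laid out per the local root signature
};

// All records share one stride, sized for the largest root table.
inline std::size_t recordStride(std::size_t maxRootArgumentBytes) {
    return alignUp(kShaderIdentifierSize + maxRootArgumentBytes, kRecordAlignment);
}

// Pack records back to back: identifier first, then root arguments,
// zero padding up to the stride.
inline std::vector<std::uint8_t> buildShaderTable(
        const std::vector<ShaderRecord>& records, std::size_t stride) {
    std::vector<std::uint8_t> table(records.size() * stride, 0);
    for (std::size_t i = 0; i < records.size(); ++i) {
        const ShaderRecord& r = records[i];
        assert(kShaderIdentifierSize + r.rootArguments.size() <= stride);
        std::uint8_t* dst = table.data() + i * stride;
        std::memcpy(dst, r.identifier, kShaderIdentifierSize);
        if (!r.rootArguments.empty()) {
            std::memcpy(dst + kShaderIdentifierSize, r.rootArguments.data(),
                        r.rootArguments.size());
        }
    }
    return table;
}
```

Updating the resources of one object then amounts to overwriting the bytes after the identifier in that object's record.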

Indexing

Recall the shader table offsets we mentioned when building top-level acceleration structures from instance descriptors. Their purpose should now be clear: the system uses these offsets to locate the correct shader table record whenever TraceRay() finds an intersection. It can then bind the resources defined in the record and execute the appropriate intersection, any hit, or closest hit shader for the intersected geometry.
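The lookup can be written down as a small address computation. The C++ sketch below follows the indexing formula from Microsoft's released DXR specification, which may differ in detail from the experimental version described here, so treat it as an assumption. It combines the "hit group offset" and "hit group index multiplier" arguments of TraceRay() with the per-instance offset; `HitGroupTable` and `hitGroupRecordAddress` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Where the hit group records live and how far apart they are.
struct HitGroupTable {
    std::uint64_t startAddress;
    std::uint64_t strideInBytes;
};

// Address of the record used for a hit, assuming the released DXR formula:
//   index = rayContribution
//         + geometryMultiplier * geometryIndex
//         + instanceContribution
inline std::uint64_t hitGroupRecordAddress(
        const HitGroupTable& table,
        std::uint32_t rayContribution,       // TraceRay() "hit group offset"
        std::uint32_t geometryMultiplier,    // TraceRay() "hit group index multiplier"
        std::uint32_t geometryIndex,         // geometry's position in its bottom-level structure
        std::uint32_t instanceContribution)  // offset stored in the instance descriptor
{
    assert(table.strideInBytes > 0);
    const std::uint64_t index =
        static_cast<std::uint64_t>(rayContribution) +
        static_cast<std::uint64_t>(geometryMultiplier) * geometryIndex +
        instanceContribution;
    return table.startAddress + table.strideInBytes * index;
}
```

For example, with a 64-byte stride, a table starting at `0x1000`, an instance offset of 10, and the third geometry (index 2) of that instance, the arguments used in the ray generation example above (offset 0, multiplier 1) select record 12 at address `0x1300`. One common use of the ray offset and multiplier is to give each geometry several consecutive records, one per ray type.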

Further Reading

This concludes our quick tour through the DirectX Raytracing API. In the space of a blog post, we could really only scratch the surface. As a next step, you may want to start browsing some sample code, or dive in all the way and digest Microsoft’s API specification. You can begin with the Microsoft blog post about DXR. The company’s DXR developer support forum has transitioned to the DirectX Discord channel. More information regarding NVIDIA’s RTX ray-tracing technology can be found at the NVIDIA GameWorks Ray Tracing page.

Setting up for development

In order to get started with developing DirectX Raytracing applications accelerated by RTX, you’ll need the following:

- NVIDIA Volta GPU

- NVIDIA driver version 396 or higher

- Windows 10 RS4

- Microsoft’s DXR developer package, consisting of DXR-enabled D3D runtimes, HLSL compiler, and headers

- A complete set of denoisers will be available in the upcoming release of GameWorks for Ray Tracing

To complete the setup, we also recommend NVIDIA Nsight Graphics, which was announced at GDC 2018 alongside RTX and comes with first-class DXR support.

Note that DXR is an experimental feature of DirectX as of Windows 10 RS4, and is targeted at developers only. This means that developer mode must be enabled in Windows in order to run DXR applications (Settings → Update & Security → For developers).

Tracing a Path to the Future

Check out NVIDIA’s DXR tutorials on GitHub, which include sample code and full documentation. You’ll also be able to explore DXR using NVIDIA’s rendering prototyping framework, Falcor, with an upcoming update.

DXR represents a key step towards the broad use of ray tracing. Building the capability into DirectX will enable a wider swath of developers to experiment with a technology previously the purview of high-end content creation applications. Today DXR remains a developer feature, but as more graphics programmers experiment with ray tracing, the likelihood of DXR achieving mainstream status will increase. Companies including Epic, Remedy, and Electronic Arts have already begun experimenting with adding real-time ray tracing capabilities to their game engines.

EA Project PICA real-time ray tracing demo running on NVIDIA RTX