The Institute for Cyber-Enabled Research (iCER) at Michigan State University recently unveiled Laconia, a new $3 million supercomputer with double the speed of its previous supercomputer. Built with Lenovo as the system OEM and powered by 200 NVIDIA Tesla K80 GPUs, Laconia ranks among the TOP500 fastest computers in the world and is projected to be among the top 6% most energy-efficient supercomputers.

The new supercomputer will be used by Michigan State University researchers for a variety of projects, from running molecular simulations for new drug designs to developing technology for human recognition.

To the Point: A Spartan Tribute

iCER named Laconia after a region of Greece that was home to the original Spartans, the mascot of Michigan State University. It’s also the origin of the English word laconic, which means concise or succinct in speech. iCER thought this was fitting because when you’re working hard, you don’t have time for idle chat. People who have been described as laconic include Socrates, Abraham Lincoln and Albert Einstein: pretty good company!

High Performance in High Demand at MSU

Michigan State University (MSU) is a Research 1 university with a burgeoning computational sciences focus, which has greatly increased demand on its existing computational resources. Researchers at MSU tackle biological, chemical, materials, engineering, and digital humanities problems, among others, which reinforces the need for general-purpose computational resources. The new machine doubles MSU’s existing capabilities, and in its first days it was running at 99% utilization, demonstrating the high level of demand for computing at MSU.

The new machine cost nearly $3M, paid for with university and federal research funds as appropriate. Eventually, a large portion of the machine will be sold through a buy-in program, making it largely supported by the user community. The machine is part of the Institute for Cyber-Enabled Research (iCER), whose staff of about 20 people will support it through system administration or by supporting the researchers themselves.

Laconia has 320 nodes in seven racks, each node equipped with two Intel Xeon E5-2680v4 2.4GHz 14-core CPUs and 128 gigabytes of RAM. The cluster also has 50 Lenovo NeXtScale nx360 server nodes with four NVIDIA K80 GPUs each, for a total of 200 dual-GPU accelerators in Laconia. Users have access to 240 GB of solid-state disk local scratch storage, and the nodes are connected with InfiniBand EDR/FDR networking.

The machine delivers 535.9 teraFLOP/s of performance (Linpack Rmax) and ranked #205 on the TOP500 supercomputer list (announced on June 20, 2016, at ISC 2016). It is also expected to rank #30 on the Green500 list.
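The hardware and ranking figures above can be cross-checked with simple arithmetic. The short Python sketch below is only an illustration: the constant and function names are made up for this example, and it assumes that the core and memory totals are counted over the 320 nodes alone, since the article does not say whether the 50 GPU nodes are included in that number.

```python
# Figures quoted in the article; the names are illustrative only.
NODES = 320
CPUS_PER_NODE = 2
CORES_PER_CPU = 14
RAM_PER_NODE_GB = 128
GPU_NODES = 50
K80_BOARDS_PER_GPU_NODE = 4
GPUS_PER_K80_BOARD = 2   # each Tesla K80 board carries two GPUs
TOP500_RANK = 205
GREEN500_RANK = 30
LIST_SIZE = 500          # both lists contain 500 systems


def cluster_summary():
    """Derive totals and list percentiles from the quoted figures."""
    k80_boards = GPU_NODES * K80_BOARDS_PER_GPU_NODE
    return {
        # Assumption: counted over the 320 nodes only.
        "cpu_cores": NODES * CPUS_PER_NODE * CORES_PER_CPU,
        "ram_gb": NODES * RAM_PER_NODE_GB,
        "k80_boards": k80_boards,
        "gpu_chips": k80_boards * GPUS_PER_K80_BOARD,
        "top500_percentile": 100 * TOP500_RANK / LIST_SIZE,
        "green500_percentile": 100 * GREEN500_RANK / LIST_SIZE,
    }


if __name__ == "__main__":
    for name, value in cluster_summary().items():
        print(f"{name}: {value}")
```

Under these assumptions, the 200 K80 boards match the article’s total, and a Green500 rank of 30 out of 500 reproduces the “top 6%” energy-efficiency figure.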

Applications of the Laconia Supercomputer

Laconia will be used mostly by local researchers at MSU. The following sections detail some example uses.

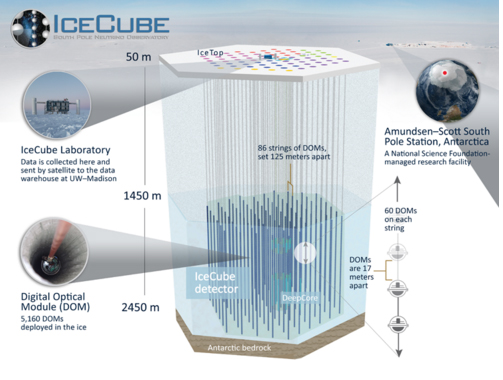

Using GPUs to Detect Neutrinos at IceCube Neutrino Laboratory in Antarctica

Tyce DeYoung, Associate Professor in the Michigan State University Department of Physics and Astronomy, is deputy spokesperson for the IceCube Collaboration, an interdisciplinary project touching on both astrophysics and fundamental particle physics.

On the astrophysical side, it has been known for over a century that the Earth’s atmosphere is constantly bombarded by cosmic rays: protons and atomic nuclei coming from somewhere out in space. We still don’t know their sources, but these cosmic ray particles are somehow accelerated to energies more than ten million times higher than the world’s most powerful particle accelerator—the Large Hadron Collider in Geneva—can manage. Researchers expect that neutrinos detectable by IceCube should also be produced in the sources of the cosmic rays, whatever they are, and they hope the neutrinos IceCube detects will help identify these sources and probe the physics taking place in those environments, which are far more powerful than anything that can be replicated here on Earth.

IceCube is also trying to understand neutrinos themselves better. Neutrinos are fundamental particles, like electrons or quarks, but their properties are very unlike anything else we know. They are more than one million times less massive than any other particle—it’s unknown exactly how much lighter they are because nobody has yet been able to measure a mass that small. They don’t interact with other matter via either electromagnetic or strong nuclear forces, which is why they’ve been called “ghost particles”—most of them will happily pass right through a planet without interacting with it at all. We don’t understand why neutrinos have such odd properties, or whether they might be related to other things we can’t explain, such as why the universe is made of matter rather than antimatter, why all the particles we know about come in triplets, and how fundamental forces like electromagnetism and gravity are related.

The fundamental challenge in neutrino physics is detecting enough of them. Neutrinos usually pass through matter without interacting with it, and that includes IceCube’s detectors. Huge detectors are needed to catch the rare neutrinos that do interact with other matter. IceCube takes that to the limit by monitoring a cubic kilometer of the Antarctic ice cap (one billion tons of ultrapure ice) to watch for neutrino interactions.

IceCube is designed to watch as much ice as possible with the 5,100 sensors it has available, which means that the sensors are spaced tens or hundreds of meters apart. That means IceCube doesn’t record very much information about any single event, so the project has to make the absolute best use of every bit of data recorded. IceCube must model every one of the tens of millions of photons emitted during a neutrino event since it doesn’t know which hundred or thousand of them it might detect. This modeling must be done very quickly, since there are several thousand other particles passing through the detector every second, or about one million times more than the neutrinos of interest. This isn’t done in real-time, but it gives an idea of the scale of the problem.

Michigan State University is one of 48 U.S. institutions participating in IceCube, and its science relies heavily on GPU-accelerated software running on high-performance computing clusters at MSU and around the world. IceCube’s photon tracking software is all GPU-accelerated, making it almost two orders of magnitude faster than it would be running on CPUs. Using GPUs is the only way researchers have been able to do the tracking directly, rather than resorting to numerical approximations that would degrade their ability to understand the detected neutrino events.

IceCube also uses GPUs to model the passage of low-energy neutrinos through the Earth, where some rather complicated quantum mechanical phase effects can cause the neutrinos to change type (called the neutrinos’ “flavor”). Measuring the details of these flavor changes can provide information about neutrino properties like the differences between their masses, in a way that’s analogous to using a tuning fork to measure how far out of tune a piano string is. This is another significant computational challenge; the code runs about 75 times faster on GPUs than it does on CPUs.
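To see why flavor changes carry information about mass differences, it helps to look at the textbook two-flavor vacuum approximation, in which the probability of a flavor change depends on the ratio of distance traveled to energy and on the difference of the squared masses. The Python sketch below is not IceCube’s code, which must handle three flavors and the effects of passing through matter. The function names and the default parameter values (a mass-squared difference of 2.5e-3 eV² and maximal mixing) are illustrative assumptions, not figures from the article.

```python
import math

EARTH_DIAMETER_KM = 12742.0  # approximate path length straight through the Earth


def oscillation_probability(l_km, e_gev, delta_m2_ev2=2.5e-3, sin2_2theta=1.0):
    """Two-flavor vacuum probability that a neutrino has changed flavor.

    l_km: distance traveled in km; e_gev: neutrino energy in GeV.
    delta_m2_ev2 and sin2_2theta are assumed, illustrative values.
    """
    phase = 1.267 * delta_m2_ev2 * l_km / e_gev
    return sin2_2theta * math.sin(phase) ** 2


def first_maximum_energy_gev(l_km, delta_m2_ev2=2.5e-3):
    """Energy at which the oscillation phase equals pi/2 for this distance."""
    return 1.267 * delta_m2_ev2 * l_km / (math.pi / 2)


if __name__ == "__main__":
    e_max = first_maximum_energy_gev(EARTH_DIAMETER_KM)
    print(f"first oscillation maximum near {e_max:.1f} GeV")
    for energy in (10.0, e_max, 50.0, 100.0):
        p = oscillation_probability(EARTH_DIAMETER_KM, energy)
        print(f"E = {energy:6.1f} GeV -> P(flavor change) = {p:.3f}")
```

With these assumed values, the first oscillation maximum for a neutrino crossing the full diameter of the Earth falls at about 25.7 GeV. Shifting the assumed mass-squared difference shifts that energy in proportion, which is the sense in which the measured pattern of flavor changes constrains the mass differences.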

In 2013, the IceCube Collaboration announced the discovery of a flux of very high-energy neutrinos, presumably associated with the sources of high-energy cosmic rays. Researchers are currently working on identifying the specific sources of these neutrinos. They have ruled out one class of objects—exploding giant stars—that many people had thought produced the highest energy cosmic rays. The data is also starting to disfavor another popular theory—supermassive black holes at the centers of galaxies—but the jury’s still out on that one.

IceCube is also making progress studying neutrino properties: a recently submitted paper reports no evidence of the long-conjectured “sterile” neutrinos, and the MSU group hopes to have another major paper, measuring parameters related to neutrino masses and flavor oscillations, later this year.

According to Professor DeYoung, “This research will give us a bit more about the universe we live in, and historically, the better we have understood our universe, the more able we have been to provide a comfortable and healthy life for ourselves. Ben Franklin didn’t envision electric lights, much less computers, when he was flying his kite, and Albert Einstein wasn’t thinking about fiber-optic communications when he was working out the quantum mechanics underlying lasers. I suspect that almost every element of modern technology can be traced back to this sort of purely curiosity-based inquiry eventually. A hundred years from now, what we learn from research like this may play a key role in some sort of technology we can’t even imagine today.”

Using GPUs in Biometrics: Developing the Technology for Human Recognition

Automated human recognition through distinctive anatomical and behavioral traits is a growing area of research called biometrics. This technology can be used to reduce identity theft, improve homeland security, identify suspects, and prevent financial fraud.

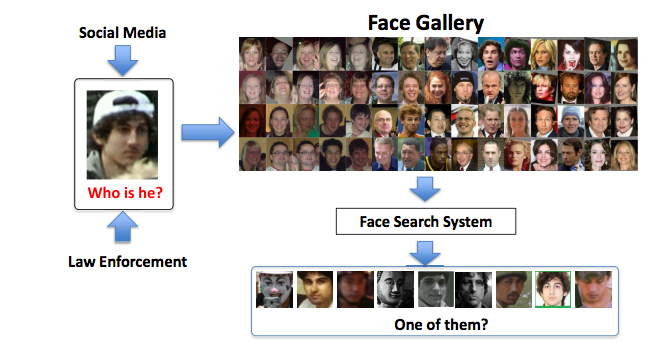

Emerging applications in face and fingerprint recognition, such as identification tasks involving tens of millions of identities (homeland security, national ID, law enforcement applications) and more difficult capture scenarios (surveillance imagery), have motivated the application of deep learning methods. The success of deep learning methods in general has been driven by the availability of large training datasets and the computational power afforded by modern GPUs.

University Distinguished Professor Anil Jain in the Michigan State University Department of Computer Science & Engineering, along with his Ph.D. student Charles Otto, uses deep learning methods to develop face representations for large-scale search and clustering applications. Using the GPUs at MSU’s High Performance Computing Center, a network with 10 convolutional layers and millions of parameters can be trained in just two days, resulting in an effective representation for challenging face recognition applications. In a face search experiment involving photos of the Tsarnaev brothers, who carried out the Boston Marathon bombing, the proposed cascade face search system found the photo of Dzhokhar Tsarnaev, the younger brother, at rank 1 in one second on a 5-million-image gallery and at rank 8 in seven seconds on an 80-million-image gallery.
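The article does not describe how the cascade works internally, but the general idea of a cascaded search is easy to sketch: a cheap comparison narrows a huge gallery to a short list, and a more expensive comparison re-ranks only that short list. The Python example below is a generic illustration under stated assumptions, not the MSU system. Plain lists of numbers stand in for learned face features, the cheap stage simply compares the first few feature dimensions, and every name and parameter is hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cascade_search(probe, gallery, shortlist_size=100, coarse_dims=8, top_k=10):
    """Two-stage search over a gallery of (identity, feature_vector) pairs.

    Stage 1 (assumed): rank every gallery entry using only the first
    `coarse_dims` feature dimensions and keep the best `shortlist_size`.
    Stage 2: re-rank the shortlist with the full feature vectors.
    Returns up to `top_k` (identity, similarity) pairs, best first.
    """
    coarse_probe = probe[:coarse_dims]
    shortlist = sorted(
        gallery,
        key=lambda item: cosine(coarse_probe, item[1][:coarse_dims]),
        reverse=True,
    )[:shortlist_size]
    reranked = sorted(
        ((identity, cosine(probe, features)) for identity, features in shortlist),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return reranked[:top_k]
```

The design trade-off is between speed and recall: a smaller shortlist makes the second stage faster, but a true match that the cheap stage ranks poorly never reaches the second stage at all.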

Using GPUs to Improve Drug Efficiency

From anti-cancer therapeutics to novel antibiotics, the world is in constant need of new drugs to treat common and rare diseases. These drugs can dramatically improve the quality of life around the globe. A key piece of the design process is a physical model of the drug binding to its intended target. This model allows researchers to predict how making small changes to the drug can improve its potency and selectivity.

But the targets, usually proteins, are not static. Each atom in the system is in constant motion, and these motions occur on a broad range of timescales, from femtoseconds (bond vibrations) to hours (drug residence times). Bridging this gap with molecular simulation cannot be done in a straightforward way. It requires new computational approaches like the ones being developed in the research group led by Alex Dickson, Assistant Professor in the Michigan State University Departments of Computational Mathematics, Science and Engineering and of Biochemistry & Molecular Biology.
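A rough calculation shows how wide the gap between femtoseconds and hours is. The Python sketch below assumes a 2-femtosecond integration step and a throughput of 100 nanoseconds of simulated time per day of computing. Both numbers are illustrative assumptions chosen for this example rather than figures from the article, and the function names are hypothetical.

```python
FEMTOSECOND_S = 1e-15
NANOSECOND_S = 1e-9


def steps_required(simulated_seconds, timestep_fs=2.0):
    """Number of integration steps needed to cover the simulated time."""
    return simulated_seconds / (timestep_fs * FEMTOSECOND_S)


def wall_clock_days(simulated_seconds, ns_per_day=100.0):
    """Days of computing needed at an assumed throughput in ns per day."""
    return (simulated_seconds / NANOSECOND_S) / ns_per_day


if __name__ == "__main__":
    for label, seconds in (("1 microsecond", 1e-6), ("1 second", 1.0), ("1 hour", 3600.0)):
        steps = steps_required(seconds)
        days = wall_clock_days(seconds)
        print(f"{label:>13}: {steps:.1e} steps, {days:.1e} days of computing")
```

Under these assumptions, a single hour-long residence time would take on the order of 10^18 steps and tens of billions of days of computing in one continuous simulation, which is why brute force is not an option.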

GPUs have changed the game by significantly lowering the cost of molecular simulation. Microsecond-timescale simulations are now routine, and moderately sized GPU clusters can collect hundreds of microseconds of simulation data by running many simulations in parallel; the results can then be synthesized into a single statistical model that describes drug binding and release. With the sampling methods they are developing, Dickson’s group aims to push this even further, to simulate unbinding transitions that occur extremely rarely on molecular timescales but are vitally relevant to drug design.
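The article does not say which statistical model is used. One common way to combine many short, independent simulations is a Markov state model, in which each simulation frame is assigned to a discrete state and transitions between states are counted across all runs. The Python sketch below illustrates only that counting step, as an assumed example rather than the Dickson group’s method. The three states (0 for bound, 1 for intermediate, 2 for unbound) and the toy trajectories are invented for illustration.

```python
def transition_matrix(trajectories, n_states, lag=1):
    """Estimate state-to-state transition probabilities from many runs.

    trajectories: list of sequences of integer state labels, one per run.
    lag: number of frames between the 'from' and 'to' observation.
    Rows with no observed transitions are left as all zeros.
    """
    counts = [[0] * n_states for _ in range(n_states)]
    for traj in trajectories:
        # Pair each frame with the frame `lag` steps later in the same run.
        for start, end in zip(traj[:-lag], traj[lag:]):
            counts[start][end] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


if __name__ == "__main__":
    # Toy data: three short, independent runs (0 bound, 1 intermediate, 2 unbound).
    runs = [
        [0, 0, 1, 0, 0],
        [0, 1, 1, 2, 2],
        [1, 2, 2, 2],
    ]
    for row in transition_matrix(runs, n_states=3):
        print([round(p, 2) for p in row])
```

Because transitions are pooled across runs, no single simulation has to observe a complete unbinding event; each run only needs to sample part of the pathway.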

Dickson expects that as methods continue to develop, simulations of drug binding will become widely used both in academia and the pharmaceutical industry, contributing to the rational design of new drug therapies. These simulations also open up a whole new world of dynamic structural information that can be used to identify new binding sites that can be targeted with new molecules. According to Professor Dickson, “We are only beginning to understand the physics behind the binding of proteins to small molecules, which has roots that are billions of years old, and has a profound impact on the future of our species.”