Our Spotlight is on Dr. Ren Wu, a distinguished scientist at Baidu’s Institute of Deep Learning (IDL).

He is known for his pioneering research in using GPUs to accelerate big data analytics and his contribution to large-scale clustering algorithms via the GPU. Ren was a speaker at GTC14 and was originally featured as a CUDA Spotlight in 2011 when he worked at HP Labs.

[Editor’s note: On May 16, Baidu announced the hiring of Dr. Andrew Ng to lead Baidu’s Silicon Valley Research Lab.]

______________________________________________________________________________

NVIDIA: Ren, why is GPU computing important to your work?

Ren: A key factor in the progress we are making with deep learning is that we now have much greater computing resources in our hands.

Today, one or two workstations with a few GPUs have the same computing power as the fastest supercomputer in the world had 15 years ago, thanks to GPU computing and NVIDIA’s vision.

One of the critical steps for any machine learning algorithm is a step called “feature extraction,” which simply means figuring out a way to describe the data using a few metrics, or “features.”

Because only a few of these features are used to describe the data, they need to represent the most meaningful metrics.

These features have traditionally been hand-crafted by experts. What’s changed in the past few years is that researchers have discovered that when large, deep neural networks are trained on a vast set of training data, neurons emerge that look for the most salient features as a result of this computationally intensive training process.

The most surprising thing is that this method of extracting features by the brute force of massive training data actually outperforms the approach of using hand-crafted features, which has been a major paradigm shift for the community.

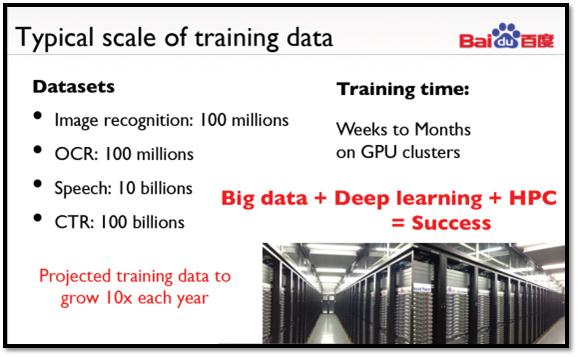

Of course, the computational cost of training on such large training sets naturally leads people to use GPUs.

I am focused on bringing in even more computing power and designing new deep learning algorithms to better utilize the computing power. We are expecting to see higher levels of intelligence and even a new kind of intelligence. We are pushing the envelope.

NVIDIA: Which CUDA features and libraries do you use?

Ren: We are currently using CUDA 5 and testing CUDA 6. CUDA as a programming model has become very mature over the years and is perfect for training deep neural networks, especially for large models.

We use many advanced features offered by CUDA as well as the libraries that come with it. For example, the cuBLAS library from NVIDIA is key for deep-learning-based applications, since many algorithms can be mapped very nicely onto dense matrix operations. We also use the Thrust template library, especially when we do fast prototyping. Of course, we have also written our own CUDA code when necessary.
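[Editor’s note: To illustrate the mapping Ren describes, the sketch below writes the forward pass of one fully connected neural-network layer as a single dense matrix product, the kind of operation a GEMM routine in a library such as cuBLAS computes. It is a plain-Python illustration, not Baidu’s code; the function names, the ReLU activation and the toy data are all assumptions made for this example.]

```python
# Illustrative only: a fully connected layer's forward pass written as one
# dense matrix product. Names, activation and data are hypothetical.

def matmul(a, b):
    """Return the dense matrix product of a (m x k) and b (k x n)."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    n = len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)]
            for row in a]


def dense_forward(inputs, weights, bias):
    """Compute relu(inputs @ weights + bias) for a whole batch at once."""
    product = matmul(inputs, weights)
    return [[max(0.0, value + bias[j]) for j, value in enumerate(row)]
            for row in product]


# Toy batch: 2 examples with 3 features each, feeding 2 output units.
inputs = [[1.0, 2.0, 3.0],
          [0.0, -1.0, 1.0]]
weights = [[0.5, -1.0],
           [0.0, 1.0],
           [1.0, 0.0]]
bias = [0.5, -2.0]

outputs = dense_forward(inputs, weights, bias)
print(outputs)
```

Because the whole batch goes through one matrix product, nearly all of the arithmetic sits in `matmul`, which is the part a tuned GPU library would take over in a real system.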

NVIDIA: What challenges are you tackling?

Ren: We are very good at designing parallel algorithms that take full advantage of the GPU hardware features. Still, we would like to reduce the training time by 10x, or be able to train 10x bigger models. We are working hard to keep up with our own ambitions.

NVIDIA: Tell us more about Baidu’s work in mobile image classification and recognition.

Ren: Typically, a deep neural network model, once it has been trained, will be deployed in data centers (or clouds), and the user’s requests will then be sent to the cloud, processed and returned to the user. The dog breed recognition example that Jen-Hsun showed in his GTC keynote is using this approach, based on research performed at Facebook.

At Baidu, we are taking this approach a ‘giant step’ further. We have trained similar deep neural network models to recognize general classes of objects, as well as different types of objects (for example, flowers, handbags and, of course, dogs).

The difference is that, in addition to being deployed in our data centers, our models can also be running inside my cell phone, and everything can be done in place without sending and retrieving data from the cloud.

The ability to run models in place without connectivity is very important in many scenarios — for example, when you travel to foreign countries or are in a remote place, where you need help but may not be connected.

Furthermore, the deep neural network engine works directly on the video stream, offering a true ‘point and know’ experience on your cell phone.

NVIDIA: How will machine learning affect the average consumer’s life?

Ren: As I said earlier, many deep-learning-powered products from Baidu are already online. These products are greatly improving the user experience of searching and acquiring information. In my GTC14 talk I highlighted and demonstrated our vision of information that is available on anything, at any place, at any time.

NVIDIA: What are you looking forward to in the next five years, in terms of technology advances?

Ren: I look forward to seeing the full potential of deep learning. On the one hand, much larger models will be trained using much larger datasets on much bigger computers. On the other hand, these trained deep neural networks will then be deployed in many different forms, from servers at data centers, to tablets and smartphones, to wearable devices and the Internet of Things.
