Turing GPUs introduce a new shading capability called Texture Space Shading (TSS), where shading values are dynamically computed and stored as texels in a texture space. Later, pixels in screen space are mapped into texture space, and the corresponding texels are sampled and filtered using a standard texture lookup operation. With this technology we can sample visibility and appearance at completely independent rates, and in separate (decoupled) coordinate systems. Using TSS, a developer can simultaneously improve quality and performance by (re)using shading computations done in a decoupled shading space.

Developers can use TSS to exploit both spatial and temporal rendering redundancy. By decoupling shading from the screen-space pixel grid, TSS can achieve a high level of frame-to-frame stability, because shading locations do not move between one frame and the next. This temporal stability is important to applications like VR that require greatly improved image quality, free of aliasing artifacts and temporal shimmer.

TSS has intrinsic multi-resolution flexibility, inherited from texture mapping’s MIP-map hierarchy, or image pyramid. When shading a pixel, the developer can adjust the mapping into texture space and choose which MIP level (level of detail) is selected, and so exert fine control over shading rate. Because texels at lower levels of detail (coarser MIP levels) are larger, each one covers a larger part of an object and possibly multiple pixels.
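
To make the relationship between MIP level and shading rate concrete, here is a minimal Python sketch. It is not Turing's actual LOD logic: the function names `mip_chain` and `select_lod` are hypothetical, the texture is assumed to be square with a power-of-two size, and LOD selection is reduced to the textbook `log2` of the pixel footprint plus a bias.

```python
import math


def mip_chain(base_size):
    """Return the edge length of every MIP level of a square power-of-two texture."""
    sizes = []
    size = base_size
    while size >= 1:
        sizes.append(size)
        size //= 2  # each level halves the resolution along both axes
    return sizes


def select_lod(texels_per_pixel, max_level, lod_bias=0.0):
    """Pick a MIP level from the pixel footprint, measured in level-0 texels per axis.

    Simplified, isotropic model (an assumption of this sketch).
    """
    lod = math.log2(max(texels_per_pixel, 1e-6)) + lod_bias
    return min(max(int(round(lod)), 0), max_level)


if __name__ == "__main__":
    sizes = mip_chain(8)
    for level, size in enumerate(sizes):
        print(f"level {level}: {size}x{size} = {size * size} texels")
    # A positive bias pushes the lookup to a coarser level, so fewer texels get shaded.
    print(select_lod(1.0, max_level=len(sizes) - 1, lod_bias=1.0))
```

Each step up the pyramid quarters the number of texels available to shade, which is why a small change in the selected level has a large effect on shading cost.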

TSS remembers which texels have been shaded and only shades those that have been newly requested. Texels shaded and recorded can be reused to service other shade requests in the same frame, in an adjacent scene, or in a subsequent frame. By controlling the shading rate and reusing previously shaded texels, a developer can manage frame rendering times, and stay within the fixed time budget of applications like VR and AR. Developers can use the same mechanisms to lower shading rate for phenomena that are known to be low frequency, like fog. The usefulness of remembering shading results extends to vertex and compute shaders, and general computations. The TSS infrastructure can be used to remember and reuse the results of any complex computation.
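
The "shade once, reuse many times" behavior can be modeled on the CPU as a simple memoization table. The sketch below is only an illustration of the idea, not the hardware or driver mechanism: the class `TexelCache`, its `request` method, and the `(level, x, y)` key layout are all assumptions made for this example.

```python
class TexelCache:
    """Remember shaded texels so that repeated requests do not reshade them."""

    def __init__(self, shade_texel):
        self._shade_texel = shade_texel  # callable (level, x, y) -> shaded value
        self._shaded = {}                # (level, x, y) -> stored shading result
        self.shade_calls = 0             # how many texels were actually shaded

    def request(self, level, x, y):
        key = (level, x, y)
        if key not in self._shaded:
            # Only newly requested texels pay the shading cost.
            self._shaded[key] = self._shade_texel(level, x, y)
            self.shade_calls += 1
        return self._shaded[key]


if __name__ == "__main__":
    cache = TexelCache(lambda level, x, y: (x + y) / (level + 1))
    for _frame in range(3):
        # The same texels are requested every frame; only the first frame shades them.
        for x in range(4):
            cache.request(0, x, 0)
    print(cache.shade_calls)
```

Because the cache outlives a single request, the same table can serve later requests in the same frame or in a subsequent frame. When stored results should be invalidated is a policy decision this sketch leaves out.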

The Mechanics of TSS

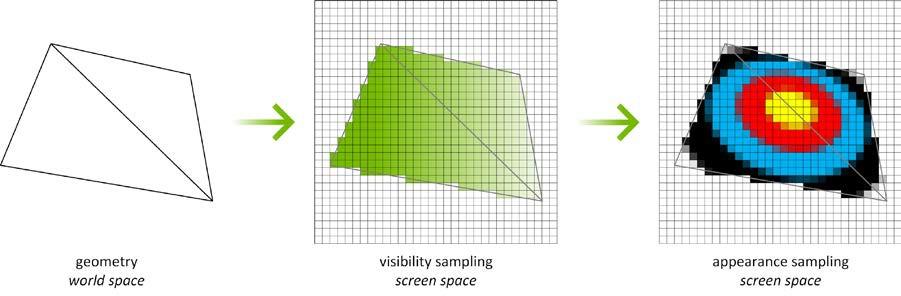

Figure 1 illustrates the traditional rasterization and shading process. A 3D scene is rasterized and converted to pixels in screen space. The pixels are tested for visibility, shaded for appearance, and depth-tested. The operations all take place on the same screen-space pixel grid, on the same pixel.

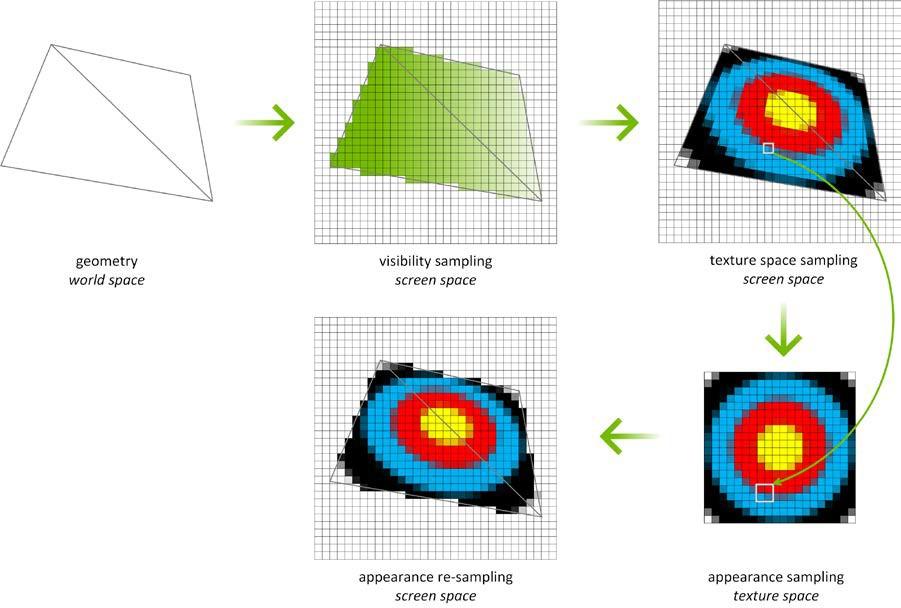

The two major operations of visibility sampling (rasterization and z-testing) and appearance sampling (shading) can be decoupled with TSS and performed at a different rate, on a different sampling grid, or even on a different timeline. The shading process is no longer tied directly to screen-space pixels, instead happening in texture space. In Figure 2, the geometry is still rasterized to produce screen-space pixels, and the visibility test still takes place in screen-space. However, instead of shading in screen-space, texels are found that are required to cover an output pixel.

In other words, we map the footprint of the screen-space pixel into a separate texture space and shade the associated texels there. The mapping to texture space is a standard texture mapping operation, with the same control over the LOD and over features like anisotropic filtering. To produce the final screen-space pixels, we sample from the shaded texture. The texture is created on demand based on sample requests, generating values only for texels that are referenced.
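
The flow described above (find the texels a pixel needs, shade only those, then do a filtered lookup) can be sketched in a few lines of Python. This is a simplified model under stated assumptions: a single MIP level, bilinear filtering with clamp-to-edge addressing instead of anisotropic filtering, and scalar shading values. The names `bilinear_taps` and `render_texture_space` are hypothetical.

```python
import math


def bilinear_taps(u, v, size):
    """Return [((x, y), weight), ...] for the four texels a bilinear lookup reads."""
    # Texel centers sit at (i + 0.5) / size, so shift by half a texel first.
    x = u * size - 0.5
    y = v * size - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    taps = []
    for dy, wy in ((0, 1.0 - fy), (1, fy)):
        for dx, wx in ((0, 1.0 - fx), (1, fx)):
            # Clamp-to-edge addressing keeps every tap inside the texture.
            tx = min(max(x0 + dx, 0), size - 1)
            ty = min(max(y0 + dy, 0), size - 1)
            taps.append(((tx, ty), wx * wy))
    return taps


def render_texture_space(visible_pixels, size, shade_texel):
    """visible_pixels maps pixel -> (u, v) for pixels that passed the visibility test."""
    # Pass 1: collect the texels referenced by any visible pixel's footprint.
    requested = set()
    for u, v in visible_pixels.values():
        for texel, _weight in bilinear_taps(u, v, size):
            requested.add(texel)
    # Pass 2: shade each requested texel exactly once, in texture space.
    shaded = {texel: shade_texel(texel) for texel in requested}
    # Pass 3: produce screen-space pixels with a filtered texture lookup.
    image = {}
    for pixel, (u, v) in visible_pixels.items():
        image[pixel] = sum(w * shaded[t] for t, w in bilinear_taps(u, v, size))
    return image, shaded


if __name__ == "__main__":
    pixels = {(0, 0): (0.25, 0.25), (1, 0): (0.30, 0.25)}
    image, shaded = render_texture_space(pixels, 4, lambda t: float(t[0]))
    print(len(shaded), "of", 4 * 4, "texels shaded")
```

Texels that no visible pixel references never appear in `shaded`, which is the on-demand property the text describes.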

One example use case for TSS is improving the efficiency of VR rendering, as shown in Figure 3. In VR, a stereo pair of images is rendered, with almost all of the elements visible in the left-eye view also showing up in the right-eye view. With TSS, we can shade the full left-eye view, and then render the right-eye view by sampling from the completed left-eye view. The right-eye view only has to shade new texels when no valid sample is found (for example, a background object that was obscured from the left-eye perspective but is visible to the right eye).
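
As a rough illustration of the stereo case, the sketch below counts how many texels each eye actually has to shade when both eyes draw from one shared store of shaded texels. Modeling the completed left-eye view as a shared dictionary is an assumption of this example, as are the function name `shade_stereo` and the texel coordinates used.

```python
def shade_stereo(left_requests, right_requests, shade_texel):
    """Shade the left eye fully, then shade only the texels the right eye is missing."""
    shaded = {}
    for texel in left_requests:
        if texel not in shaded:
            shaded[texel] = shade_texel(texel)
    left_count = len(shaded)
    for texel in right_requests:
        if texel not in shaded:
            # Only texels with no valid left-eye result are shaded again,
            # e.g. surfaces hidden from the left eye.
            shaded[texel] = shade_texel(texel)
    right_count = len(shaded) - left_count
    return shaded, left_count, right_count


if __name__ == "__main__":
    left = [(x, 0) for x in range(0, 8)]
    right = [(x, 0) for x in range(1, 9)]  # mostly the same texels, shifted by one
    _shaded, left_count, right_count = shade_stereo(left, right, lambda t: t[0] * 0.1)
    print(left_count, right_count)
```

The more the two views overlap in texture space, the closer the right eye's shading cost gets to zero.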

As mentioned, TSS lets the per-pixel shading rate be dynamically and continuously controlled by adjusting texture LOD. By varying LOD we can select different texture MIP levels as needed to reduce the number of texels shaded. This means the sampling approach of TSS can also implement many of the same shading rate reduction techniques that are supported by Turing’s Variable Rate Shading (VRS) feature. (We’ll have more details on VRS in a later post.) Which method is best depends on the developer’s objectives. VRS is a lighter-weight change to the rendering pipeline, while TSS has more flexibility and supports additional use cases.
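
One way to picture how an LOD adjustment can stand in for a coarse shading rate is the back-of-the-envelope model below. It assumes square N x N pixel blocks and an isotropic LOD bias, and it is not a description of how VRS or TSS is implemented. Both function names are hypothetical.

```python
import math


def lod_bias_for_block(block_size):
    """LOD bias at which one texel covers roughly a block_size x block_size pixel block."""
    if block_size < 1:
        raise ValueError("block_size must be at least 1")
    return math.log2(block_size)


def relative_shading_cost(lod_bias):
    """Fraction of texels shaded relative to bias 0 (each level halves both axes)."""
    return 4.0 ** (-lod_bias)


if __name__ == "__main__":
    for block in (1, 2, 4):
        bias = lod_bias_for_block(block)
        print(f"{block}x{block} block -> bias {bias}, cost {relative_shading_cost(bias)}")
```

Under this model a 2 x 2 block corresponds to a bias of one MIP level and a quarter of the shading work. Unlike a fixed set of block sizes, the bias can also take fractional values, which matches the "continuously controlled" wording above.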

Find Out More

The Turing GPU represents the most advanced GPU on the planet, and offers a host of new graphics, compute, and AI features. You can find out more about NVIDIA’s latest Turing GPU in the Turing architecture post. Mesh shaders represent another example of Turing’s advanced shader technology, introduced here. You can learn more about RTX and DirectX 12 ray tracing in this post. This blog post is an extract from the Turing Architecture White Paper. Download the full version if you want even more details on the Turing GPU and its feature set.

You can also view an in-depth video about Texture Space Shading given at the 2018 SIGGRAPH conference by Andre Tatarinov and Rahul Sathe, below.