Virtual reality displays continue to evolve and now include advanced configurations such as canted HMDs with non-coplanar displays. Other headsets offer ultra-wide fields of view and other novel configurations. NVIDIA Turing GPUs incorporate a new feature called Multi-View Rendering (MVR), which expands upon Single Pass Stereo by increasing the number of projection views rendered in a single pass from two to four. All four views available in a single pass are position-independent and can shift along any axis in projective space. By rendering from four projection centers, Multi-View Rendering can power canted HMDs (non-coplanar displays), enabling extremely wide fields of view and novel display configurations.

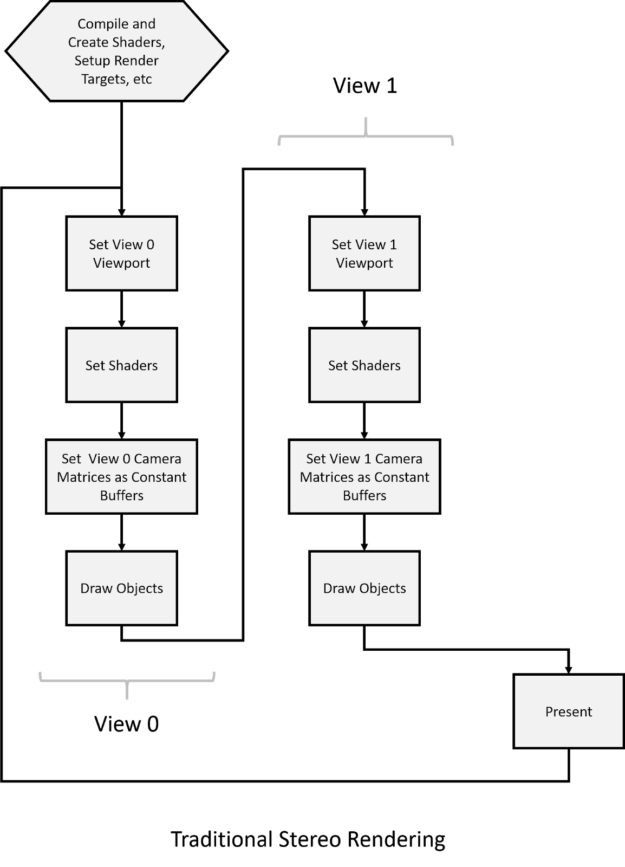

Mono vs Stereo Rendering

When a graphics application renders a 3D scene for a desktop monitor, it creates a virtual camera in the 3D space and performs computations on the geometry in the scene based on the camera position. The rendering engine then performs pixel shading and projects the single monoscopic frame on the display.

The graphics pipeline for Virtual Reality (VR) is fundamentally different, since multiple views need to be rendered. A typical VR headset has two lenses, each projecting a separate view onto the viewer's left or right eye. This means that the 3D application needs to perform stereoscopic rendering: two views of the same scene rendered from slightly offset camera positions.

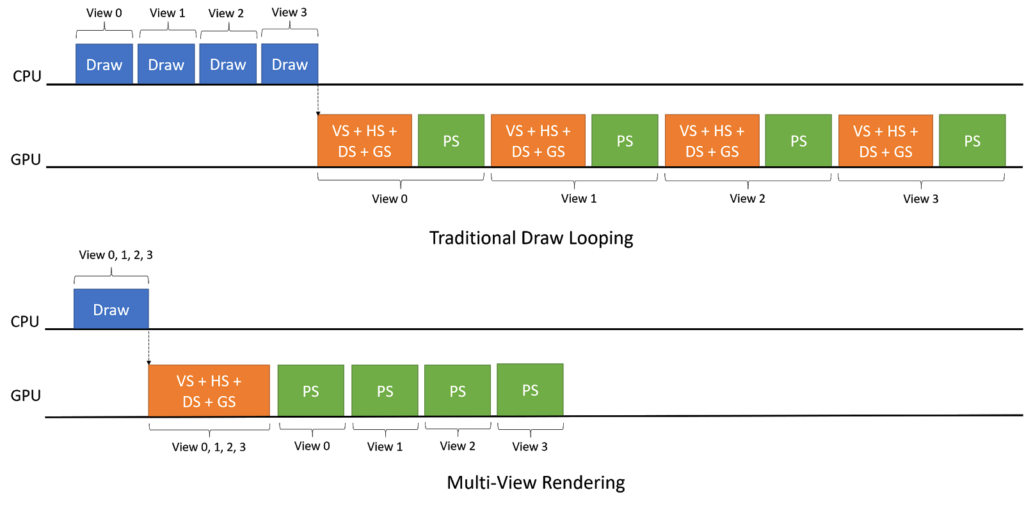

A common approach to stereo rendering simply performs the two draw operations in sequence before presenting the images to the headset. This brute-force approach leaves a lot of performance on the table, since much of the geometry processing and computation is duplicated across the two viewports. Because VR requires 90 frames per second (FPS) for smooth, immersive experiences, it already demands much more performance than desktop rendering, so any redundant CPU/GPU cycles only compound the challenge.
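As a quick worked figure, the 90 FPS target leaves roughly 11 ms per frame, and in the brute-force approach both eye views must be rendered within that single budget:

$$
t_{\text{frame}} = \frac{1}{90\ \text{FPS}} = \frac{1000\ \text{ms}}{90} \approx 11.1\ \text{ms}
$$

Any geometry work that is repeated for the second view consumes part of this fixed budget.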

Developers add more complex and detailed geometry in order to build more interesting and immersive experiences. The triangle count per frame has climbed from thousands up to tens of millions of triangles in recent years. While the increased geometric complexity improves realism and immersion, it taxes the GPU and makes the goal of meeting the 90 FPS performance bar more difficult. Although games try to optimize the geometry to manageable levels by means of polygon reduction algorithms and cooking mesh LODs, professional applications such as CAD/CAM software must render the utmost detail and the entire geometry to maintain engineering integrity and accuracy.

Prior to Turing, NVIDIA’s Pascal architecture introduced a technique called Single Pass Stereo (SPS) to help accelerate geometry processing for VR.

SPS lets the GPU draw at most two views in a single pass, and those views can differ only in the x-direction.

Headset Field of View

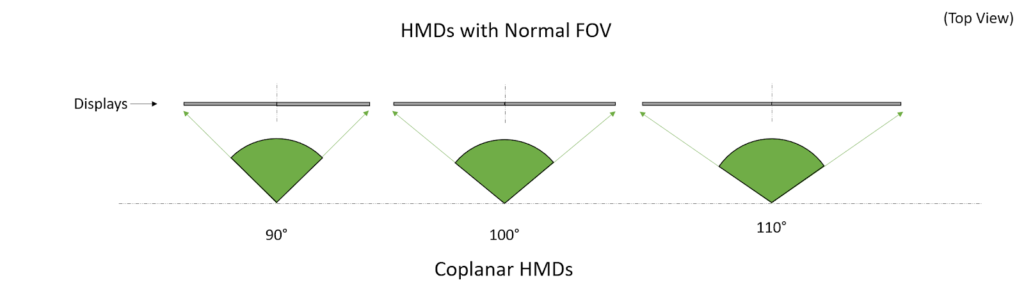

VR headset developers are pushing hard to increase the field of view (FOV) of headsets. The greater the FOV, the more immersive the experience. Increasing the nominal horizontal FOV has been the general trend, as figure 2 shows.

As the FOV increases, the coplanar display size rapidly increases, making the headset construction bulky and non-ergonomic. Planar displays start becoming difficult to manage at or above 110° FOV.
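The growth is easy to quantify with an idealized pinhole model: a flat plane centered at distance d in front of the eye must be 2·d·tan(FOV/2) wide to span a given horizontal FOV, and this width diverges as the FOV approaches 180°. The sketch below assumes that simple model and ignores the lens optics real headsets place between eye and panel; the function name planarWidth is hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Idealized pinhole model (lens optics ignored): width of a flat projection
// plane, centered at distance d in front of the eye, that spans a horizontal
// field of view of fovDeg degrees.
double planarWidth(double fovDeg, double d) {
    // A single flat plane can never reach 180 degrees: tan(90 deg) is infinite.
    assert(fovDeg > 0.0 && fovDeg < 180.0);
    const double kPi = 3.14159265358979323846;
    const double halfAngleRad = fovDeg * kPi / 360.0; // (fovDeg / 2) in radians
    return 2.0 * d * std::tan(halfAngleRad);
}
```

With d = 1, planarWidth returns 2.0 at 90°, about 2.86 at 110°, and about 22.9 at 170°, which is why a single flat panel per eye stops being practical well before 180°.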

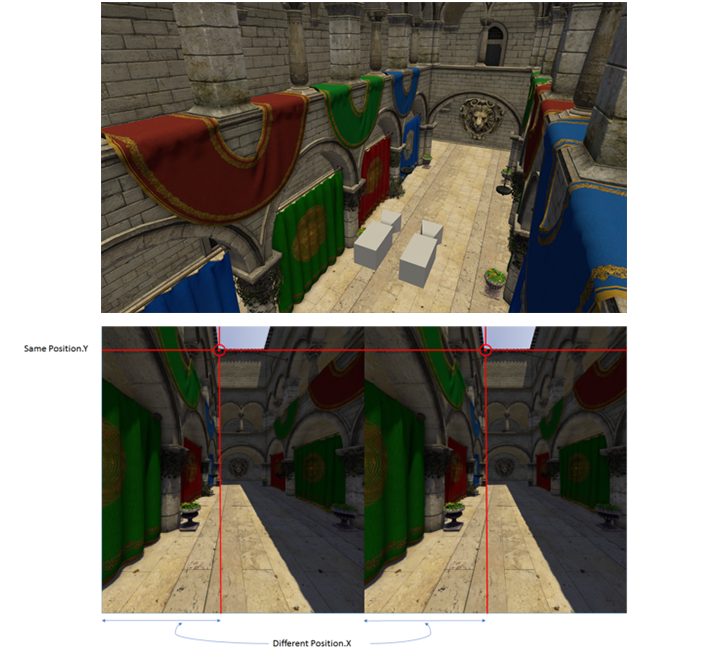

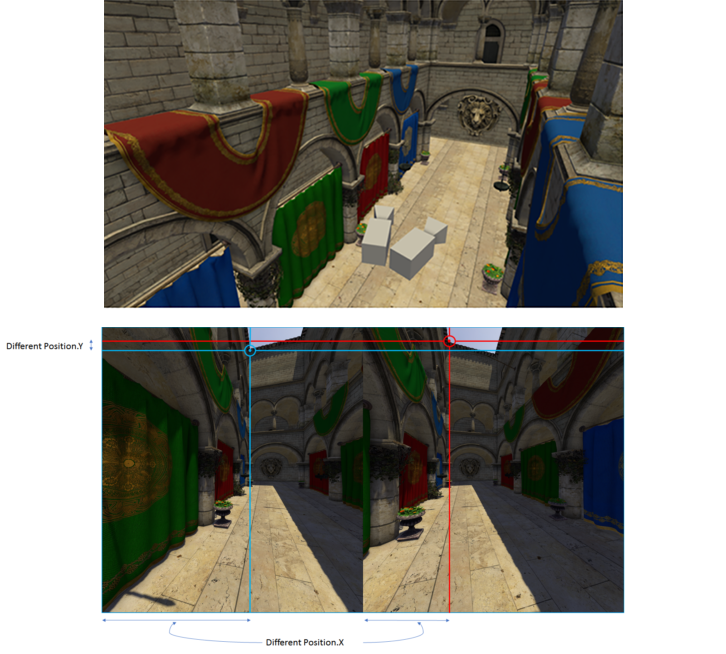

With standard (below 110°) FOVs, the cameras for the two eye views are parallel to each other. The screen-space output of this scenario differs only in the X component of the vertex positions, as shown in figure 3.

Pascal’s Single Pass Stereo (SPS) rendering technique worked perfectly for coplanar displays with limited FOVs.

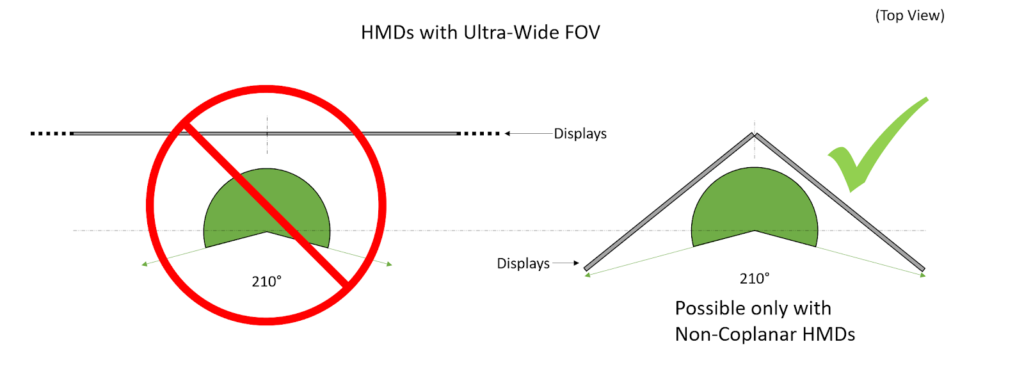

Headsets targeting FOVs of 180° or more enable more lifelike immersion in VR, since humans naturally notice objects and movement beyond 180°. Using coplanar displays to achieve these ultra-wide FOVs becomes impractical. Instead, a design with canted displays becomes necessary, as you can see in figure 4.

With canted displays, the cameras for the two eyes are no longer parallel. Because each camera is rotated, each view has its own perspective projection, so vertex positions differ between the views in both X and Y. The SPS technique developed for Pascal cannot be used in this viewport configuration. Figure 5 illustrates the problem.
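The difference between parallel and canted cameras can be checked numerically. The sketch below is a minimal pinhole model, assuming cameras that differ only by position and by yaw (rotation about the vertical Y axis) and a plain perspective divide with no projection matrix; the types Vec3, Vec2, Camera and the function project are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Vec2 { double x, y; };

// Hypothetical minimal pinhole camera: position plus yaw (rotation about the
// vertical Y axis). yaw = 0 looks down +Z; positive yaw turns toward +X.
struct Camera { Vec3 pos; double yawRad; };

// Transform a world-space point into the camera's view space, then apply a
// perspective divide to get normalized screen coordinates (x/z, y/z).
Vec2 project(const Camera& cam, const Vec3& p) {
    const double dx = p.x - cam.pos.x;
    const double dy = p.y - cam.pos.y;
    const double dz = p.z - cam.pos.z;
    const double c = std::cos(cam.yawRad);
    const double s = std::sin(cam.yawRad);
    // Rotate by -yaw about Y so the camera's forward axis becomes +Z.
    const double vx = dx * c - dz * s;
    const double vy = dy;
    const double vz = dx * s + dz * c;
    assert(vz > 0.0); // the point must lie in front of the camera
    return {vx / vz, vy / vz};
}
```

With eyes at x = ±0.032 and yaw 0, projecting the point (1, 1, 5) gives the same y (0.2) for both eyes and different x values. Turning the left camera to -20° and the right camera to +20° changes each camera's view-space depth differently, so the projected y values diverge (roughly 0.23 versus 0.20). That Y difference is exactly what a two-view, X-only scheme cannot express.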

Rendering with Up to 4 Views

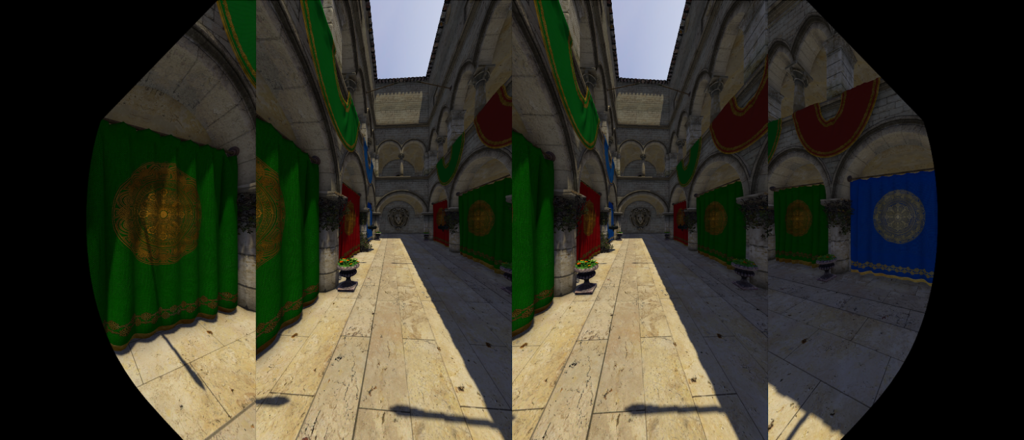

Two views with unique XYZW position components make it possible to render content for wide-FOV HMDs with canted displays. However, content at the periphery suffers from heavy perspective distortion and thus lacks visual detail. The solution is to split each of the two displays in the ultra-wide view into two further views. This way, every part of the scene can be rendered with good pixel density and retains detail even at the screen edges. One such rendered example can be seen in figure 6.

We can see the Left and Right views further split into Left-side and Right-side views, which cover the outer periphery and are rendered with cameras rotated farther toward the outer edges of the view field. Some geometry overlap between the split views (Left and Left-side, for example) is needed to warp and stitch the two split views seamlessly into a single ultra-wide view.
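A split like this can be planned by dividing the total horizontal FOV into equal angular slices and widening each slice slightly so neighbors overlap. The sketch below is a hypothetical planning helper, assuming an even partition and a single shared overlap angle per seam; it ignores the offset between the eyes and real headset geometry, and the names ViewSlice and splitFov are illustrative only.

```cpp
#include <cassert>
#include <vector>

// Hypothetical description of one rendered view: where its camera points
// (yaw in degrees, 0 = straight ahead) and how wide its frustum is.
struct ViewSlice { double yawDeg; double fovDeg; };

// Split a total horizontal FOV into `count` camera views of equal angular
// width. Each view is widened by `overlapDeg` (half on each side) so that
// neighboring views share a band of geometry for warping and stitching.
std::vector<ViewSlice> splitFov(double totalFovDeg, int count, double overlapDeg) {
    assert(count >= 1 && count <= 4); // MVR renders up to 4 views per pass
    const double slice = totalFovDeg / count;
    std::vector<ViewSlice> views;
    for (int i = 0; i < count; ++i) {
        const double yaw = -totalFovDeg / 2.0 + (i + 0.5) * slice;
        const double fov = slice + overlapDeg;
        assert(fov < 180.0); // each view still uses a planar projection
        views.push_back({yaw, fov});
    }
    return views;
}
```

For a 200° target split into 4 views with 10° of overlap, the cameras point at -75°, -25°, 25°, and 75°, and each view spans 60°, so adjacent views share a 10° band.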

Clearly, two views do not suffice for VR headsets with ultra-wide FOV.

Turing Multi-View Rendering

Multi-View Rendering, supported by NVIDIA's Turing GPUs, expands on Single Pass Stereo:

- MVR now supports rendering up to 4 views in a single pass

- MVR supports different XYZW components of the vertex positions of each view

- MVR allows generic attributes other than the vertex position to be view-dependent

MVR enables developers to use and extend the Simultaneous Multi-Projection algorithm for ultra-wide FOV headsets with canted displays, using up to 4 views.

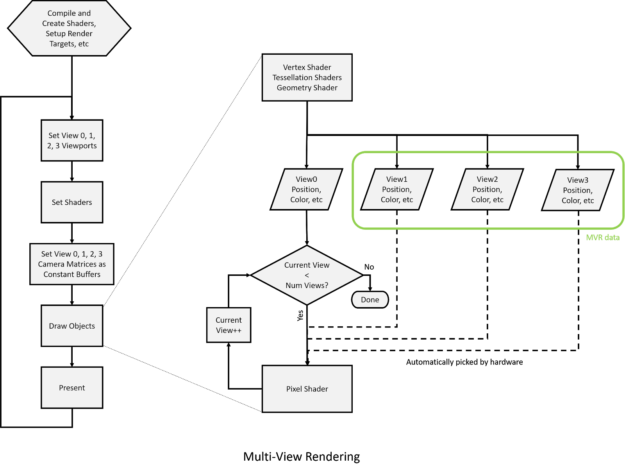

MVR allows a single invocation of the vertex, tessellation, and geometry shader stages to output the position and other generic attributes for all views. The hardware then replicates the rest of the pipeline, rasterization and pixel shading, for each view. This way, geometry processing common to all views does not need to be repeated, which results in more efficient rendering: the world-space shader stages hand off position and attributes per view, and the pixel shader runs once for each view.
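The execution pattern described above can be modeled on the CPU to make the invocation counts concrete. This is a conceptual sketch only, not NVIDIA's API or driver behavior: every name is hypothetical, a 1-D float stands in for a clip-space position, and the "back end" loop stands in for rasterization and pixel shading.

```cpp
#include <array>
#include <cassert>

// Conceptual CPU-side model of MVR's execution pattern (not NVIDIA's API).
constexpr int kMaxViews = 4;

// Output of one geometry-stage invocation: one position per view plus a
// view-independent attribute shared by all views.
struct VertexOut {
    std::array<float, kMaxViews> position{}; // 1-D stand-in for a clip-space position
    float texcoord = 0.0f;
};

struct DrawStats {
    long geometryInvocations = 0; // vertex/tessellation/geometry work
    long backEndRuns = 0;         // rasterization + pixel shading, once per view
    float positionSum = 0.0f;     // positions read by the back end (stand-in for pixel work)
};

// Hypothetical per-view transform: each view offsets the vertex differently.
float transformForView(float p, int view) { return p + 0.25f * static_cast<float>(view); }

// Single-pass draw: each vertex is processed once and writes every view's
// position; the back end then repeats once per view and reads only that
// view's copy, mirroring how the hardware selects the matching attribute.
DrawStats drawMultiView(int vertexCount, int views) {
    assert(views >= 1 && views <= kMaxViews);
    DrawStats stats;
    for (int i = 0; i < vertexCount; ++i) {
        const float p = static_cast<float>(i);
        VertexOut out;
        ++stats.geometryInvocations;
        for (int v = 0; v < views; ++v) out.position[v] = transformForView(p, v);
        out.texcoord = p; // computed once, shared by all views
        for (int v = 0; v < views; ++v) {
            ++stats.backEndRuns;
            stats.positionSum += out.position[v];
        }
    }
    return stats;
}
```

For 300 vertices and 4 views, the model reports 300 geometry invocations and 1200 back-end runs; rendering each view in its own pass would instead run the geometry stages 1200 times.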

MVR yields significant savings in both GPU and CPU cycles in geometry-bound rendering applications.

NVIDIA Multi-View Rendering API

The Multi-View Rendering API provides an interface for applications to render up to 4 views in a single draw call. Programming it involves specifying the number of views, allocating the corresponding render targets, and indicating which attributes, such as position, are view-dependent.

- Explicit custom semantic model: the app programs its shaders to specify view-specific values

- The app creates the shaders using NvAPI wrappers

- The app calls NvAPI to enable the MVR feature

- The hardware loops rasterization and pixel shading N times for N views

You can query feature support on the current hardware setup using NvAPI_D3D_QueryMultiViewSupport. If the feature is supported, enable it by calling NvAPI_D3D_SetMultiViewMode.

View-dependent Attributes

In HLSL, a semantic is a string appended to a variable passed between shader stages, for example float4 Position : SV_POSITION and float4 Color : COLOR.

SV_POSITION is a system semantic with a special meaning that is carried from HLSL through the DirectX shader assembly to the driver and hardware. COLOR in this case, however, is more like a generic semantic. Attributes declared with these semantics are used as the View 0 attributes.

As mentioned before, MVR allows any generic attribute to differ between the views being rendered. It is often important for attributes such as camera normals and motion vectors, along with the vertex position itself, to be unique per view. Attributes that need a unique value per view are called view-dependent attributes. To provide values for these view-dependent attributes for View 0, View 1, View 2, and View 3, declare variables whose semantic is the string provided to NvAPI with _VIEW_#_SEMANTIC appended, where # is the view ID. Once a view-dependent attribute is populated per view in a vertex, tessellation, and/or geometry shader, the pixel shader doesn't need to know which view is being rendered. The pixel shader simply reads the “view0” variables, and the hardware picks the right version of the attribute.
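The naming rule is mechanical, so it can be captured in a small helper when generating shader source or validating semantic names on the application side. The helper below is hypothetical and not part of NvAPI; it simply follows the base-string-plus-_VIEW_#_SEMANTIC pattern used in this article's HLSL example.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper (not part of NvAPI): builds the HLSL semantic for view
// `viewId` of an attribute whose base semantic string was registered through
// NvAPI, following the "<base>_VIEW_<id>_SEMANTIC" pattern.
std::string viewSemanticName(const std::string& base, int viewId) {
    assert(viewId >= 0 && viewId <= 3); // MVR supports views 0..3
    return base + "_VIEW_" + std::to_string(viewId) + "_SEMANTIC";
}
```

For example, viewSemanticName("NV_COLOR", 2) yields NV_COLOR_VIEW_2_SEMANTIC. Note that in the article's shader, the View 0 position keeps the ordinary SV_POSITION semantic rather than a generated name.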

```cpp
// Application programming to set up rendering to multiple views

// Compile the vertex shader with custom semantics
NvAPI_D3D11_CREATE_VERTEX_SHADER_EX CreateVertexShaderExArgs = {0};
...
CreateVertexShaderExArgs.NumCustomSemantics = 2;
NV_CUSTOM_SEMANTIC customSemantics[] =
{
    { NV_CUSTOM_SEMANTIC_VERSION, NV_POSITION_SEMANTIC, "NV_POSITION" },
    { NV_CUSTOM_SEMANTIC_VERSION, NV_GENERIC_SEMANTIC,  "NV_COLOR" },
};
CreateVertexShaderExArgs.pCustomSemantics = &customSemantics[0];
NvStatus = NvAPI_D3D11_CreateVertexShaderEx(..., &CreateVertexShaderExArgs, &pVertexShader);

// Similarly, populate custom semantic information for the other required
// shader types and create the appropriate shaders
NvStatus = NvAPI_D3D11_CreateHullShaderEx(...);
NvStatus = NvAPI_D3D11_CreateDomainShaderEx(...);
NvStatus = NvAPI_D3D11_CreateGeometryShaderEx_2(...);
NvStatus = NvAPI_D3D11_CreatePixelShaderEx_2(...);

while (...)
{
    ...
    NvAPI_D3D11_SetNViewMode(/* up to 4 views */);
    ...
    RenderFrame()
    {
        Loop(once) // single render pass for multiple views
        {
            Draw(); // this renders to all views
        }
    }
}
```

```hlsl
// Application shader programming to support multiple views

// Assumed declarations (not shown in the original snippet): per-view
// world-view-projection matrices supplied by the application, and colors.
cbuffer PerViewTransforms
{
    float4x4 VWP_0;
    float4x4 VWP_1;
    float4x4 VWP_2;
    float4x4 VWP_3;
};
static const float4 RED   = float4(1, 0, 0, 1);
static const float4 GREEN = float4(0, 1, 0, 1);
static const float4 BLUE  = float4(0, 0, 1, 1);
static const float4 WHITE = float4(1, 1, 1, 1);

struct VSOut
{
    float4 position_view0 : SV_POSITION;
    float4 position_view1 : NV_POSITION_VIEW_1_SEMANTIC;
    float4 position_view2 : NV_POSITION_VIEW_2_SEMANTIC;
    float4 position_view3 : NV_POSITION_VIEW_3_SEMANTIC;
    float4 color_view0    : NV_COLOR_VIEW_0_SEMANTIC;
    float4 color_view1    : NV_COLOR_VIEW_1_SEMANTIC;
    float4 color_view2    : NV_COLOR_VIEW_2_SEMANTIC;
    float4 color_view3    : NV_COLOR_VIEW_3_SEMANTIC;
    float2 tex            : TEXCOORD0;
};

VSOut VS(float4 position : POSITION, float2 tex : TEXCOORD)
{
    VSOut output = (VSOut)0;
    // mul() performs the vector-matrix product; '*' would be component-wise
    output.position_view0 = mul(position, VWP_0);
    output.position_view1 = mul(position, VWP_1);
    output.position_view2 = mul(position, VWP_2);
    output.position_view3 = mul(position, VWP_3);
    output.color_view0 = RED;
    output.color_view1 = GREEN;
    output.color_view2 = BLUE;
    output.color_view3 = WHITE;
    output.tex = tex; // view-independent attribute
    return output;
}

float4 PS(VSOut input) : SV_Target
{
    // Only access view-0 attributes or view-independent attributes;
    // the hardware picks up the appropriate attributes for the current view
    return input.color_view0;
}
```

Performance Considerations

When using Multi-View Rendering, the following factors affect the potential performance gains:

- Computational complexity of the vertex, tessellation, and geometry shaders: the more intensive the shaders, the greater the gains

- Overall content in the scene: denser geometry yields greater gains

- Overlap between views: the more geometry the views share, the better the performance
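The first two factors follow from a simple first-order cost model, sketched below under stated assumptions rather than as a measured result: if g is the per-view cost of the geometry stages and p the per-view cost of rasterization and pixel shading, drawing n views separately costs n·(g + p), while MVR runs the geometry stages once for a cost of g + n·p. The function name mvrSpeedup is hypothetical.

```cpp
#include <cassert>

// Simplified first-order cost model (an assumption, not a measured result):
//   g = per-view cost of vertex/tessellation/geometry work,
//   p = per-view cost of rasterization and pixel shading,
//   n = number of views.
// Drawing each view separately costs n * (g + p); with MVR the geometry
// stages run once, so the cost is g + n * p.
double mvrSpeedup(double g, double p, int n) {
    assert(g >= 0.0 && p > 0.0 && n >= 1 && n <= 4);
    const double separate = n * (g + p);
    const double multiView = g + n * p;
    return separate / multiView;
}
```

With no geometry cost the model predicts no gain (mvrSpeedup(0.0, 1.0, 4) is 1.0), while heavier geometry raises the ratio toward n. The model assumes every view processes the same geometry; a renderer that draws views separately can often cull geometry outside each view, so the less the views overlap, the smaller the real saving, which matches the third factor.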

Easy Access to API Programming

NVIDIA Multi-View Rendering is easy to integrate and significantly benefits geometry-heavy applications. Learn more about this exciting new technology on the MVR developer page.

The NVIDIA Multi-View Rendering interface APIs require NVIDIA graphics drivers R410 or later. The VRWorks Graphics SDK 3.0 release includes the API and sample applications, along with programming guides for NVIDIA developers.

If you’re not currently an NVIDIA developer and want to check out VRWorks, signing up is easy: just click the “join” button at the top of the main NVIDIA Developer page.